In 2026, “tech trends” are less about shiny launches and more about the forces that shape everyday IT work—how software gets built, how systems stay secure, how identity is managed, and how much compute your organization can realistically sustain.

A useful way to make sense of the year is to separate novelty from pressure. Novelty is interesting. Pressure is what changes priorities, budgets, and job roles.

In this post, we discuss the trends that matter across IT (security, software engineering, operations, data, and compliance) and translate them into what they mean for teams trying to deliver reliable outcomes.

AI-assisted development is no longer a curiosity. It’s a throughput multiplier. But the tradeoff isn’t “quality goes down” in a simple way — it’s that failure modes shift.

When teams ship faster with AI help, they often reduce small mistakes while increasing the likelihood of deeper problems: permission boundaries implemented inconsistently, fragile architectures, subtle auth bugs, and “it works in the demo” integrations that don’t hold under real use.

For IT, this creates a new center of gravity: the bottleneck moves from typing to judgment. Architectural review, security review, test design, and production readiness become the scarce skills.

Treat AI-generated code like code written by a very fast junior engineer—useful, but requiring strong guardrails. Standardize reviews, enforce least privilege by default, and invest in testing that targets system-level behavior rather than happy-path correctness.

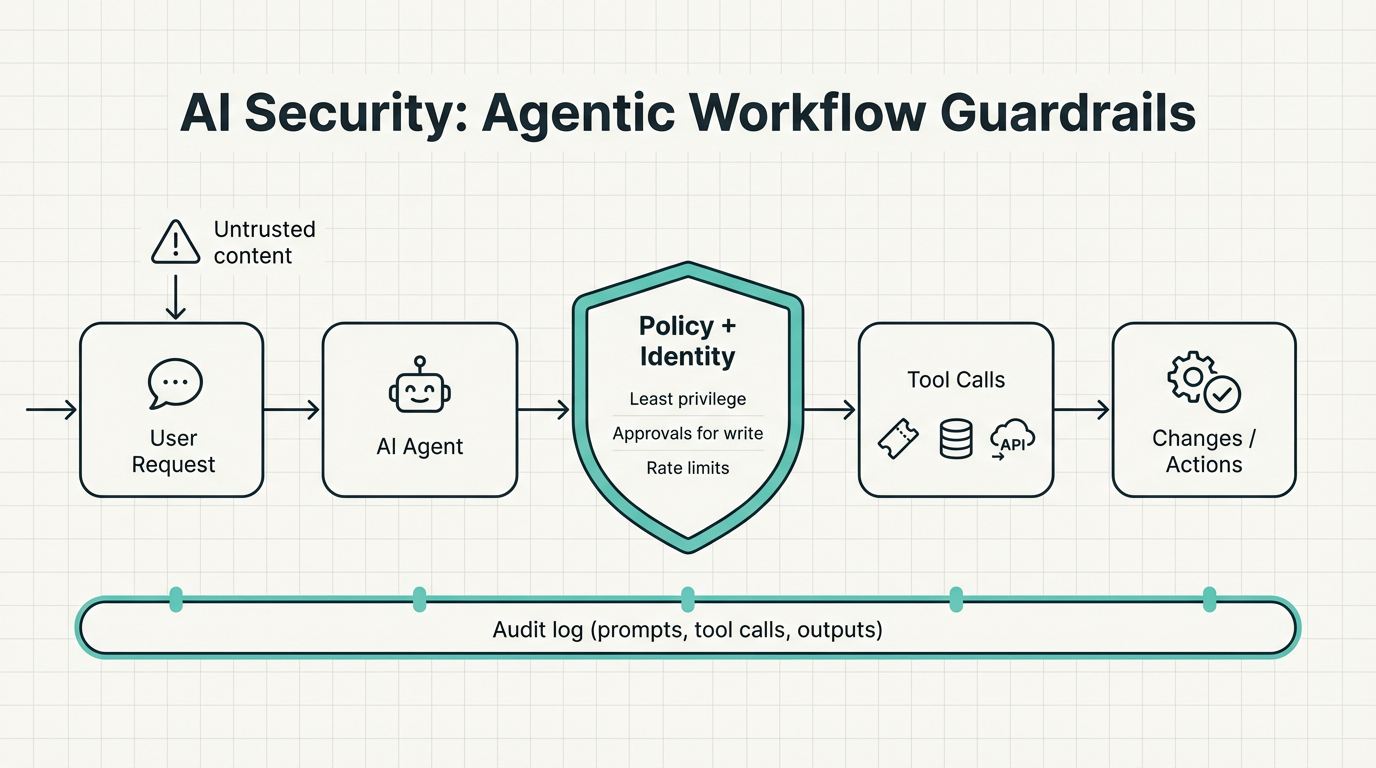

The biggest security story in 2026 isn’t only about prompt injection or hallucinations. It’s about AI systems that take actions: agents.

As soon as an assistant can open tickets, query internal tools, change configs, or trigger workflows, you’re no longer “using AI” — you’ve created a new kind of operator in your environment. That operator needs identity, permissions, logging, and boundaries.

The safest posture is intentionally boring: make read access easy, treat write access as privileged, and scope permissions as tightly as you would for a service account. Log prompts, tool calls, and outputs end-to-end for auditability, and design under the assumption that untrusted content will reach the system — because it will. If you are willing to invest in AI security tooling, check out our Agentic AI Engineering and Agentic AI Vulnerability Management stack, which helps you build and secure AI operators in a practical way.
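To make that posture concrete, here is a minimal sketch of a gate in front of an agent's tool calls. Everything in it is an assumption for illustration: the tool names, the `AgentIdentity` and `AuditLog` classes, and the in-memory log are hypothetical and not part of any particular product. It shows the three ideas from above: reads pass, writes need an explicit grant, and every call is logged together with its prompt and output.

```python
import json
import time
from dataclasses import dataclass, field

# Hypothetical tool names, used only for illustration.
READ_TOOLS = {"search_tickets", "get_config"}
WRITE_TOOLS = {"open_ticket", "change_config"}


@dataclass
class AgentIdentity:
    """An agent gets its own identity and an explicit set of write grants."""
    name: str
    write_grants: set = field(default_factory=set)


@dataclass
class AuditLog:
    """In-memory audit trail; a real system would ship records to durable storage."""
    records: list = field(default_factory=list)

    def write(self, **event):
        event["ts"] = time.time()
        self.records.append(json.dumps(event, sort_keys=True))


def authorize(agent: AgentIdentity, tool: str) -> bool:
    """Reads are allowed, writes need an explicit grant, unknown tools are denied."""
    if tool in READ_TOOLS:
        return True
    if tool in WRITE_TOOLS:
        return tool in agent.write_grants
    return False


def call_tool(agent, tool, args, prompt, handlers, log):
    """Run one tool call through the gate and log prompt, call, and outcome."""
    if not authorize(agent, tool):
        log.write(agent=agent.name, tool=tool, args=args, prompt=prompt,
                  allowed=False, output=None)
        raise PermissionError(f"{agent.name} may not call {tool}")
    output = handlers[tool](**args)
    log.write(agent=agent.name, tool=tool, args=args, prompt=prompt,
              allowed=True, output=output)
    return output
```

With an agent created as `AgentIdentity("helpdesk-bot", write_grants={"open_ticket"})`, a call to `search_tickets` or `open_ticket` goes through, while `change_config` raises `PermissionError`. In both cases a record lands in the audit log, so denied attempts stay visible too.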

A growing share of IT strategy is constrained by something teams don’t control: the availability of advanced compute. The supply chain is concentrated, lead times can be long, and demand spikes don’t politely align with your roadmap.

This matters even if you’re “just using the cloud.” Availability and pricing flow downstream into architecture choices, vendor decisions, and what product teams think is feasible.

The practical implication is resilience: keep the ability to pivot across providers, model sizes, and deployment patterns, so you’re not locked into assumptions that break when capacity or pricing changes.
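One way to keep that ability to pivot is to put a thin abstraction between your application and any single provider. The sketch below is a simplified illustration, not a reference design: `Backend`, `ProviderUnavailable`, and `complete_with_fallback` are hypothetical names, and each backend is just a function that takes a prompt and returns text.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


class ProviderUnavailable(Exception):
    """Raised by a backend when it has no capacity or is over budget."""


@dataclass
class Backend:
    name: str                       # hypothetical label, e.g. "primary"
    complete: Callable[[str], str]  # takes a prompt, returns generated text


def complete_with_fallback(prompt: str, backends: Sequence[Backend]) -> tuple[str, str]:
    """Try backends in preference order and return (backend_name, text)."""
    errors = []
    for backend in backends:
        try:
            return backend.name, backend.complete(prompt)
        except ProviderUnavailable as exc:
            # Remember why this backend was skipped, then try the next one.
            errors.append(f"{backend.name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(errors))
```

The design choice here is that application code only ever sees the `complete` signature. Swapping a provider, or adding a smaller model as a cheaper fallback, then becomes a change to the backend list rather than to every caller.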

AI isn’t “just software.” As organizations scale AI usage, they also scale electricity demand, and that reality is starting to influence what’s possible, what it costs, and how quickly it can roll out. One visible signal: nuclear energy is moving back onto the roadmap in multiple regions as demand grows, with life extensions for existing reactors, plans for new builds, and accelerating interest in small modular reactors as a source of steady, low‑carbon baseload power.

Most companies won’t feel this as a facilities problem. They’ll feel it as second‑order effects in the market: cloud pricing and availability, slower timelines for capacity expansion in certain regions, hardware lead times, and more frequent executive questions like “what are we getting for this spend?”

The practical outlook: treat energy as a strategic constraint that can shape roadmaps. Expect more pressure to justify compute-heavy initiatives and more scrutiny of workloads that scale cost without a clear business outcome.

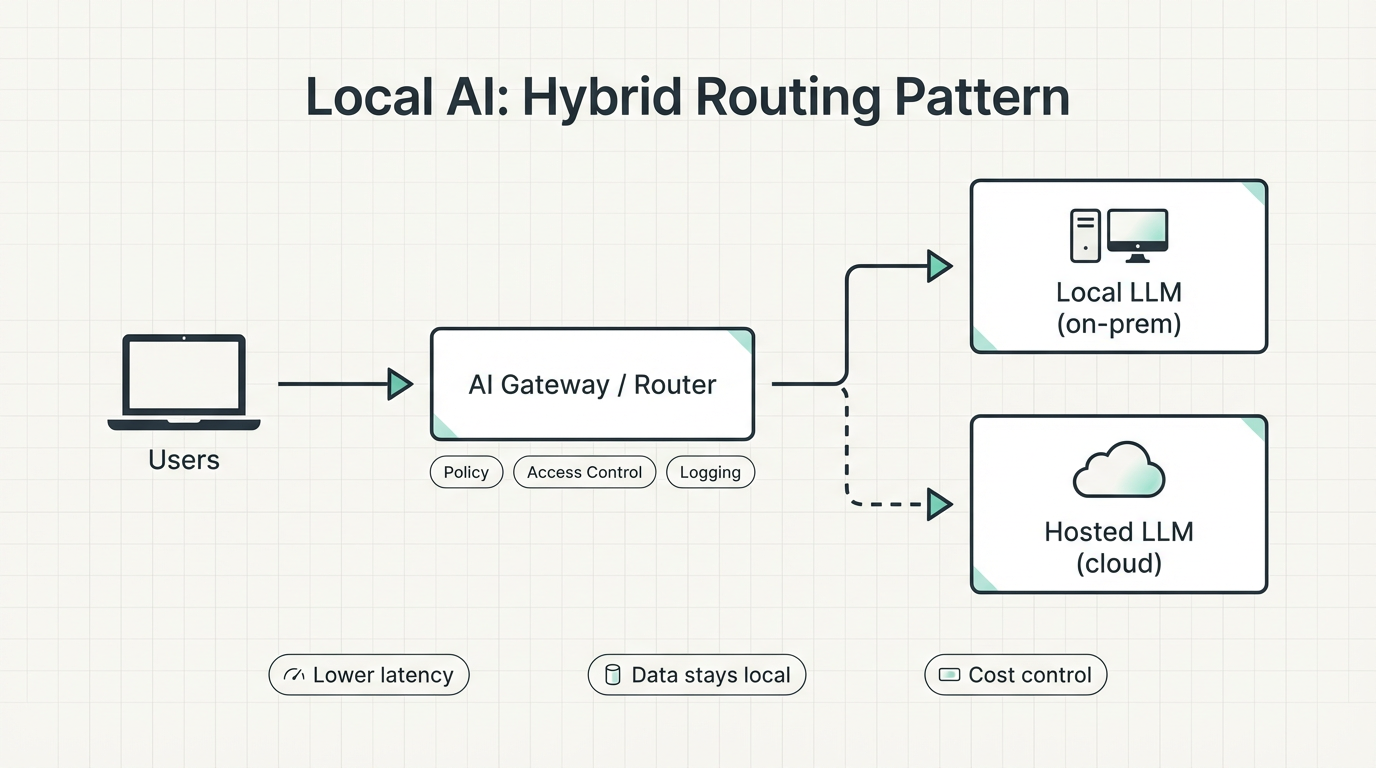

Those compute and energy constraints are one reason local AI is re-entering the conversation. For many IT teams, “AI adoption” currently means one thing: sending more data and more queries to external services, and hoping the bill, latency, and compliance questions stay manageable.

Local LLMs are not magic, and they won’t replace frontier cloud models for every use case. But they are increasingly “good enough” for many everyday workflows: drafting, summarization, classification, coding assistance, lightweight internal Q&A, and private copilots for repeatable tasks. The value proposition is straightforward: data stays in your environment, latency can be more predictable, and costs become easier to manage as usage grows.

The practical question is: where do we run it? For many organizations, the answer isn’t automatically an expensive server rack. It can be a small, always-on machine in the office or lab. As local AI becomes more practical, the Mac mini has quietly become a go-to machine for this. It’s cheap enough to justify, small enough to ignore, and efficient enough to leave running without it turning into an “infrastructure project.” Apple Silicon systems (with unified memory, low power draw, low noise, and strong performance-per-watt) are convenient boxes for running small-to-mid local models and embeddings. Tools like Ollama or LM Studio make it easy to get started. They may not replace big GPU servers, but they can be a surprisingly effective starting point for pilots and internal tooling.
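To give a feel for how little glue code a first pilot needs, here is a minimal sketch that talks to a local Ollama instance over its HTTP API using only the Python standard library. It assumes Ollama is running on its default port (11434) and that a model has already been pulled. The model name in the comment is a placeholder, and the helper names `build_request` and `generate` are ours, not part of Ollama.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (assumption: default port).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for the local server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["response"]


# Example call (requires a running Ollama server and a pulled model;
# "llama3.2" is only a placeholder model name):
# print(generate("llama3.2", "Summarize this ticket in two sentences: ..."))
```

Nothing in this request leaves the machine, which is the point of the exercise. For anything beyond a pilot you would still want timeouts, error handling, and access control in front of the endpoint.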

If you’re exploring local AI, treat it like any other IT capability: set expectations, define supported use cases, and be honest about trade-offs. You gain privacy and control, but you also inherit operational responsibility, like updates, model selection, access control, and evaluation. The most durable pattern is hybrid: run local by default for routine work, and route to a hosted model only when the task clearly needs it. Make sure to check out our latest blog post on Ollama’s 2025 updates for more details on local AI options.
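The hybrid pattern can be expressed as a small routing policy. The sketch below is one possible shape under stated assumptions: the task kinds, the prompt-length threshold, and the rule that sensitive data always stays local are hypothetical policy choices for illustration, and you would tune them against your own evaluations.

```python
from dataclasses import dataclass

LOCAL, HOSTED = "local", "hosted"

# Hypothetical policy values, chosen only for illustration.
NEEDS_HOSTED = {"complex_reasoning", "long_context_analysis"}
MAX_LOCAL_PROMPT_CHARS = 8_000


@dataclass(frozen=True)
class Task:
    kind: str
    prompt: str
    contains_sensitive_data: bool = False


def route(task: Task) -> str:
    """Local by default; hosted only when the task clearly needs it."""
    # Assumed policy: sensitive data never leaves the environment.
    if task.contains_sensitive_data:
        return LOCAL
    # Escalate when the task kind or prompt size exceeds what we expect
    # the local model to handle well.
    if task.kind in NEEDS_HOSTED or len(task.prompt) > MAX_LOCAL_PROMPT_CHARS:
        return HOSTED
    return LOCAL
```

For example, `route(Task("summarize", "short text"))` stays local, while `route(Task("complex_reasoning", "plan a migration"))` goes to the hosted model. Keeping the policy in one function makes it easy to review, log, and adjust as local models improve.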

For most IT teams in 2026, quantum computing is less an immediate technology decision and more a matter of platform strategy and expectation management. Progress is real, but uneven: there are visible breakthroughs and a geopolitically driven race for talent, research, standards, and industrial applications. That race will shape which vendors emerge, where ecosystems form, and how quickly certain industries gain access to capabilities that still look experimental today.

In practice, quantum will show up first not as a “new data center,” but as a specialized building block, mostly via cloud services and hybrid approaches. Its relevance starts where classical systems hit limits: simulation, certain search and sampling problems, niche cases in research and industry, and, more speculatively, complex AI systems and agents. For IT, the goal is to be ready to evaluate claims and separate signal from hype. A sensible stance is to build internal baseline expertise, identify candidate workloads, and keep quantum on the roadmap as an observation and evaluation track, so you can act when it starts entering your stack “as a service.”

In the end, all of these trends boil down to a simple reality: AI won’t be “just another tool,” but a new layer in your day-to-day workflows, which makes it a productivity lever and a risk at the same time. Staying ahead in 2026 is not about piling on more models and tools, but about solid engineering: AI coding that makes teams measurably faster, AI security that keeps agents, systems, and automations governable, workflow automation that scales processes reliably, and local AI where privacy, cost, and latency become real advantages. If you treat these topics as one coherent architecture rather than as isolated initiatives, you get robust systems instead of short-lived demos.

If you want to turn these topics into something that works in production, not just in slides, Infralovers can help you design, implement, and harden AI coding, AI security, workflow automation, and local AI in a way that fits your reality and scales.
