
Remember last July when we dug into Ollama and its promise for local AI? Well, a lot has happened since then. Ollama has rolled out some pretty significant updates that show they're not just sticking to the command line anymore — though they haven't forgotten their roots either.

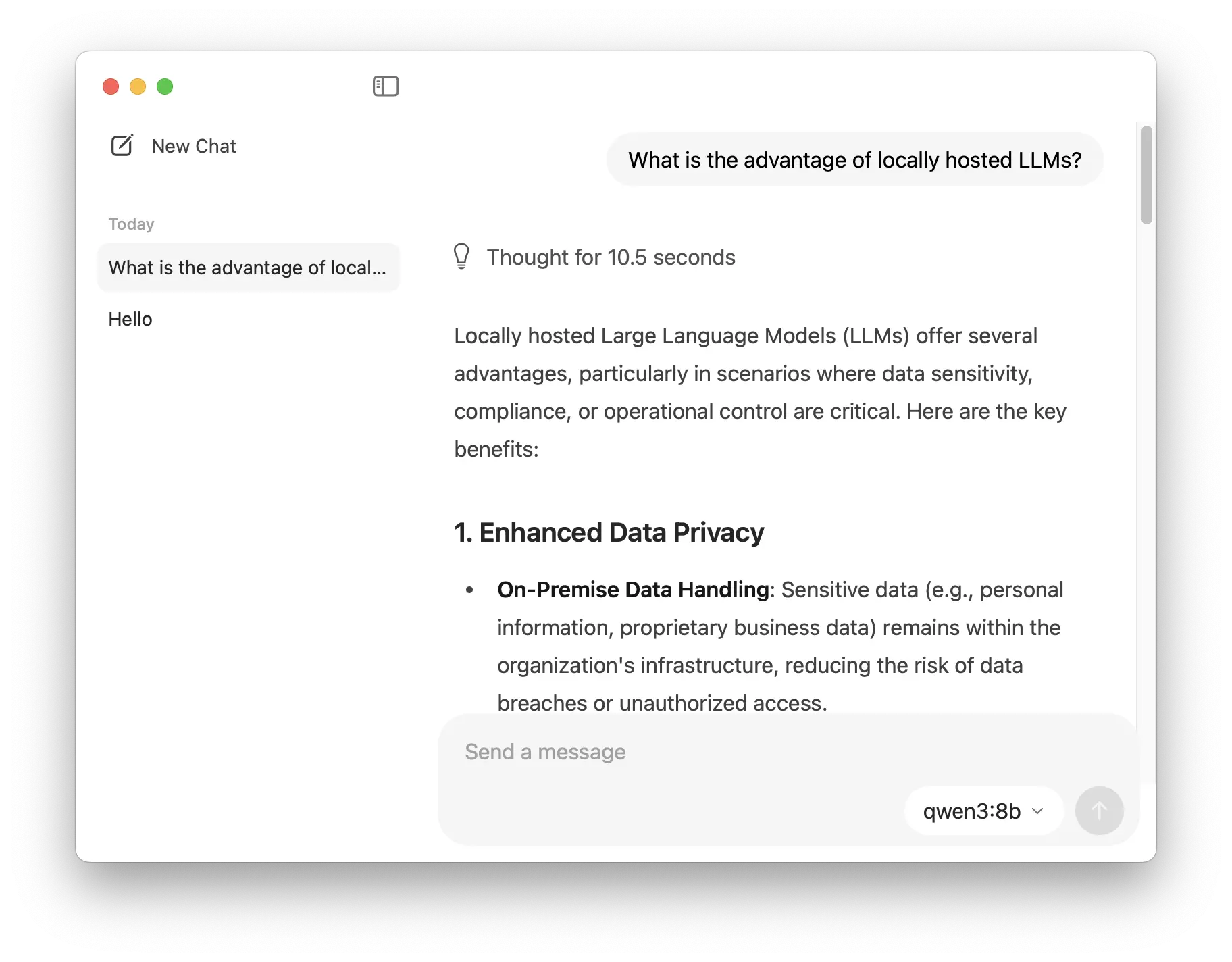

On July 30, 2025, Ollama introduced a native desktop application for macOS and Windows. It is an important usability step that makes the tool accessible to a wider audience. The interface is clean and focuses on the essentials, a chat window and a history of past conversations, without unnecessary complexity. Users can drag and drop PDFs or images into the chat window, provided the selected model supports that input (images, for example, require a vision-capable model). The new UI also introduces a few settings. A context-length slider, for example, controls how much of the conversation and attached material the model can take into account at once, which proves valuable during extended sessions.
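Developers can reach the same two features through Ollama's local REST API. The sketch below assumes a server running on the default `http://localhost:11434` and uses only the Python standard library. `build_chat_payload` and `chat` are hypothetical helper names. The `num_ctx` option sets the context window in tokens, and we assume this is what the app's slider adjusts. The `images` field carries base64-encoded images for vision-capable models.

```python
import base64
import json
import urllib.request

# Default endpoint of a locally running Ollama server (assumed setup).
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_chat_payload(model, prompt, num_ctx=None, image_bytes=None):
    """Build a non-streaming /api/chat request body."""
    message = {"role": "user", "content": prompt}
    if image_bytes is not None:
        # Vision-capable models accept base64-encoded images per message.
        message["images"] = [base64.b64encode(image_bytes).decode("ascii")]
    payload = {"model": model, "messages": [message], "stream": False}
    if num_ctx is not None:
        # num_ctx sets the context window in tokens (assumed to be the
        # API-side counterpart of the desktop app's context-length slider).
        payload["options"] = {"num_ctx": num_ctx}
    return payload


def chat(payload, url=OLLAMA_URL):
    """POST the payload to the server and return the assistant's reply text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    # Non-streaming responses carry the reply under message.content.
    return body["message"]["content"]
```

For example, `chat(build_chat_payload("llama3.2", "Summarize our meeting notes.", num_ctx=8192))` assumes the `llama3.2` model has already been pulled. Keep in mind that larger `num_ctx` values need more memory.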

In August 2025, Ollama launched Turbo in preview, a cloud inference service priced at $20 per month. This marks a shift for a platform originally focused on local AI deployment. Turbo offers access to datacenter-grade hardware for running larger models that would be impractical on typical consumer hardware.

The announcement has generated mixed reactions. Some appreciate the option of better performance, while others question whether it fits Ollama's local-first philosophy. At $20/month, the service also competes with well-established providers such as Anthropic and OpenAI, whose subscriptions to their proprietary models (Claude and ChatGPT) are priced similarly.

Important Note: Local Ollama functionality remains completely free and requires no account. You can download, run, and manage models locally without any registration or subscription. The account system only becomes relevant when accessing cloud-based features like Turbo.
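Local use needs no account, so a local call and a Turbo call differ only in where the request goes and whether it carries a key. The helper below is a hypothetical sketch. The local URL is Ollama's default. The Turbo host (`https://ollama.com`) and the `Bearer` header format are assumptions, so verify them against Ollama's current documentation.

```python
# Ollama's default local address; no account or key is involved.
LOCAL_HOST = "http://localhost:11434"
# Assumption: Turbo is reached at this host with a key from an ollama.com account.
TURBO_HOST = "https://ollama.com"


def request_target(use_turbo, api_key=None):
    """Return (chat URL, headers) for local or Turbo inference (hypothetical helper)."""
    headers = {"Content-Type": "application/json"}
    if not use_turbo:
        # Local inference: no registration, no Authorization header.
        return LOCAL_HOST + "/api/chat", headers
    if not api_key:
        raise ValueError("Turbo requires an API key from an ollama.com account")
    # Assumption: the key is sent as a Bearer token.
    headers["Authorization"] = f"Bearer {api_key}"
    return TURBO_HOST + "/api/chat", headers
```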

There were also further API enhancements that developers should be aware of:

Secure Minions was announced in June 2025 together with Stanford's Hazy Research lab. It lets local Ollama models collaborate with more powerful cloud models while keeping all exchanged data end-to-end encrypted. The cloud model runs on NVIDIA H100 GPUs in confidential computing mode, so sensitive context never leaves the device in plaintext. According to the announcement, this delivers up to 98% of frontier-model accuracy at 5–30x lower cost, while confidential computing adds under 1% latency.
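The data flow behind this kind of local/cloud collaboration fits in a few lines. This is a toy illustration loosely modeled on the Minion protocol described by Hazy Research. It is not the Minions library or its actual prompts. `minion_style_answer`, `local_model` and `remote_model` are hypothetical names, and the models are plain callables that you would back with real model calls. The encryption layer that Secure Minions adds is not modeled. The point is that the remote model only sees the task and the local model's short reply, never the full private document.

```python
from typing import Callable, List

# Hypothetical stand-ins for a local Ollama model and a remote frontier model.
# Neither type nor the function below is part of the Minions library.
LocalModel = Callable[[str, str], str]  # (instruction, private document) -> reply
RemoteModel = Callable[[str], str]      # (prompt) -> reply


def minion_style_answer(
    task: str,
    document: str,
    local_model: LocalModel,
    remote_model: RemoteModel,
    transcript: List[str],
) -> str:
    """Toy local/remote split: the private document stays with the local model.

    Every prompt sent to the remote model is appended to `transcript`
    so the data flow can be inspected.
    """
    # 1. The remote model turns the task into an instruction for the local model.
    plan_prompt = f"Write one instruction for a small model to help with: {task}"
    transcript.append(plan_prompt)
    instruction = remote_model(plan_prompt)

    # 2. The local model reads the full private document on-device.
    local_reply = local_model(instruction, document)

    # 3. The remote model composes the final answer from the short local reply.
    final_prompt = f"Task: {task}\nLocal findings: {local_reply}\nFinal answer:"
    transcript.append(final_prompt)
    return remote_model(final_prompt)
```

In a real setup, `local_model` might call a small model through Ollama's local API. Secure Minions would additionally encrypt every prompt sent to the remote side. The local reply itself can still contain sensitive details, so this split reduces exposure rather than eliminating it.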

Several optimization updates enhance performance and usability:

The 2025 updates show Ollama evolving from a simple local model runner into a broader AI platform with both local and cloud-powered options. Paid services like Turbo have sparked debate, but Ollama continues to prioritize free, open-source local functionality, which keeps it accessible to privacy-focused and cost-conscious users. Looking ahead, the team has hinted at features that would deepen desktop integration. These include a computer-use agent for interacting with local files and applications, similar to the computer-use capabilities Anthropic has introduced for Claude.

This new hybrid approach positions Ollama to serve both individual developers seeking local AI capabilities and organizations requiring scalable, high-performance inference — though the long-term success of this strategy will depend on competitive pricing, model availability, and continued investment in local-first features.

We all know AI is a never-ending journey of improvement and adaptation, and it is often hard to stay up to date with the latest advancements. We at Infralovers are committed to helping you navigate this landscape, so be sure to subscribe to our newsletter for the latest updates and insights.

Are you interested in our courses, or do you simply have a question that needs answering? You can contact us at any time! We will do our best to answer all your questions.
