The world of AI is evolving rapidly, and with it, the way we interact with APIs and infrastructure. At Infralovers, we're excited to explore how Postman's new Model Context Protocol (MCP) servers can change the way we integrate AI with our infrastructure management tools, particularly with GitHub Copilot and command-line interfaces (CLIs).

The Model Context Protocol is an open standard that enables AI systems to securely access and interact with external data sources and tools. Think of it as a universal translator that allows AI assistants like GitHub Copilot to understand and work with your specific tools, APIs, and data sources in real time.

MCP servers act as intermediaries that expose standardized interfaces through which AI systems can interact with various services: infrastructure automation platforms like HCP Terraform (formerly Terraform Cloud), container orchestration platforms like Kubernetes, and messaging platforms like Discord.
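Under the hood, MCP messages are JSON-RPC 2.0 objects: a client first asks a server which tools it offers (`tools/list`) and then invokes one by name with arguments (`tools/call`). The following is a minimal sketch of building those two requests; the helper `makeRequest`, the tool name, and the organization name are illustrative assumptions, not part of any MCP SDK.

```javascript
// Minimal sketch: building MCP requests as JSON-RPC 2.0 objects.
// `makeRequest` is a hypothetical helper, not part of an MCP SDK.
let nextId = 1;

function makeRequest(method, params) {
  // Every JSON-RPC request carries the protocol version, a unique id,
  // the method name, and (optionally) parameters.
  const request = { jsonrpc: "2.0", id: nextId++, method };
  if (params !== undefined) request.params = params;
  return request;
}

// Ask the server which tools it exposes.
const listToolsRequest = makeRequest("tools/list");

// Invoke one tool by name; the tool name and argument are examples only.
const callToolRequest = makeRequest("tools/call", {
  name: "get_all_workspaces",
  arguments: { orgName: "example-org" },
});

console.log(JSON.stringify(listToolsRequest)); // {"jsonrpc":"2.0","id":1,"method":"tools/list"}
console.log(JSON.stringify(callToolRequest));
```

The server answers each request with a JSON-RPC response carrying the same `id`, which is how a client matches replies to requests.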

Postman has introduced the ability to create custom MCP servers that expose REST API collections as MCP tools. This means any API you already maintain as a Postman collection can be made available to AI assistants.

Let's dive into a practical example using our HCP Terraform MCP server implementation.

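In our implementation, we've created an MCP server that exposes HashiCorp HCP Terraform APIs. This server provides tools for retrieving organization information, listing workspaces, and querying subscription details, among other HCP Terraform API operations.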

We generated this MCP server on the fly from an existing API collection, using Postman's new MCP server generation capabilities.

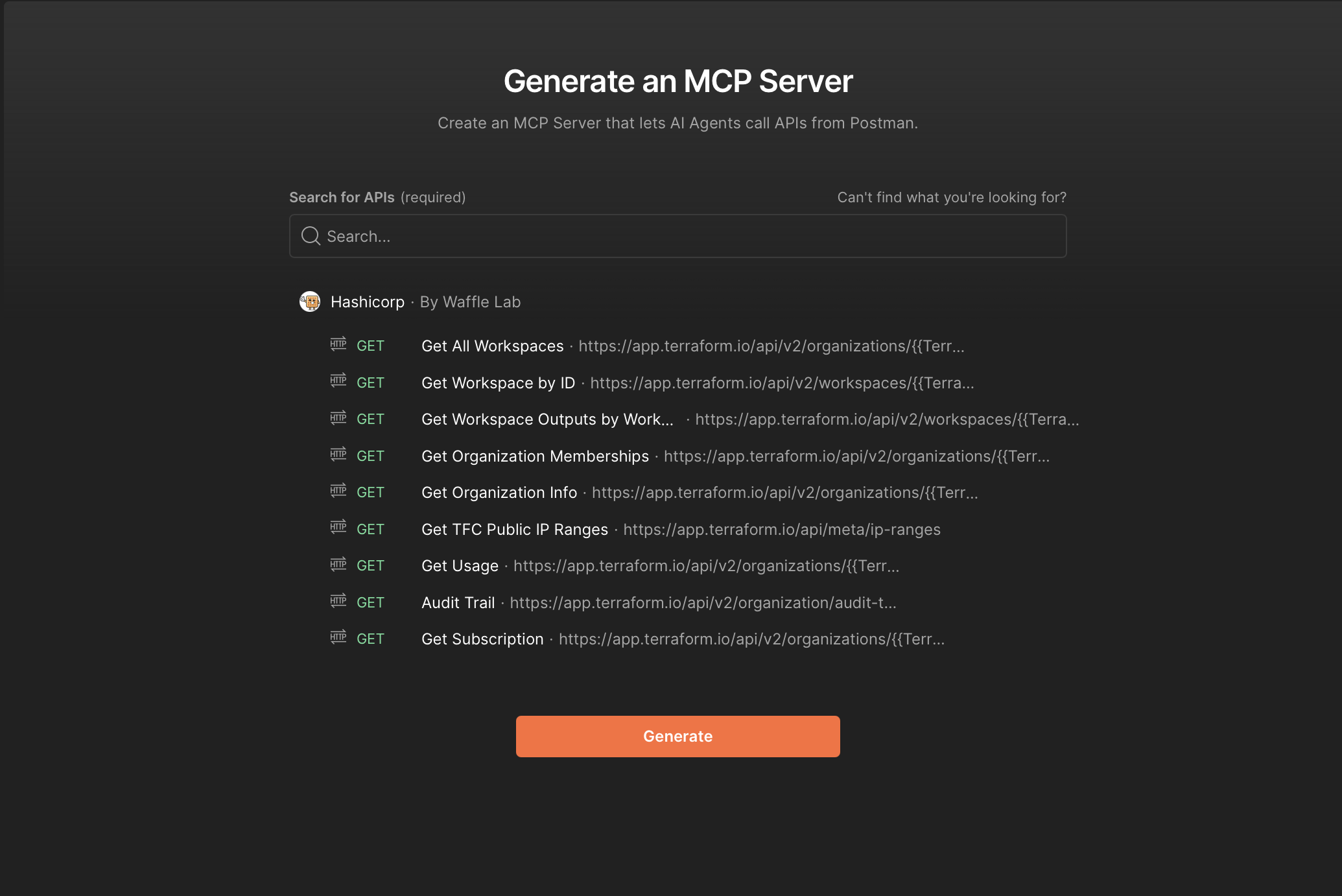

To do this, open Postman's new MCP Generator and select the collection you want to convert into an MCP server.

Once generated, the MCP server can be downloaded, run locally or even easily converted into a Docker container for deployment in any environment.

The downloaded MCP server follows a clean, modular architecture:

```text
postman-terraform-mcp-server/
├── index.js          # Entry point
├── mcpServer.js      # Core MCP server logic
├── package.json      # Dependencies
├── Dockerfile        # Containerization
├── README.md         # Documentation
├── lib/              # Shared libraries
├── commands/         # CLI commands
└── tools/
    └── hashicorp/
        └── hashicorp-terraform-cloud/
            ├── get-organization-info.js
            ├── get-all-workspaces.js
            ├── get-subscription.js
            └── [additional API tools...]
```

Each tool file corresponds to a specific HCP Terraform API endpoint and is generated automatically from the Postman collection.
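To illustrate how a tool file and the server's dispatch logic could fit together, here is a hypothetical, simplified sketch; the files Postman actually generates may be structured differently. It assumes the HCP Terraform endpoint `GET /api/v2/organizations/{organization_name}/workspaces`, bearer-token authentication, and Node.js 18+ for the global `fetch`. The names `getAllWorkspacesTool`, `registry`, `listToolDefinitions`, and `dispatch` are illustrative.

```javascript
// Hypothetical tool module (not the generated code): a tool definition with a
// JSON Schema for its input, plus an async function that calls the API.
const getAllWorkspacesTool = {
  definition: {
    name: "get_all_workspaces",
    description: "List all workspaces in an HCP Terraform organization",
    inputSchema: {
      type: "object",
      properties: { orgName: { type: "string" } },
      required: ["orgName"],
    },
  },
  // `fetchImpl` is injectable so the function can be tested without network access.
  async execute({ orgName }, { token, fetchImpl = fetch }) {
    const url = `https://app.terraform.io/api/v2/organizations/${encodeURIComponent(orgName)}/workspaces`;
    const response = await fetchImpl(url, {
      headers: {
        Authorization: `Bearer ${token}`,
        "Content-Type": "application/vnd.api+json",
      },
    });
    if (!response.ok) throw new Error(`HCP Terraform API returned ${response.status}`);
    return response.json();
  },
};

// Hypothetical registry: tools/list returns the definitions,
// tools/call looks up a tool by name and runs it.
const registry = new Map([[getAllWorkspacesTool.definition.name, getAllWorkspacesTool]]);

function listToolDefinitions() {
  return [...registry.values()].map((tool) => tool.definition);
}

async function dispatch(name, args, context) {
  const tool = registry.get(name);
  if (!tool) throw new Error(`Unknown tool: ${name}`);
  return tool.execute(args, context);
}
```

Keeping one file per endpoint means adding an API operation only requires a new module and a registry entry; the dispatch logic stays unchanged.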

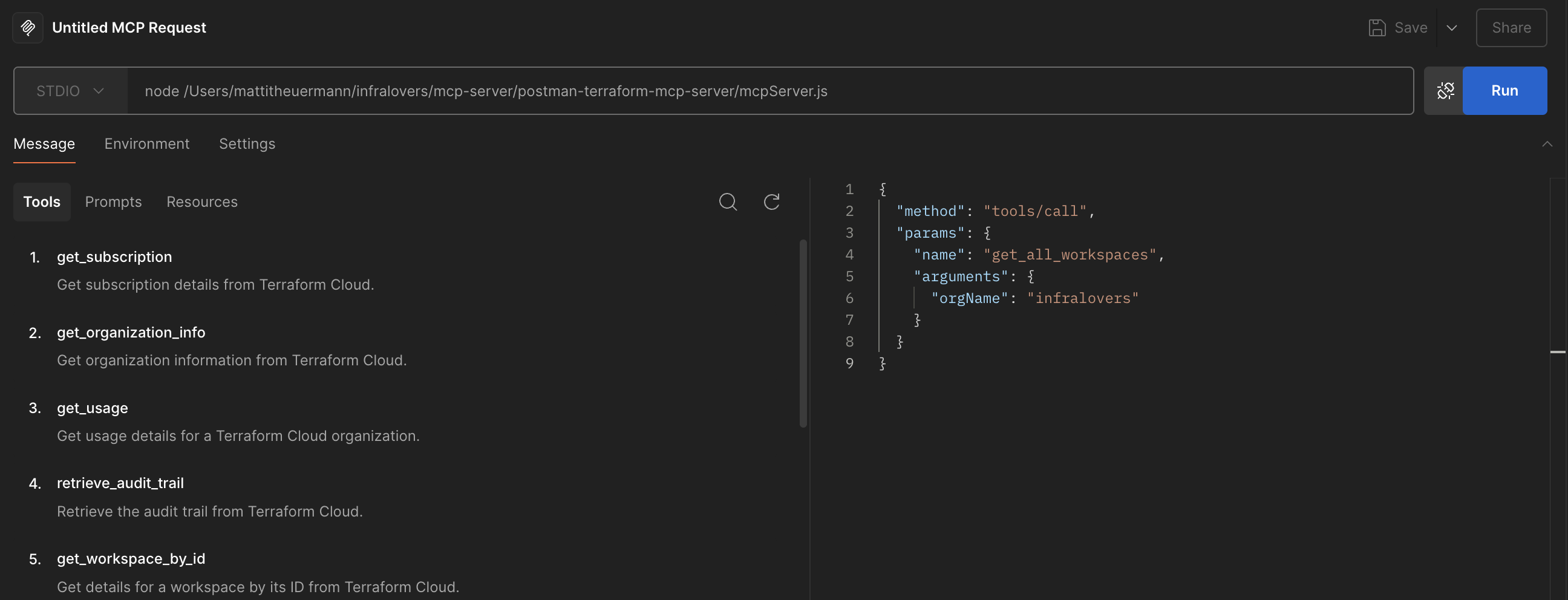

Postman's MCP servers can be used directly within the Postman application. For this we need to:

Install the dependencies:

```bash
# Navigate to your MCP server directory root
cd /path/to/postman-terraform-mcp-server

# Install dependencies
npm install
```

Configure the API keys in the .env file:

```bash
HASHICORP_API_KEY=your-hcp-terraform-api-key
```
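Because the server reads its key from the environment, it helps to fail fast with a clear message when the variable is missing. A minimal sketch, assuming the variable name HASHICORP_API_KEY from the .env file above and that the file has already been loaded into `process.env`; the helper `requireEnv` is hypothetical.

```javascript
// Hypothetical helper: read a required environment variable or fail early.
// Assumes the .env file has already been loaded into process.env.
function requireEnv(name, env = process.env) {
  const value = env[name];
  if (typeof value !== "string" || value.trim() === "") {
    throw new Error(`Missing required environment variable ${name}; set it in your .env file.`);
  }
  return value.trim();
}

// Example: pass an explicit object instead of process.env for illustration.
const apiKey = requireEnv("HASHICORP_API_KEY", { HASHICORP_API_KEY: "example-token" });
console.log(apiKey.length > 0); // true
```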

Open Postman and navigate to the workspace where you want to create the MCP request.

Click on the "New" button and select "MCP Request" from the menu.

Choose the communication method for the MCP server: STDIO or HTTP with Server-Sent Events (SSE).

Enter the server's command and arguments (for STDIO) or the URL (for HTTP with SSE).

All of these steps are documented in detail in the README.md file of the MCP server we generated earlier.

Once configured, you can start making requests to the MCP server directly from Postman.
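With STDIO, the client starts the server as a child process and the two exchange JSON-RPC messages over standard input and output, one message per line; the messages themselves must not contain raw newlines. The sketch below shows only that framing. It is a simplified illustration, not the MCP SDK's transport, and `encodeMessage` and `LineDecoder` are hypothetical names.

```javascript
// Simplified sketch of newline-delimited JSON framing, as used by the MCP STDIO transport.
function encodeMessage(message) {
  // JSON.stringify without indentation never emits raw newlines,
  // because newlines inside strings are escaped as \n.
  return JSON.stringify(message) + "\n";
}

class LineDecoder {
  constructor() {
    this.buffer = "";
  }

  // Feed a chunk of text (chunks may split messages arbitrarily);
  // returns every complete message parsed so far.
  push(chunk) {
    this.buffer += chunk;
    const messages = [];
    let newline;
    while ((newline = this.buffer.indexOf("\n")) !== -1) {
      const line = this.buffer.slice(0, newline).trim();
      this.buffer = this.buffer.slice(newline + 1);
      if (line) messages.push(JSON.parse(line));
    }
    return messages;
  }
}

// Usage: a message split across two chunks is reassembled.
const wire = encodeMessage({ jsonrpc: "2.0", id: 1, method: "tools/list" });
const decoder = new LineDecoder();
console.log(decoder.push(wire.slice(0, 10)).length); // 0
console.log(decoder.push(wire.slice(10))[0].method); // tools/list
```

Buffering until a newline arrives matters because a pipe delivers data in arbitrary chunks, not in whole messages.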

One of the most powerful integrations is connecting your MCP server to GitHub Copilot in VS Code. Here's how to set it up:

First, make sure the MCP server's dependencies are installed; VS Code launches the server itself using the command you configure in the next step:

```bash
# Navigate to your MCP server directory
cd /path/to/postman-terraform-mcp-server

# Install dependencies
npm install
```

Add the following JSON block to your User Settings (JSON) file in VS Code. You can do this by opening the Command Palette (Cmd/Ctrl + Shift + P) and selecting "Preferences: Open User Settings (JSON)".

```json
{
  "mcp": {
    "inputs": [
      {
        "type": "promptString",
        "id": "api-key",
        "description": "API Key",
        "password": true
      }
    ],
    "servers": {
      "<server_name>": {
        "command": "node",
        "args": ["<absolute/path/to/mcpServer.js>"],
        "env": {
          "<API_KEY>": "${input:api-key}"
        }
      }
    }
  }
}
```

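Replace <server_name> with a name for your MCP server, and <absolute/path/to/mcpServer.js> with the absolute path to the MCP server script. If the node command is not on your PATH, replace "node" with the absolute path to your Node.js executable.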

Replace <API_KEY> with the environment variable name your MCP server expects (e.g., HASHICORP_API_KEY).

In the case of our Terraform MCP server, it could look like this:

```json
{
  "mcp": {
    "inputs": [
      {
        "type": "promptString",
        "id": "hashicorp-api-key",
        "description": "HashiCorp API Key",
        "password": true
      }
    ],
    "servers": {
      "terraform": {
        "command": "node",
        "args": ["/path/to/postman-terraform-mcp-server/mcpServer.js"],
        "env": {
          "HASHICORP_API_KEY": "${input:hashicorp-api-key}"
        }
      }
    }
  }
}
```
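In both JSON blocks, the input's id is referenced from env as ${input:<id>}, so VS Code prompts for the key (masked, because of "password": true) instead of storing it in the settings file. As an illustration of how the placeholders map onto that structure, here is a hypothetical helper, `buildMcpSettings`, that produces the same mcp block from a server name, script path, environment variable name, and input id; it only builds the object and does not write any settings.

```javascript
// Hypothetical helper that generates the "mcp" settings block shown above.
// It only builds the object; copying it into settings.json is left to you.
function buildMcpSettings({ serverName, scriptPath, envVar, inputId, description }) {
  return {
    mcp: {
      inputs: [
        {
          type: "promptString",
          id: inputId,
          description,
          password: true, // hides the typed value when VS Code prompts for it
        },
      ],
      servers: {
        [serverName]: {
          command: "node",
          args: [scriptPath],
          // "${input:<id>}" tells VS Code to substitute the prompted value.
          env: { [envVar]: `\${input:${inputId}}` },
        },
      },
    },
  };
}

const settings = buildMcpSettings({
  serverName: "terraform",
  scriptPath: "/path/to/postman-terraform-mcp-server/mcpServer.js",
  envVar: "HASHICORP_API_KEY",
  inputId: "hashicorp-api-key",
  description: "HashiCorp API Key",
});
console.log(JSON.stringify(settings, null, 2));
```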

Once configured, you can interact with GitHub Copilot using natural language queries that leverage your Terraform infrastructure:

Example Interaction:

User: "What workspaces do we have in our Infralovers organization?"

GitHub Copilot: Let me check your HCP Terraform organization...

[Calls bb7_get_all_workspaces with orgName: "Infralovers"]

Result: Found 43 workspaces including:

- VPC and networking infrastructure

- Certificate management

- Training environments

- Production workloads
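Behind an exchange like this, the assistant sends a tools/call request and receives a result whose content array holds one or more items; text items carry the tool's output in a text field, and isError signals that the tool itself failed. A minimal sketch of unpacking such a result, using a hand-written sample response rather than real output; `extractText` is a hypothetical helper.

```javascript
// Minimal sketch: unpack the result of an MCP tools/call request.
// The sample response below is hand-written for illustration.
function extractText(response) {
  if (response.error) {
    // Protocol-level failure (e.g. unknown tool), reported as a JSON-RPC error.
    throw new Error(`JSON-RPC error ${response.error.code}: ${response.error.message}`);
  }
  const { content = [], isError = false } = response.result;
  const text = content
    .filter((item) => item.type === "text")
    .map((item) => item.text)
    .join("\n");
  // Tool-level failure: the call went through, but the tool reported an error.
  if (isError) throw new Error(`Tool reported an error: ${text}`);
  return text;
}

const sampleResponse = {
  jsonrpc: "2.0",
  id: 2,
  result: {
    content: [{ type: "text", text: '{"data":[{"attributes":{"name":"one"}}]}' }],
    isError: false,
  },
};

console.log(JSON.parse(extractText(sampleResponse)).data[0].attributes.name); // one
```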

Instead of Postman or GitHub Copilot, you can also interact with your MCP server from the command line. One option is the mcp-cli tool, which provides a command-line interface for running queries directly against MCP servers:

```bash
# Clone the repository:
git clone https://github.com/chrishayuk/mcp-cli
cd mcp-cli

# Install the package with development dependencies:
pip install -e ".[cli,dev]"

# Run the CLI:
mcp-cli --help
```

After installation, you can configure your MCP server connection in the server_config.json file:

```json
{
  "mcpServers": {
    "terraform": {
      "command": "<absolute/path/to/node>",
      "args": ["<absolute/path/to/mcpServer.js>"],
      "env": {
        "HASHICORP_API_KEY": "<your-hcp-terraform-api-key>"
      }
    }
  }
}
```

Make sure to replace the paths and API key with your actual values.
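A leftover placeholder such as <absolute/path/to/node> typically surfaces only as a confusing startup error, so a quick check before launching the CLI can help. A hypothetical sketch, `findPlaceholders`, that walks the parsed server_config.json and reports every string still containing an <...> placeholder; the node path in the sample config is an example value, and in practice you would read the file with fs.readFileSync and JSON.parse.

```javascript
// Hypothetical check: find strings in a parsed config that still contain <...> placeholders.
function findPlaceholders(value, path = "$") {
  if (typeof value === "string") {
    return /<[^<>]+>/.test(value) ? [`${path}: ${value}`] : [];
  }
  if (Array.isArray(value)) {
    return value.flatMap((item, i) => findPlaceholders(item, `${path}[${i}]`));
  }
  if (value !== null && typeof value === "object") {
    return Object.entries(value).flatMap(([key, child]) => findPlaceholders(child, `${path}.${key}`));
  }
  return []; // numbers, booleans, null
}

// In practice: const config = JSON.parse(fs.readFileSync("server_config.json", "utf8"));
const config = {
  mcpServers: {
    terraform: {
      command: "/usr/local/bin/node", // example path, already replaced
      args: ["<absolute/path/to/mcpServer.js>"],
      env: { HASHICORP_API_KEY: "<your-hcp-terraform-api-key>" },
    },
  },
};

// Prints the two entries that still need real values.
for (const hit of findPlaceholders(config)) console.log(hit);
```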

With the MCP CLI, you can now chat with your MCP server using simple commands.

```bash
mcp-cli chat --server terraform
```

You can then enter queries like:

What workspaces do we have in our Infralovers organization?

The MCP CLI will process your request, call the appropriate MCP tool, and return the results directly in your terminal.

```text
╭─ ⚡ Streaming • 939 chunks • 19.4s • 16.0 words/s • 148 chars/s ─────────────────────────────────────────────────────────────────────────╮
│ Here are your Terraform workspaces in the organization "ACME":
│
│ 1 Workspace Name: one
│    • ID: ws-fsgfsdg
│    • Terraform Version: 1.7.5
│    • Environment: default
│    • Locked: Yes
│    • Resource Count: 6
│ 2 Workspace Name: two
│    • ID: ws-dfsgdfsg
│    • Terraform Version: latest
│    • Environment: default
│    • Locked: No
│    • Resource Count: 9
│ 3 Workspace Name: three
│    • ID: ws-fdsgdfsg
│    • Terraform Version: latest
│    • Environment: default
│    • Locked: No
│    • Resource Count: 3
...
```
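The summary above is presumably the model's rendering of the raw API response: HCP Terraform returns workspaces in JSON:API format, with each workspace's fields under attributes. A minimal sketch of producing a similar summary yourself, assuming the workspace attribute names name, terraform-version, locked, and resource-count; `summarizeWorkspaces` is hypothetical, the sample data is invented, and pagination is ignored.

```javascript
// Minimal sketch: turn an HCP Terraform workspaces response (JSON:API format)
// into short summary lines. The sample data is invented; pagination
// (following links.next) is deliberately ignored.
function summarizeWorkspaces(apiResponse) {
  return apiResponse.data.map((ws, index) => {
    const a = ws.attributes;
    return `${index + 1} ${a.name} (${ws.id}): Terraform ${a["terraform-version"]}, ` +
      `locked: ${a.locked ? "yes" : "no"}, resources: ${a["resource-count"]}`;
  });
}

const sample = {
  data: [
    { id: "ws-example1", type: "workspaces",
      attributes: { name: "one", "terraform-version": "1.7.5", locked: true, "resource-count": 6 } },
    { id: "ws-example2", type: "workspaces",
      attributes: { name: "two", "terraform-version": "latest", locked: false, "resource-count": 9 } },
  ],
};

summarizeWorkspaces(sample).forEach((line) => console.log(line));
// 1 one (ws-example1): Terraform 1.7.5, locked: yes, resources: 6
// 2 two (ws-example2): Terraform latest, locked: no, resources: 9
```

A deterministic formatter like this is useful when you need exact, repeatable output (for reports or scripts) rather than the model's free-form summary.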

Postman's MCP server capabilities represent a significant shift in how we think about API development, testing, and integration. By bridging the gap between static API documentation and dynamic, AI-accessible tools, MCP servers make existing APIs directly usable by AI assistants.

As our HCP Terraform example shows, the practical applications are broad: from infrastructure management to service monitoring, and from automated testing to intelligent debugging.

At Infralovers, we offer a new training course, AI Essentials for Engineers, that enables you to integrate AI into your technical workflows by gaining hands-on experience with large language models (LLMs), retrieval-augmented generation (RAG), and AI agents. You will build a solid foundation in AI concepts, ethical and regulatory considerations (including the EU AI Act), and practical skills with tools such as LangChain, OpenAI, Ollama, and LightRAG.

The course also offers an in-depth, hands-on treatment of the Model Context Protocol (MCP) and shows you how to connect AI to real-world APIs and infrastructure. Join developers around the world who are advancing their careers with practical, AI-driven expertise.

Are you interested in our courses, or do you simply have a question? Contact us at any time; we will do our best to answer all your questions.
