
In the rapidly evolving world of artificial intelligence, the challenge isn't what AI can do, but how seamlessly it can be integrated into our daily lives. Since the advent of generative AI in early 2023, we've seen numerous AI applications designed to tackle various tasks. However, these innovations often face an integration problem rather than a capabilities problem. Prompts are the inputs or queries provided to an AI model to generate responses or outputs. They serve as the starting point for the AI's creative process, guiding it to produce relevant and contextually appropriate content. It's not easy to discover new and useful prompts and to manage different versions of the ones we like. This is where Fabric comes in.

Fabric is an open-source framework designed to address the integration challenge by enabling everyone to apply AI granularly to everyday challenges. The philosophy behind Fabric is that AI is a magnifier of human creativity. By breaking problems into individual components and applying AI to each piece, Fabric empowers users to solve complex issues in a structured and efficient manner. In this blog post, we will dive into Fabric's innovative capabilities and explore its diverse use cases.

Fabric was created to streamline the process of incorporating AI into various aspects of life and work. It does this through a system of modular components called Patterns, which are AI prompt templates formatted in Markdown for maximum readability and editability. This format ensures that both users and AI can easily understand and execute the instructions.

To get the most out of Fabric, users can install the Fabric client, which provides a comprehensive suite of tools and commands for interacting with AI models.

Clone the repository and switch to its directory:

```bash
git clone https://github.com/danielmiessler/fabric.git
cd fabric
```

Install pipx (with Homebrew on macOS or apt on Debian/Ubuntu):

```bash
# macOS
brew install pipx

# Debian/Ubuntu
sudo apt install pipx
```

Install Ollama (Optional):

Ollama is a platform for running large language models (LLMs) on your local machine. If your use case requires local models, download Ollama and install the desired model from the available models. Be aware of each model's hardware requirements.

Navigate to the Fabric directory (if you are not already in it):

```bash
cd fabric
```

Install Fabric:

```bash
pipx install .
```

Reload your shell configuration, depending on whether you use Bash or Zsh:

```bash
# Bash
source ~/.bashrc

# Zsh
source ~/.zshrc
```

Set up Fabric:

Run the setup command and follow the prompts to enter the API keys for the required services.

```bash
fabric --setup
```

Ready to Use:

Fabric is now installed and configured, and we're ready to start using it! To list all available options, run:

```bash
fabric --help
```

Fabric provides several commands that streamline interaction with its models and enhance productivity. Here's a brief introduction to some of the most useful ones:

List Available Models:

To see which models are available locally or through our subscriptions, we use:

```bash
fabric --listmodels
```

This command lists all installed and subscribed models, helping us choose the most suitable one for our task.

List All Available Patterns:

Explore the range of patterns available within Fabric by using:

```bash
fabric --list
```

This command provides an overview of available patterns, which define specific tasks or actions we can execute with Fabric.

Switch Default Model:

Change the default model Fabric uses with:

```bash
fabric --changeDefaultModel <MODEL>
```

This command allows us to switch between different models seamlessly, adapting to different needs or preferences, without having to specify the model each time.

Update Patterns:

Keep patterns up to date for optimal performance by running:

```bash
fabric --update
```

This command ensures we have access to the latest improvements and additions in Fabric's pattern library.

Fabric includes flags that enhance command execution:

- -s: Stream the model's output directly.
- -p: Specify the Pattern to use.
- -sp: Combine both, streaming the output of the selected Pattern.

These flags provide flexibility in how we interact with Fabric, tailoring responses and actions to our specific requirements.

Fabric's command structure enables users to interact with AI efficiently through a command-line interface (CLI). By piping input into Fabric, users can trigger specific actions and execute various tasks seamlessly. The input for Fabric can be diverse and adaptable to different tasks. It can include text, URLs, file paths, or other command outputs. A Fabric command is structured as follows:

```bash
<INPUT> | fabric --model <MODEL> -p <PATTERN>
```
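Because every call follows this structure, Fabric also fits into ordinary shell scripts. The sketch below is an illustration under stated assumptions, not a Fabric feature: fabric_batch is a hypothetical helper, it assumes a default model has been set with --changeDefaultModel (so --model is omitted), and it treats every .txt file in one directory as an input, writing each result next to it as NAME.PATTERN.md.

```bash
# Hypothetical helper (not part of Fabric): apply one Pattern to every
# .txt file in a directory and store each result as NAME.PATTERN.md.
# Assumes `fabric` is on PATH and a default model has been configured.
fabric_batch() {
  local pattern="$1" dir="$2" f
  if [ -z "$pattern" ] || [ -z "$dir" ]; then
    echo "usage: fabric_batch PATTERN DIRECTORY" >&2
    return 1
  fi
  for f in "$dir"/*.txt; do
    [ -e "$f" ] || continue   # no .txt files: the glob stays unexpanded
    # Equivalent to: cat "$f" | fabric -p "$pattern"
    fabric -p "$pattern" < "$f" > "${f%.txt}.$pattern.md"
  done
}
```

For example, fabric_batch summarize ./feedback would summarise every .txt file in a (hypothetical) feedback directory, one Fabric call per file.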

When using Fabric, we can choose between local and remote AI models. Local models, such as those run with Ollama, incur no additional costs but require capable hardware. They can be downloaded with the command ollama pull <MODEL>; details about the available models are on the Ollama website. We also have to choose between different parameter counts and quantization levels, which affect both performance and resource usage. Remote models, such as OpenAI's GPT models or Anthropic's Claude, offer high performance and convenience but come with usage fees. If our local hardware isn't sufficient and models respond slowly, we can connect to these remote models with an API key and list the available models with the command fabric --listmodels.

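Patterns are the core of Fabric's functionality. Each one is designed to tackle a specific task, for example summarising content (summarize), extracting key ideas (extract_wisdom), writing an essay (write_essay) or improving a prompt (improve_prompt).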

Fabric Patterns are different from typical prompts as they are tested, highly structured and use Markdown to ensure clarity and order. This structure not only aids the creator in developing effective prompts but also makes it easier for the AI to follow instructions and provide concise answers.

For example, a Pattern can look like this:

```markdown
# IDENTITY and PURPOSE

You are an expert content summariser. You take content in and output a Markdown formatted summary using the format below.

Take a deep breath and think step by step about how to best accomplish this goal using the following steps.

# OUTPUT SECTIONS

- Combine all of your understanding of the content into a single, 20-word sentence in a section called ONE SENTENCE SUMMARY:.
- Output the 10 most important points of the content as a list with no more than 15 words per point into a section called MAIN POINTS:.
- Output a list of the 5 best takeaways from the content in a section called TAKEAWAYS:.

# OUTPUT INSTRUCTIONS

- Create the output using the formatting above.
- You only output human readable Markdown.
- Output numbered lists, not bullets.
- Do not output warnings or notes—just the requested sections.
- Do not repeat items in the output sections.
- Do not start items with the same opening words.

# INPUT:

INPUT:
```

Fabric simplifies AI interactions through its command-line interface (CLI), where input is piped into the tool to trigger specific actions. This approach enables seamless execution of various tasks, ranging from transcribing videos to querying information and chaining commands together. Let's explore some use-case examples:

Transcribing YouTube Videos:

Easily transcribe the content of any YouTube video:

```bash
yt --transcript <YOUTUBE-VIDEO-LINK> | fabric --model <MODEL> -sp summarize
```

This command summarises the key points of the video. yt is one of Fabric's integrated helper tools and fetches the transcript of a YouTube video. Other helpers include ts for transcribing audio and save for saving content to a file while keeping the output stream intact.

Asking Any Question:

Get instant answers to any question:

```bash
echo "Give me a list of ice cream flavors" | fabric -s -p ai
```

Here, the ai Pattern, a general-purpose question-answering Pattern, responds directly to the given input.

Stitching:

Chain multiple Fabric commands for complex tasks:

```bash
pbpaste | fabric -p summarize | fabric -s -p write_essay
```

Stitching combines several Fabric calls to handle complex tasks. In this example, pbpaste (macOS) outputs the current clipboard content, e.g. text copied from a website, and pipes it into the summarize Pattern. The output of this first Fabric call is then piped into a second one, which writes an essay with the write_essay Pattern.
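The same idea extends to any number of Patterns. As a minimal sketch, the hypothetical helper fabric_chain below (not part of Fabric) pipes its input through the given Patterns in order; it only uses the -p flag described above and relies on the default model.

```bash
# Hypothetical helper (not part of Fabric): pipe stdin through several
# Patterns in order, e.g. fabric_chain summarize write_essay.
fabric_chain() {
  if [ "$#" -eq 0 ]; then
    cat                      # no Patterns left: pass the result through
    return
  fi
  local pattern="$1"
  shift
  # Run the first Pattern and feed its output into the rest of the chain
  fabric -p "$pattern" | fabric_chain "$@"
}
```

With this helper, pbpaste | fabric_chain summarize write_essay behaves like the stitched command above, except that the final step is not streamed.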

```bash
yt --transcript "https://www.youtube.com/watch?v=UbDyjIIGaxQ" |
  fabric --model gpt-4-turbo -p extract_wisdom |
  fabric --model gpt-4-turbo -sp summarize
```

In this example, we transcribe Daniel Miessler's YouTube video about Fabric. The transcript is piped into the extract_wisdom Pattern, which extracts the main ideas, quotes, references and recommendations from the video. Its output is then piped into a second Fabric call that summarises the content.

This is what the output, formatted in Markdown, looks like:

# ONE SENTENCE SUMMARY:

Fabric, created by Daniel Miessler, is an open-source AI tool designed to enhance human capabilities by simplifying AI usage.

# MAIN POINTS:

1. Fabric is an open-source AI tool that augments human capabilities.

2. Users can interact with Fabric through command line, GUI, or voice commands.

3. The tool features "Extract Wisdom" for efficient text processing.

4. It supports integration with various AI models and local setups.

5. Fabric allows for the creation of custom prompts to address specific problems.

6. Designed for easy setup across multiple platforms, enhancing accessibility.

7. Community involvement is encouraged for developing new prompts and patterns.

8. Fabric integrates seamlessly with note-taking applications like Obsidian.

9. It offers patterns that mimic human note-taking and summarization processes.

10. Fabric's CLI-native design appeals to users who prefer command-line interfaces.

# TAKEAWAYS:

1. Fabric democratizes AI by being open-source and encouraging user contributions.

2. Reduces friction in AI usage, boosting productivity and problem-solving capabilities.

3. Customizable patterns allow for tailored AI interactions to meet individual needs.

4. Integrates well with text-based systems, fitting into modern digital workflows.

5. Its community-driven development model promotes continuous improvement and innovation.

Fabric even lets us create our own Patterns, using a predefined Pattern to do so! To create a custom Pattern, we go to ~/.config/fabric and create a new folder for our custom templates. Inside this folder, we create a sub-folder for the Pattern and, in it, a system.md file that will hold the Pattern's content.

```text
~/.config/fabric/MY_CUSTOM_PATTERNS/PATTERN_EXAMPLE/system.md
```

To create the actual prompt for the Pattern, we use the improve_prompt Pattern and specify a simple and rough version of a prompt. We’ll save the output to the created system.md file.

```bash
echo "You are an expert in understanding and digesting TED talks, identifying key points mentioned, funny stories, key quotes. You will identify themes, create discussion questions and identify stories." | fabric -sp improve_prompt
```

This is what the output could look like:

**You are an expert in analyzing TED talks. Your task is to:**

1. **Identify Key Points:** Extract and list the main ideas or arguments presented in the TED talk.

2. **Highlight Funny Stories:** Note any humorous anecdotes or stories shared during the talk.

3. **Capture Key Quotes:** Provide direct quotes that encapsulate significant insights or moments from the talk.

4. **Determine Themes:** Analyze and state the overarching themes of the talk.

5. **Create Discussion Questions:** Formulate thought-provoking questions based on the content of the talk that could be used to spark further discussion.

6. **Summarize Stories:** Briefly summarize any stories told during the talk, explaining their relevance to the main message.

**Please present your analysis in a structured format, using bullet points for each section.**
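The folder steps described above can be scripted as well. This is a minimal sketch with a hypothetical helper, new_pattern (not part of Fabric), that creates ROOT/FOLDER/NAME/system.md and writes its standard input into it. ROOT defaults to ~/.config/fabric, following the layout described above; whether fabric -p picks up Patterns from a custom folder may depend on the Fabric version, so it is worth checking where your installation reads Patterns from.

```bash
# Hypothetical helper (not part of Fabric): create
# ROOT/FOLDER/NAME/system.md and fill it from stdin.
# ROOT defaults to ~/.config/fabric (assumption based on the layout above).
new_pattern() {
  local folder="$1" name="$2" root="${3:-$HOME/.config/fabric}"
  if [ -z "$folder" ] || [ -z "$name" ]; then
    echo "usage: new_pattern FOLDER NAME [ROOT]" >&2
    return 1
  fi
  local dir="$root/$folder/$name"
  mkdir -p "$dir" || return 1
  cat > "$dir/system.md"     # e.g. the output of the improve_prompt Pattern
  echo "$dir/system.md"      # print the path of the new Pattern file
}
```

For example, appending | new_pattern MY_CUSTOM_PATTERNS TED_TALKS to the improve_prompt command above would save the improved prompt as the system.md of a new, hypothetically named TED_TALKS Pattern.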

In Fabric, a Context is additional, prepared information that can be applied to Patterns to give the model more background on a task, so that its responses are more relevant, more accurate and tailored to specific needs. This makes Fabric even more customisable. For example, we can tell the model to focus on certain aspects of a topic within a text and ignore the rest, or we can tell it what we're trying to achieve.

Custom contexts can be stored locally in:

```text
~/.config/fabric/context
```

Agents are modular components designed to execute specific tasks or interact with data. They handle tasks such as text summarisation, API integrations and data processing, which makes Fabric adaptable to a wide range of AI-related work. Agents are started from Fabric's CLI with the --agents flag and are built on PraisonAI, a multi-agent framework. They extend Fabric with focused functionality without requiring extensive changes to its core framework, and they work with OpenAI and Ollama models.

```bash
echo "Search for recent articles about the future of AI and write me a 500-word essay on the findings. Include the article references." | fabric --agents
```

This is what the output could look like. Fabric first generates the agents, here a Researcher and a Writer, each with its own task, and then runs them one after another:

Starting PraisonAI...

[DEBUG]: == Working Agent: Researcher

[INFO]: == Starting Task: Use web scraping tools to gather recent articles about the future of artificial intelligence from various scholarly sources and credible websites.

[DEBUG]: == [Researcher] Task output: To gather recent articles about the future of AI, you will need to follow these steps:

...

[DEBUG]: == Working Agent: Writer

[INFO]: == Starting Task: Write a 500-word essay based on the summarized article findings about the future of AI, including referenced article details.

[DEBUG]: == [Writer] Task output: The future of artificial intelligence (AI) stands as one of the most riveting and rapidly evolving fronts in the technological landscape, promising to redefine a myriad of sectors including healthcare, finance, transportation, and education. This essay draws upon a comprehensive review of recent scholarly articles and authoritative reports to delineate the trajectory and implications of AI advancements.

Recent scholarly articles from the Journal of Artificial Intelligence Research and databases like IEEE Xplore suggest that AI's future will be shaped by significant advancements in machine learning algorithms and increased computational power, enabling more complex problem-solving capabilities (Source: IEEE Xplore, 2023). For instance, deep learning models that mimic human brain functions are becoming more sophisticated, allowing for more intuitive AI responses.

Tech news websites like TechCrunch have highlighted innovative applications of AI in real-world scenarios such as autonomous vehicles and smart cities, indicating a shift towards more integrated and functional uses of AI technologies (Source: TechCrunch, 2023). These advancements suggest a future where AI not only automates tasks but also enhances human capabilities and decision-making processes.

AI-centric portals like arXiv offer insights into the ethical implications and governance challenges that accompany AI development. There is a growing discourse on the need for robust ethical frameworks and regulatory measures to ensure that AI technologies are used responsibly and do not perpetuate biases or infringe on privacy (Source: arXiv, 2023). This aspect of AI’s future is crucial for garnering public trust and facilitating sustainable integration into society.

Furthermore, the integration of AI in healthcare, as reported in databases like PubMed, is particularly promising, with AI systems being developed to diagnose diseases, customize patient treatment plans, and manage healthcare records more efficiently (Source: PubMed, 2023). However, this also raises significant concerns about data security and the need for stringent data protection measures.

The future of AI also hinges on the advancement of quantum computing, which could potentially revolutionize AI’s processing capabilities. As discussed in recent IEEE Xpore articles, quantum AI could lead to breakthroughs in fields such as cryptography and complex system simulation, offering unprecedented speed and accuracy (Source: IEEE Xplore, 2023).

...

A Mill in Fabric refers to the server component that makes various AI patterns available. Mills are responsible for hosting and managing these patterns, allowing users to access and execute them.

Looms are the client-side applications that interact with mills. They are responsible for sending user input to the mills and retrieving the processed output. Looms can be command-line tools, web interfaces, or any other application that communicates with the mill.

Mills and Looms can help businesses automate data analysis tasks. For instance, a company processing large volumes of customer feedback can use a mill (server) to host Patterns for sentiment analysis and summarisation. Employees access these Patterns through looms (client-side applications) and send data to the mill for analysis. This setup speeds up the generation of insights and supports quicker decision-making.

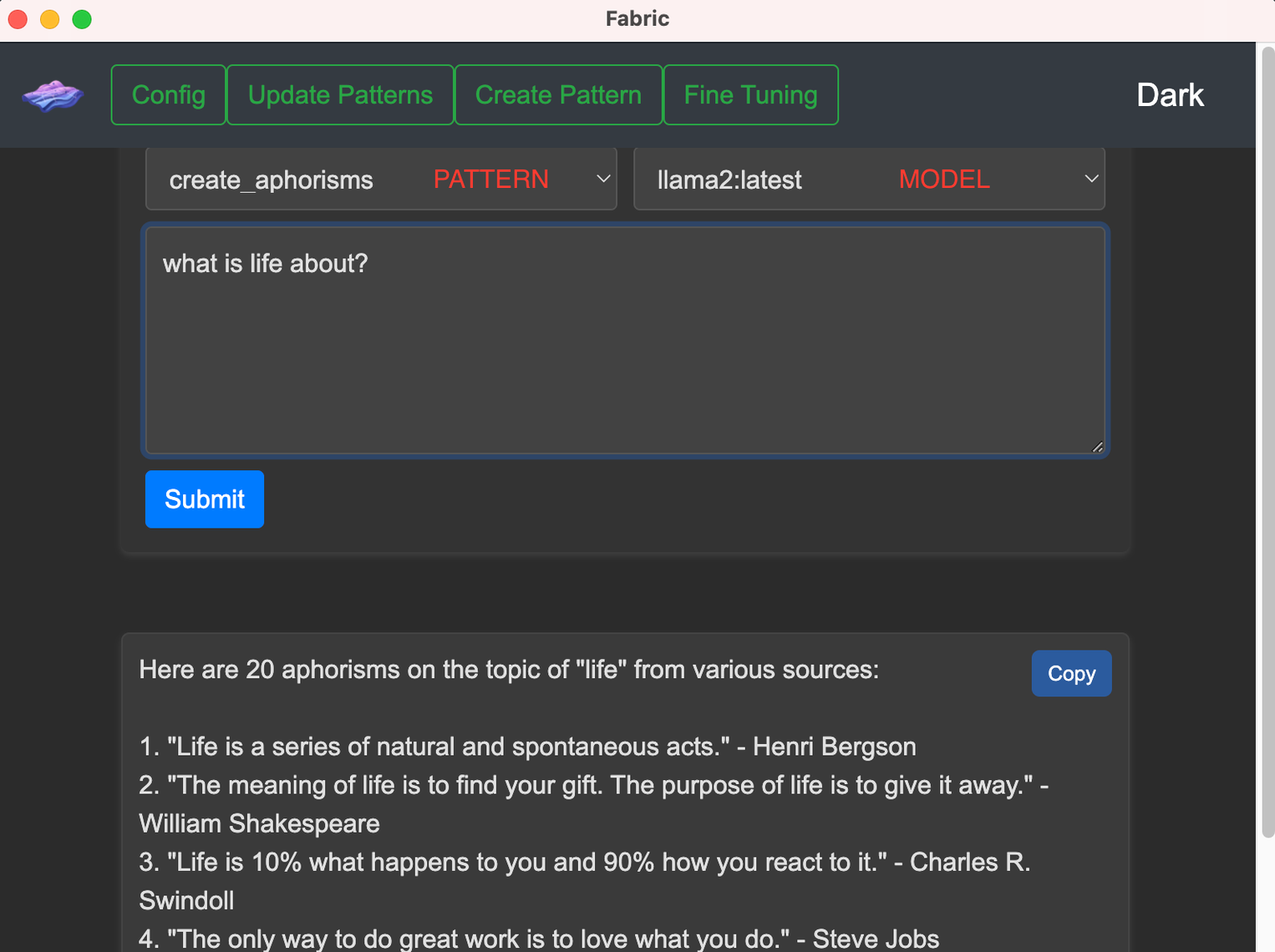

Fabric also offers a user-friendly UI that extends its versatility beyond the command line. Running fabric --gui starts the Fabric UI, provided Node.js and npm (Node Package Manager) are installed.

The graphical interface offers an intuitive way to configure models, execute commands and view outputs. This makes Fabric accessible to users who prefer a visual interface over the command line, whether they are novices or experienced users.

Fabric is more than just a collection of AI prompts; it's a robust framework designed to augment human capabilities by seamlessly integrating AI into everyday tasks. By reducing the friction associated with AI integration, Fabric helps users unlock the full potential of artificial intelligence, making it an invaluable tool for both personal and professional use. Whether we’re looking to streamline our workflow, enhance our productivity, or simply explore the possibilities of AI, Fabric provides the tools we need to succeed.

If you are interested in learning more about AI tools, check out our blog post about AI Coding Assistants. Stay tuned to our blog for more updates on the latest tools and technologies. At Infralovers, we are committed to keeping you at the cutting edge of the tech landscape.

Are you interested in our courses, or do you simply have a question that needs answering? You can contact us at any time! We will do our best to answer all your questions.
