
In our previous posts about HashiCorp Packer, we showed how to build images for AWS and Azure with Packer. In this article, we add something we mentioned in the final thoughts of our HCP Packer Template VM distribution article: how to promote changes to the Packer templates across different environments.

With the default configuration of an hcp_packer_registry block, all iterations end up in the latest channel. That is fine for testing, but we want more control over the distribution of our templates. We want one channel per environment, e.g. a staging channel for our staging environment and a production channel for our production environment. So we need to create multiple channels in the HCP Packer Registry and automate the distribution of our templates to these channels.

Our current Packer image definition looks like the following snippet:

```hcl
build {
  sources = ["source.amazon-ebs.eu-central-1", "source.amazon-ebs.eu-east-1", "source.amazon-ebs.us-east-1", "source.amazon-ebs.us-west-1"]

  hcp_packer_registry {
    # Derive the bucket name from the image name, replacing dots and underscores with dashes
    bucket_name = replace(replace(var.image_name, ".", "-"), "_", "-")
    description = "HCP Packer Registry: ${var.image_name}-${var.ansible_play}-${var.ci_commit_ref}-${var.ci_commit_sha}"

    # Labels attached to the bucket as a whole
    bucket_labels = {
      "owner"         = "infralovers",
      "name"          = "${var.image_name}",
      "play"          = "${var.ansible_play}",
      "ci_commit_ref" = "${var.ci_commit_ref}",
      "ci_commit_sha" = "${var.ci_commit_sha}"
    }

    # Labels attached to each build of an iteration
    build_labels = {
      "owner"         = "infralovers",
      "build-time"    = "${var.timestamp}",
      "name"          = "${var.image_name}",
      "play"          = "${var.ansible_play}",
      "ci_commit_ref" = "${var.ci_commit_ref}",
      "ci_commit_sha" = "${var.ci_commit_sha}"
    }
  }

  # Wait until cloud-init has finished before provisioning
  provisioner "shell" {
    inline = ["while [ ! -f /var/lib/cloud/instance/boot-finished ]; do echo 'Waiting for cloud-init...'; sleep 1; done"]
  }

  # Run the setup script via sudo, preserving the environment (-E)
  provisioner "shell" {
    execute_command = "echo 'packer' | {{ .Vars }} sudo -S -E bash '{{ .Path }}'"
    script          = "packer/scripts/setup.sh"
  }

  # Common playbook; Galaxy requirements are installed from ansible/requirements.yaml
  provisioner "ansible" {
    galaxy_file   = "ansible/requirements.yaml"
    playbook_file = "ansible/common.yml"
    use_proxy     = false
    groups        = ["common", var.ansible_play]
  }

  # Image-specific playbook, selected by var.ansible_play
  provisioner "ansible" {
    playbook_file = "ansible/${var.ansible_play}.yml"
    use_proxy     = false
    groups        = ["common", var.ansible_play]
  }

  # Run the cleanup script via sudo
  provisioner "shell" {
    execute_command = "echo 'packer' | {{ .Vars }} sudo -S -E bash '{{ .Path }}'"
    script          = "packer/scripts/cleanup.sh"
  }

  # Scan the image with Mondoo cnspec; with on_failure = "continue",
  # a failed scan does not abort the build
  provisioner "cnspec" {
    on_failure      = "continue"
    score_threshold = 85
    asset_name      = "${var.image_name}${var.image_tag}"
    sudo {
      active = true
    }
    annotations = {
      Source_AMI    = "{{ .SourceAMI }}"
      Creation_Date = "{{ .SourceAMICreationDate }}"
    }
  }
}
```

In this definition, we switched from the ansible-local provisioner to the ansible provisioner; we will explain what prompted this change in a separate article.

We also use cnspec from our friends at Mondoo to check the security of our images. It is a great tool for this job, and we will cover it in a separate article as well.

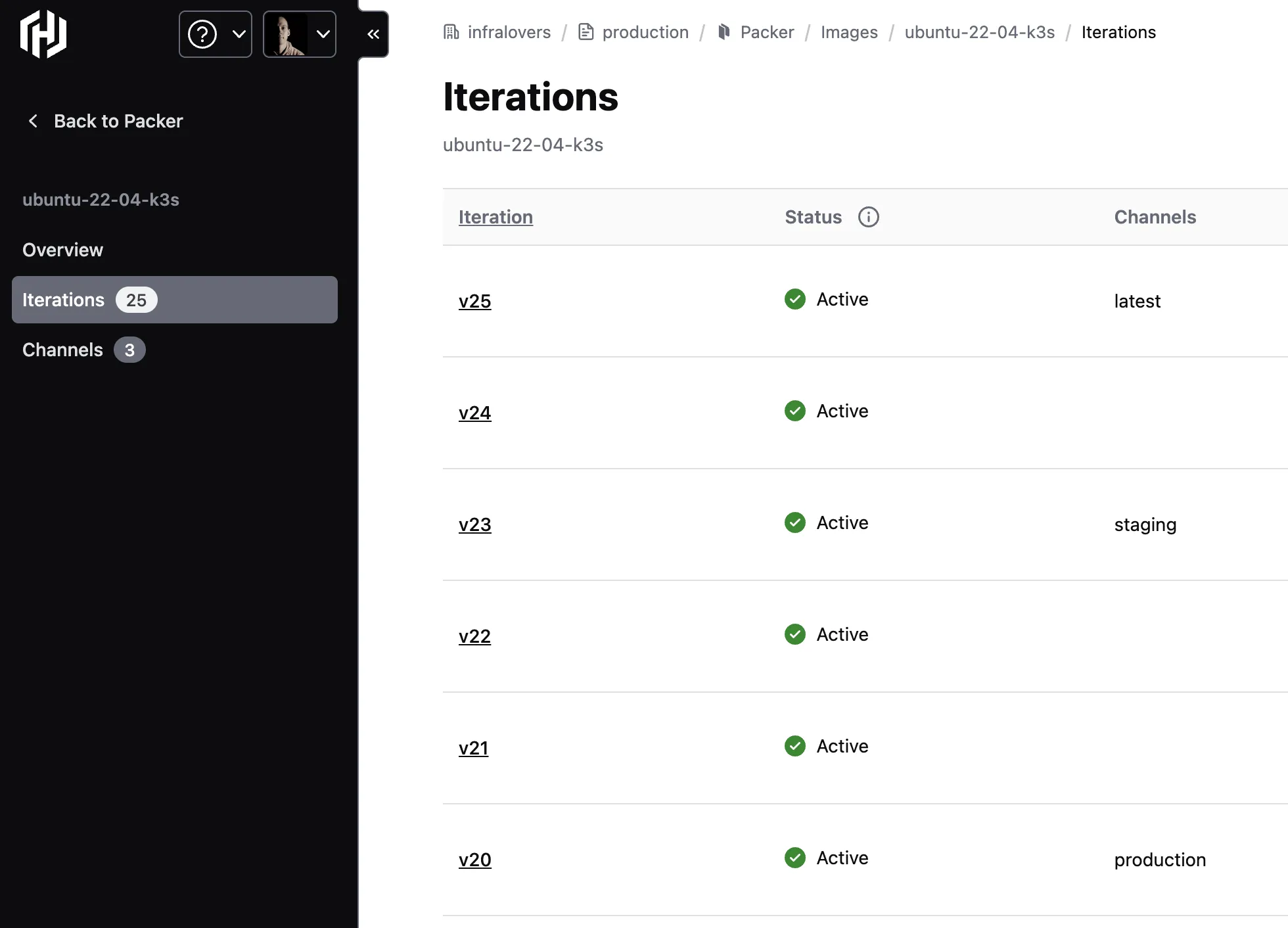

The first build creates the initial iteration, and every following build creates a new iteration of the image. We can see the iterations in the HCP Packer Registry:

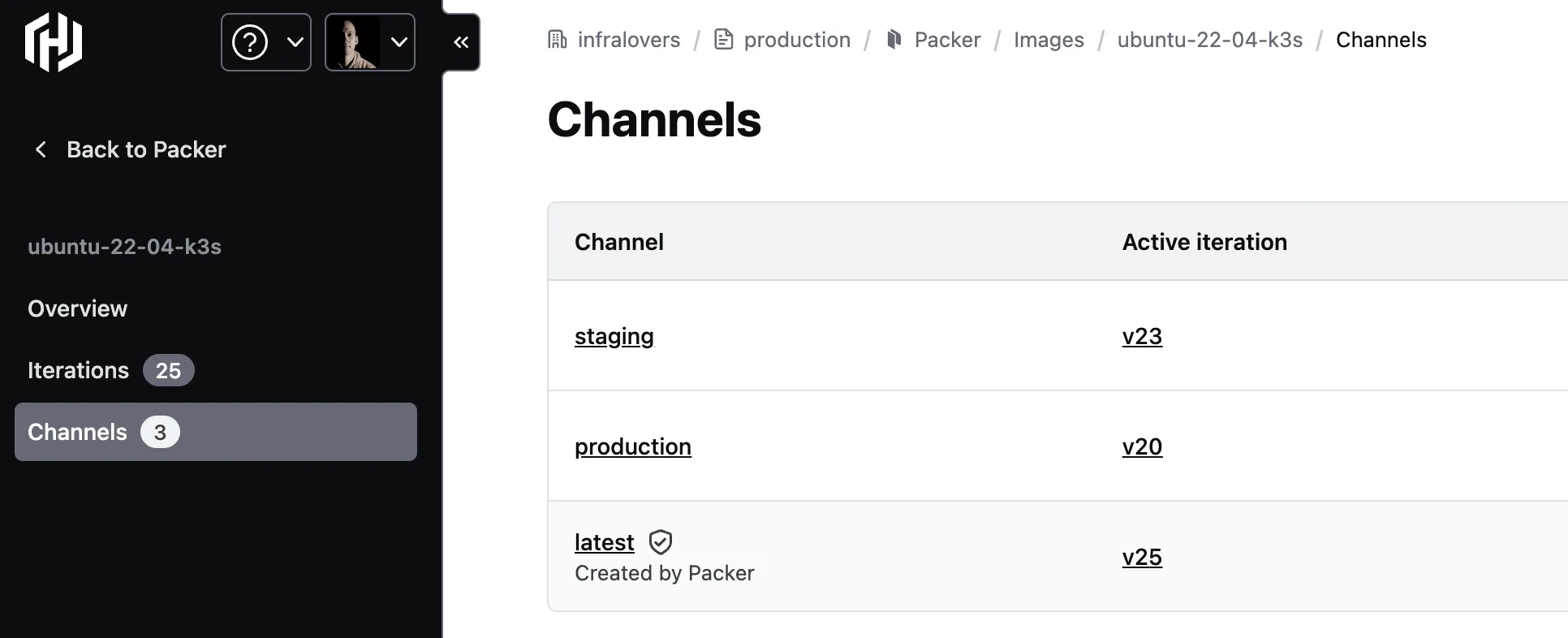

Right now, our Packer definition places every iteration of the template in the latest channel. To distribute templates in a controlled way, we create a dedicated channel in the HCP Packer Registry for each environment. We use the following Terraform snippet to create the production channel and assign an iteration to it:

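```hcl
# Name of the HCP Packer bucket (image) to promote
variable "hcp_image_name" {
  type = string
}

# Channel to promote into
variable "hcp_channel" {
  type    = string
  default = "production"
}

# Channel to take the iteration from
variable "hcp_channel_source" {
  type    = string
  default = "latest"
}

# Look up the iteration currently assigned to the source channel
data "hcp_packer_iteration" "latest" {
  bucket_name = var.hcp_image_name
  channel     = var.hcp_channel_source
}

# The target channel itself
resource "hcp_packer_channel" "production" {
  name        = var.hcp_channel
  bucket_name = var.hcp_image_name
}

# Point the target channel at the source channel's iteration.
# Referencing the channel resource ensures the channel is created first.
resource "hcp_packer_channel_assignment" "production" {
  bucket_name  = hcp_packer_channel.production.bucket_name
  channel_name = hcp_packer_channel.production.name

  iteration_version = data.hcp_packer_iteration.latest.incremental_version
}
```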

Because we run the following code in a pipeline for many different images, we first need to import the current state of the HCP Packer Registry with terraform import. This lets Terraform manage the existing objects afterwards. Since we want to stay within the HashiCorp toolset, we use Terraform for these changes. We assume that the variable IMAGE_NAME is set to the name of the image (the HCP Packer bucket) we want to promote:

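```shell
# Import the existing production channel into the Terraform state
terraform import -var "hcp_image_name=${IMAGE_NAME}" hcp_packer_channel.production "${IMAGE_NAME}:production"

# Import the existing channel assignment into the Terraform state
terraform import -var "hcp_image_name=${IMAGE_NAME}" hcp_packer_channel_assignment.production "${IMAGE_NAME}:production"

# Assign the iteration from the source channel (latest by default) to production
terraform apply -auto-approve -var "hcp_image_name=${IMAGE_NAME}"
```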

With the variable substituted, the actual commands look like this:

```shell
terraform import -var "hcp_image_name=ubuntu-22-04-docker" hcp_packer_channel.production "ubuntu-22-04-docker:production"

terraform import -var "hcp_image_name=ubuntu-22-04-docker" hcp_packer_channel_assignment.production "ubuntu-22-04-docker:production"

terraform apply -auto-approve -var "hcp_image_name=ubuntu-22-04-docker"
```

The two import commands bring the existing production channel and its channel assignment into the Terraform state. The final apply then assigns the iteration that is currently in the source channel (latest by default) to the production channel.
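As an alternative sketch, assuming Terraform 1.6 or later (Terraform 1.5 accepts only a literal string as the import id), the two CLI imports could be written as import blocks next to the configuration. The IDs keep the bucket_name:channel_name format of the commands above but read the channel from var.hcp_channel instead of hard-coding production. Import blocks are idempotent, so a pipeline could rely on a single terraform apply; as with the CLI commands, the objects must already exist in the registry for the import to succeed.

```hcl
# Assumes Terraform >= 1.6, where import ids may reference variables.
# Import the existing target channel on the next plan/apply.
import {
  to = hcp_packer_channel.production
  id = "${var.hcp_image_name}:${var.hcp_channel}"
}

# Import the existing assignment of that channel as well.
import {
  to = hcp_packer_channel_assignment.production
  id = "${var.hcp_image_name}:${var.hcp_channel}"
}
```

Once both objects are in the state, later plans skip these imports.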

Our Terraform configuration creates the production channel in the HCP Packer Registry and assigns the iteration from the latest channel to it. We can use the same configuration for a staging channel as well; we only need to change the channel name (hcp_channel) and the source channel (hcp_channel_source).
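For illustration, a variable file for a staging promotion might contain only those two values. The file name staging.tfvars is hypothetical, and this is a sketch rather than our exact pipeline setup:

```hcl
# staging.tfvars (hypothetical file name)
# Promote whatever iteration is in "latest" into the "staging" channel.
hcp_channel        = "staging"
hcp_channel_source = "latest"
```

It would be applied with `terraform apply -auto-approve -var-file=staging.tfvars -var "hcp_image_name=${IMAGE_NAME}"`. A production file could then use `hcp_channel_source = "staging"`, so that only iterations that passed staging reach production. Because the resource addresses remain hcp_packer_channel.production and hcp_packer_channel_assignment.production, each channel should use its own Terraform state (for example a separate workspace), and the import IDs need the matching channel name, e.g. `"${IMAGE_NAME}:staging"`.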

With this setup, we can build a release pipeline in which the latest channel serves as the development stage and the production channel holds production-ready images. In between, we can add any other channel we need, e.g. staging or qa, which gives us a lot of flexibility to shape the release pipeline to our needs.
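To show how an environment could consume a channel, here is a minimal sketch for the production environment using the HCP provider's hcp_packer_iteration and hcp_packer_image data sources. The bucket name, the region eu-central-1, and the aws_instance arguments are assumptions for illustration; more recent HCP provider releases offer hcp_packer_version and hcp_packer_artifact as replacements for these data sources.

```hcl
# Resolve the iteration currently assigned to the "production" channel.
data "hcp_packer_iteration" "ubuntu_docker" {
  bucket_name = "ubuntu-22-04-docker"
  channel     = "production"
}

# Look up the AMI that this iteration built for one specific region.
data "hcp_packer_image" "ubuntu_docker_eu_central_1" {
  bucket_name    = "ubuntu-22-04-docker"
  iteration_id   = data.hcp_packer_iteration.ubuntu_docker.ulid
  cloud_provider = "aws"
  region         = "eu-central-1"
}

# Hypothetical consumer: an EC2 instance booted from the promoted AMI.
resource "aws_instance" "app" {
  ami           = data.hcp_packer_image.ubuntu_docker_eu_central_1.cloud_image_id
  instance_type = "t3.micro"
}
```

A staging environment would use the same code with `channel = "staging"`, so promoting an iteration changes the AMI on that environment's next plan without editing its code.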

We use this setup in our CI pipelines to build images and distribute them to different environments, depending on the Merge Request and its target branch. When a Merge Request targets main, the pipeline promotes the related image to the production channel.

Are you interested in our courses, or do you simply have a question that needs answering? You can contact us at any time! We will do our best to answer all your questions.
