
In a joint effort, Jannis Rake-Revelant, Jürgen Brüder, and I (Edmund Haselwanter) had a look at several of what we call "OpenStack Lifecycle Management tools".

This time Jannis Rake-Revelant did most of the work, so thanks for sharing your findings :-)

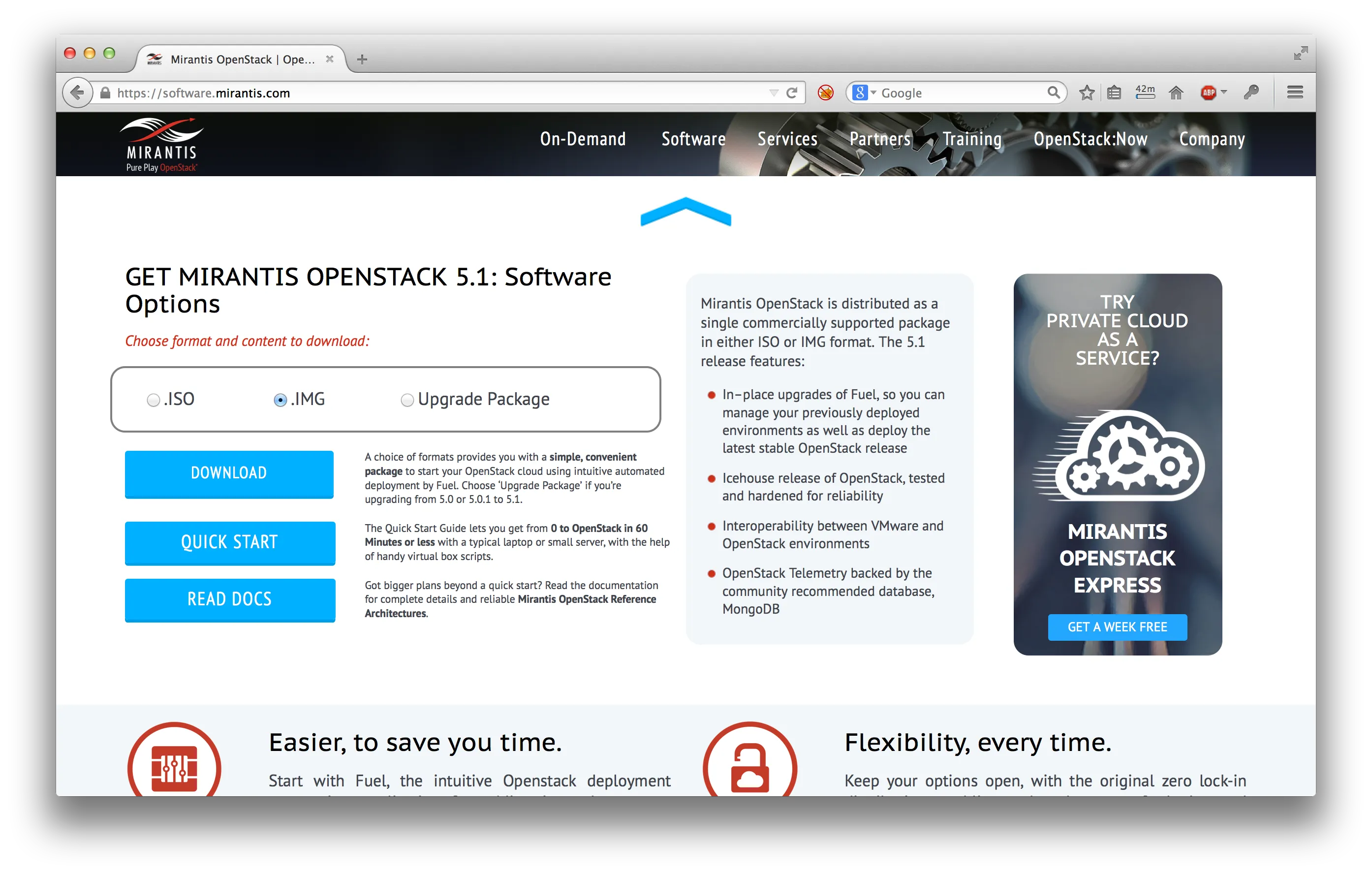

The cloud services company Mirantis offers a so-called "Pure Play OpenStack" distribution named "Mirantis OpenStack". It is deployed using an automation system called "Mirantis Fuel" and can be obtained at https://software.mirantis.com/.

The version deployed in this document is 5.1. It is based on the Icehouse release cycle of OpenStack and uses CentOS 6.5 as the host operating system (an option to deploy on Ubuntu is also offered but has not been tested yet).

Existing documentation for (virtual) deployments seems to be sparse. This document is based on the Mirantis documentation for a manual deployment on VirtualBox and is adapted to VMware Fusion.

The minimum setup of the distribution consists of three VMs: one admin node, one controller and one compute node. For a more realistic deployment we will work with two compute nodes instead of one, which gives four VMs in total.

The following specifications are suggested for a testable system:

| VM         | vCPU | RAM    | HDD      | NICs |
|------------|------|--------|----------|------|
| Admin      | 1    | 1 GB   | 50 GB    | 1    |
| Controller | 1    | 2 GB   | 30 GB    | 2    |
| Compute 1  | 1    | 2 GB   | 30 GB    | 2    |
| Compute 2  | 1    | 2 GB   | 30 GB    | 2    |
|            |      |        |          |      |
| _Total_    | _4_  | _7 GB_ | _140 GB_ | _7_  |

The total HDD space is a theoretical upper bound, since we will be working with virtual disks that only expand as needed. With 7 GB of RAM assigned to the VMs, we recommend a host system with 12-16 GB of RAM.
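As a quick sanity check of the sizing, the per-VM rows of the table can be summed in a few lines of shell. This is only a sketch: the `specs` variable and the `vm_totals` function are names made up for this example, and the numbers are copied from the table.

```shell
#!/bin/sh
# Per-VM specs copied from the table: name vCPU RAM_GB HDD_GB NICs
specs="Admin 1 1 50 1
Controller 1 2 30 2
Compute1 1 2 30 2
Compute2 1 2 30 2"

# Sum each numeric column with awk and print a single line of totals.
vm_totals() {
    printf '%s\n' "$specs" | awk '
        { cpu += $2; ram += $3; hdd += $4; nic += $5 }
        END { printf "vCPU=%d RAM=%dGB HDD=%dGB NICs=%d\n", cpu, ram, hdd, nic }'
}

vm_totals
```

The result (4 vCPUs, 7 GB RAM, 140 GB HDD, 7 NICs) is what the host has to provide on top of its own needs, which is where the 12-16 GB recommendation comes from.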

The OpenStack nodes need at least two virtual NICs per VM, since Mirantis OpenStack works with at least two untagged networks (admin and public). The network setup is detailed later on.

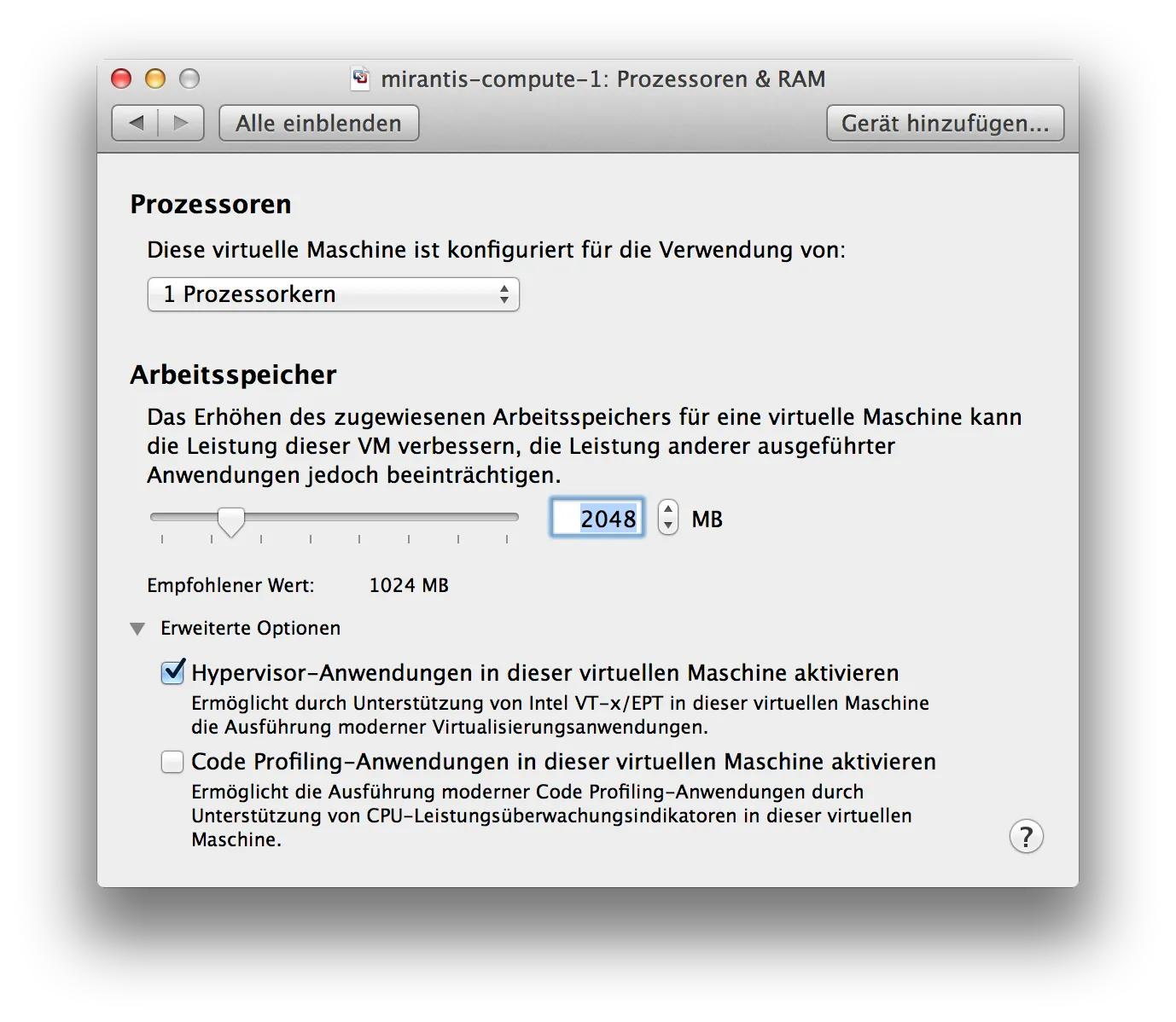

It is also necessary to use a virtualization solution (in our case VMware Fusion 5.05) and a host system that allow nested virtualization (Intel VT-x), and to enable this setting explicitly.
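Besides the GUI checkbox, the nested virtualization setting ends up in the VM's `.vmx` file as `vhv.enable = "TRUE"`. The following sketch checks a `.vmx` file for that entry. The function name `has_nested_virt` and the example path are hypothetical; adjust the path to wherever your VM bundle lives.

```shell
#!/bin/sh
# Hypothetical helper: succeeds (exit 0) if the given .vmx file
# contains the line vhv.enable = "TRUE" (case-insensitive).
has_nested_virt() {
    grep -Eiq '^[[:space:]]*vhv\.enable[[:space:]]*=[[:space:]]*"TRUE"' "$1"
}

# Example usage (the path is an assumption, not taken from this setup):
#   has_nested_virt "$HOME/Documents/Virtual Machines.localized/fuel-admin.vmwarevm/fuel-admin.vmx" \
#       && echo "nested virtualization enabled" \
#       || echo "nested virtualization NOT enabled"
```

Running this against every VM before the first boot is a cheap way to catch a forgotten checkbox.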

The admin node is needed to bootstrap the OpenStack installation. An ISO (2.6 GB) for installation can be obtained from the Mirantis software page mentioned above:

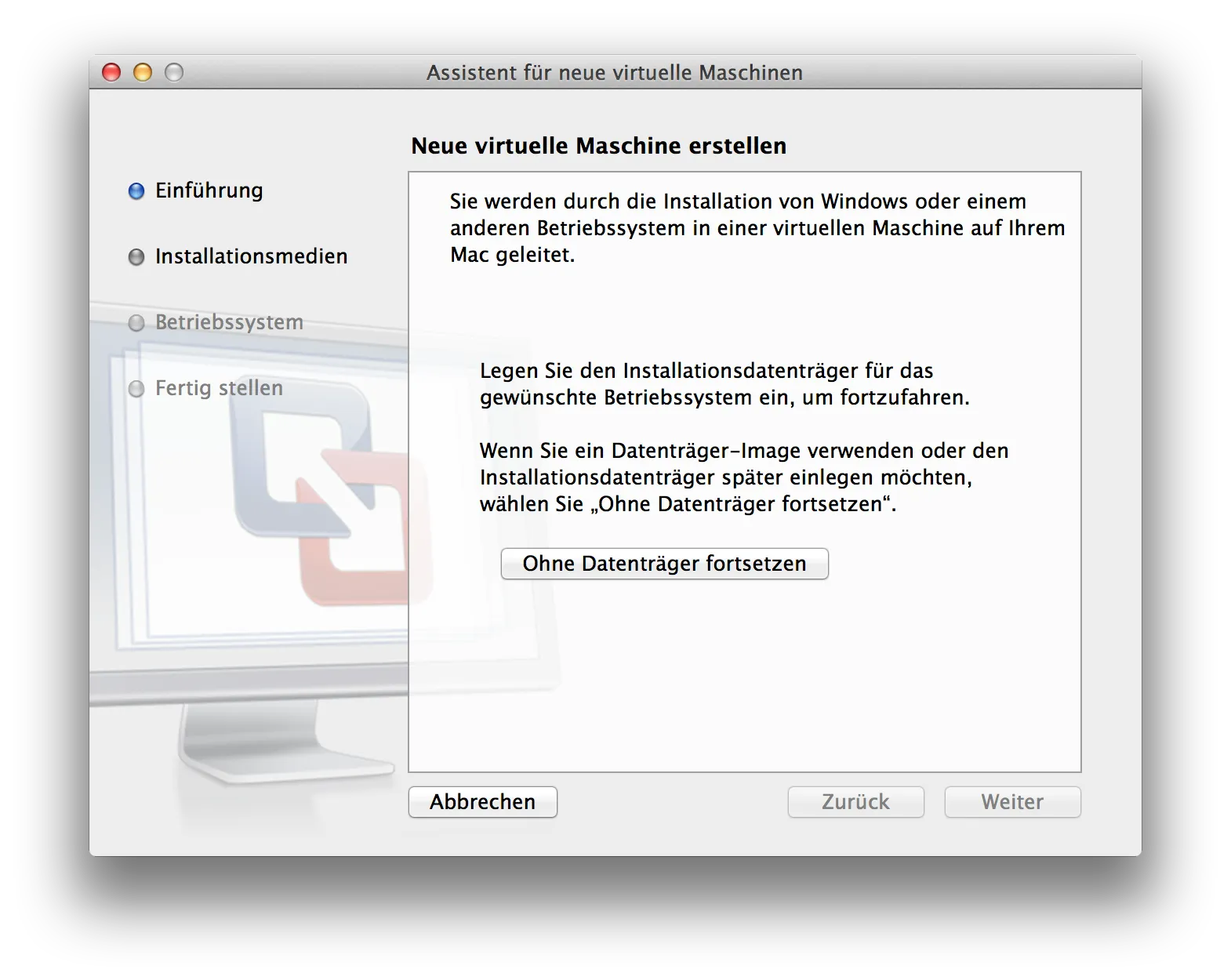

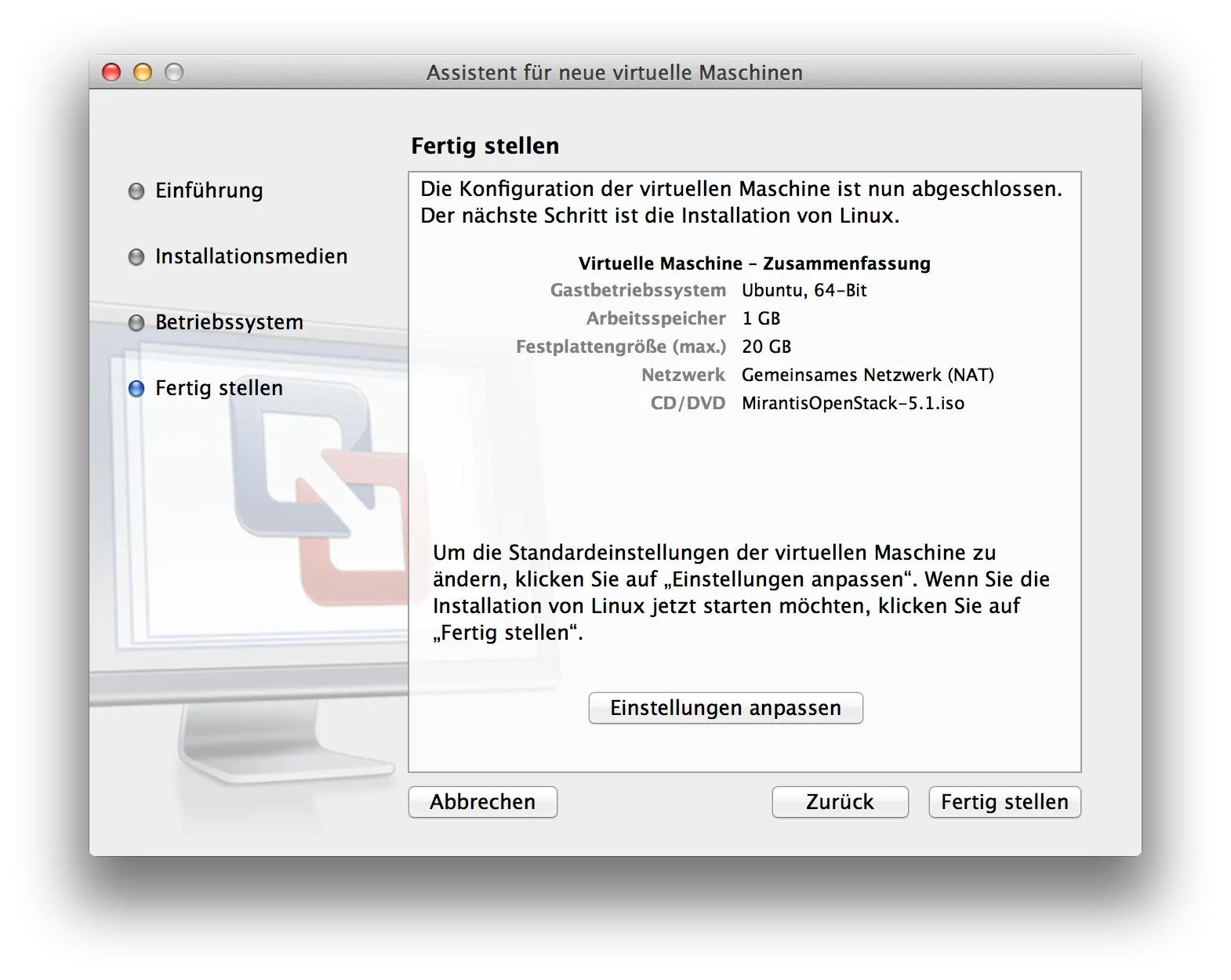

Next we need to prepare the VM using the VMware Fusion assistant:

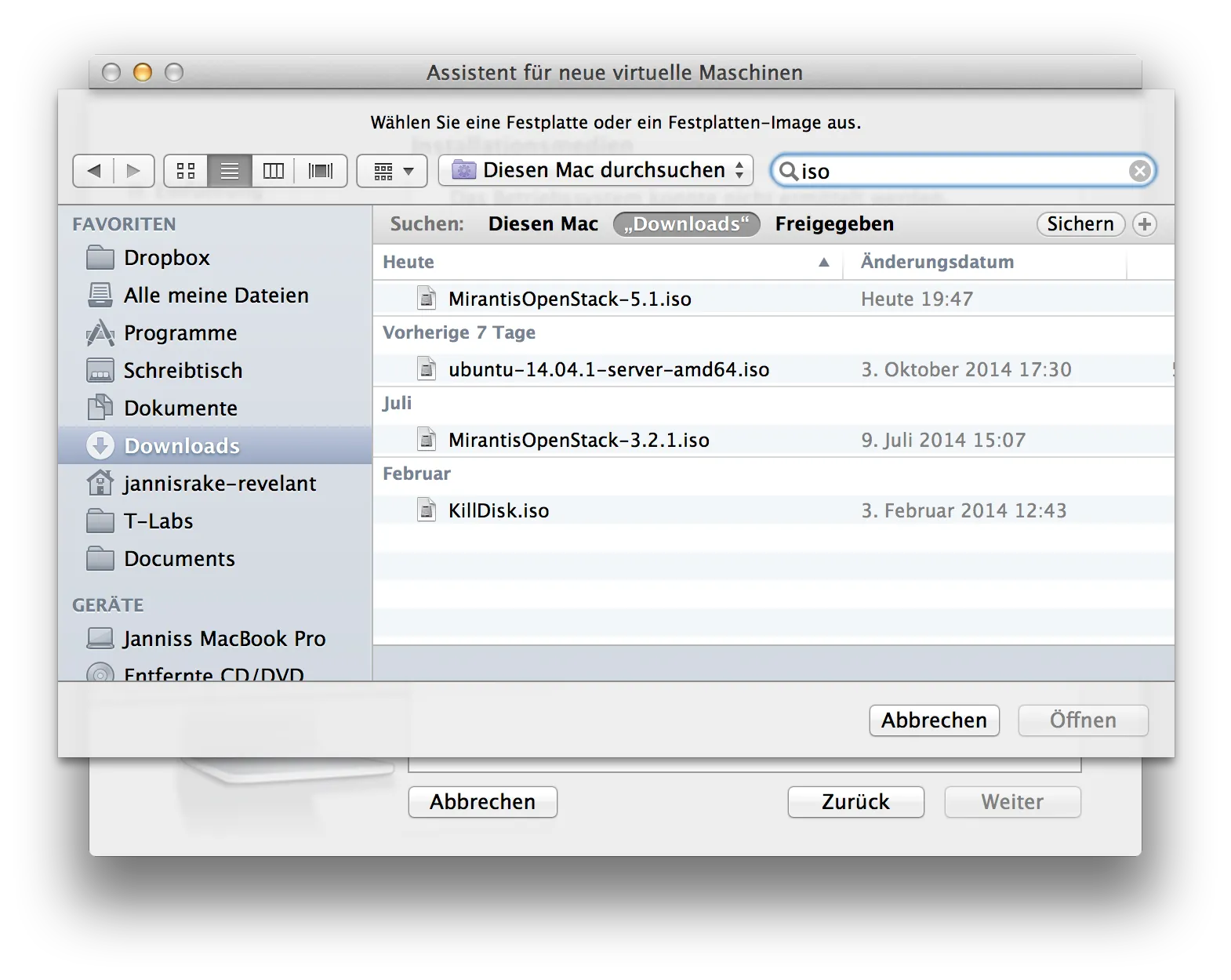

We provide the ISO for installation:

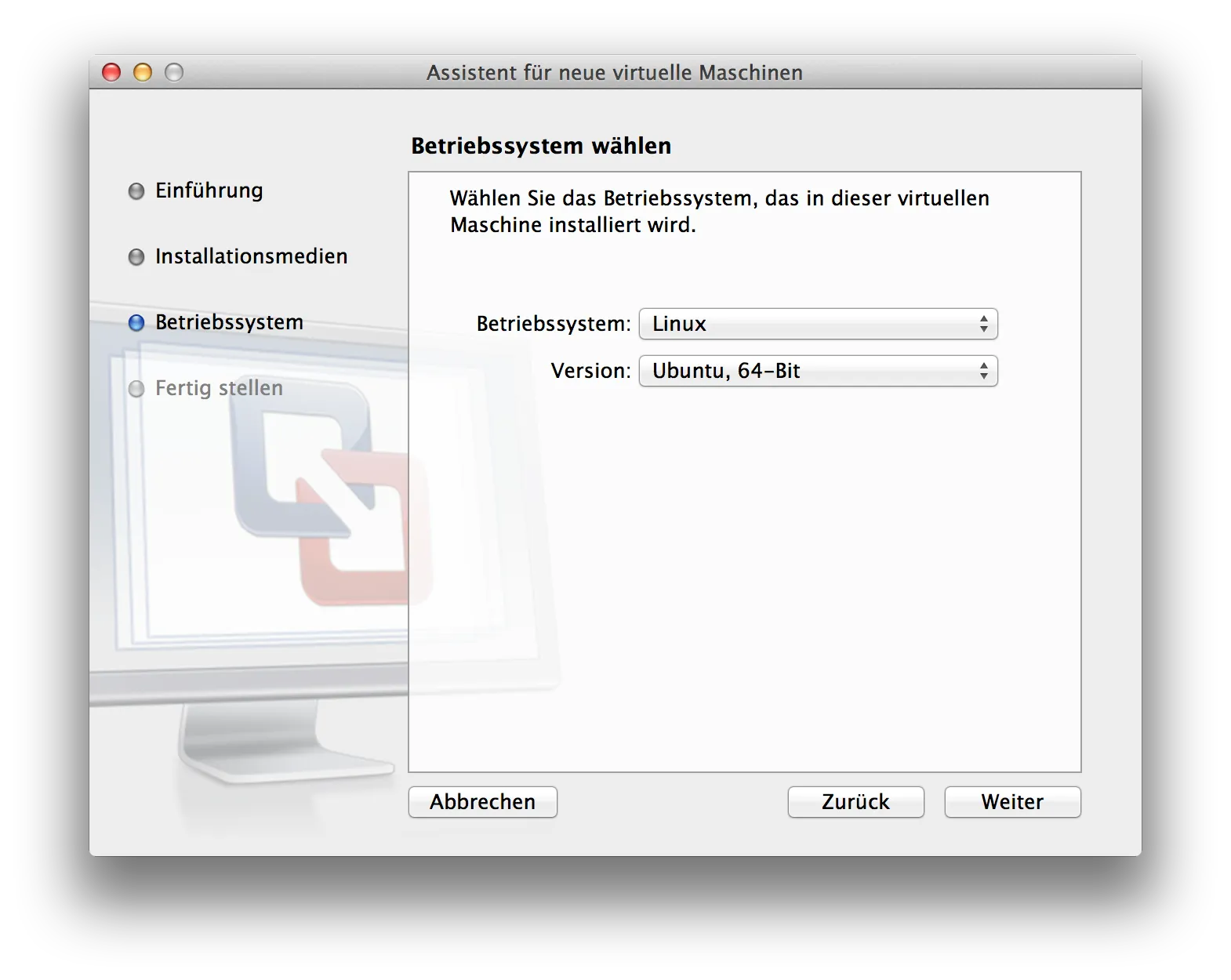

Choose CentOS Linux in the 64-bit option (important!):

The default settings for the VM need to be adjusted:

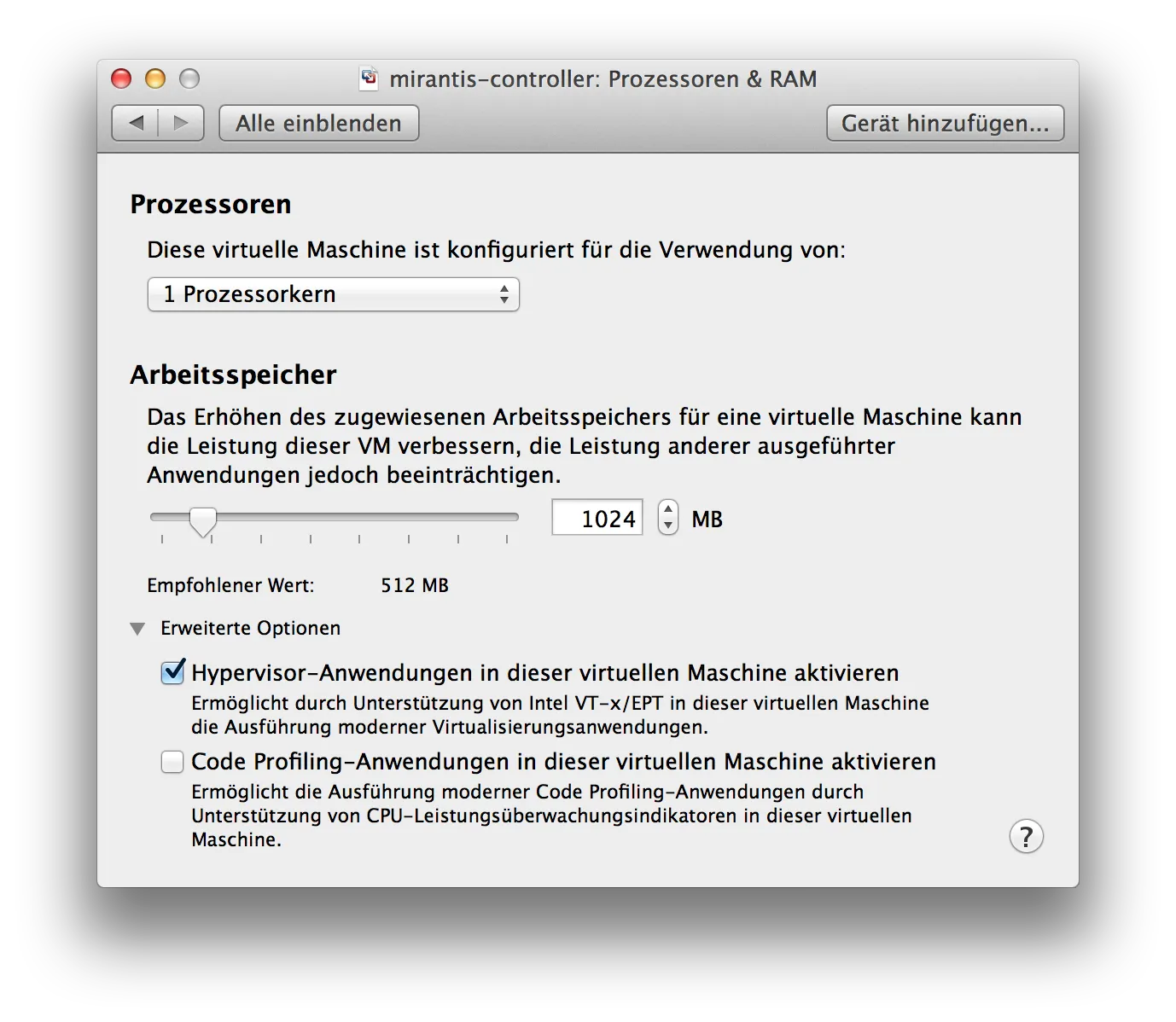

It should not matter in theory, but since we do not know whether Fuel uses nested virtualization before bootstrapping, we turn it on:

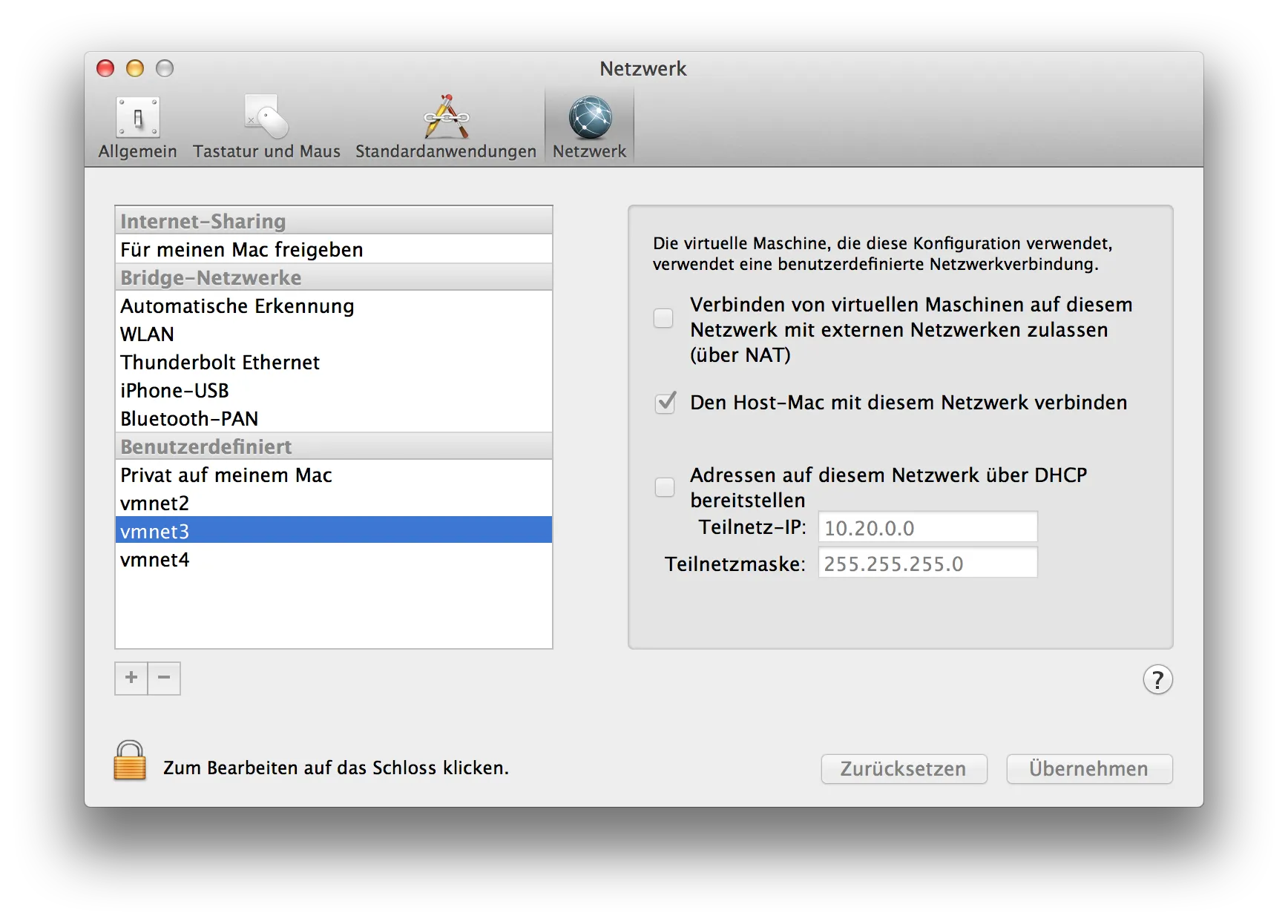

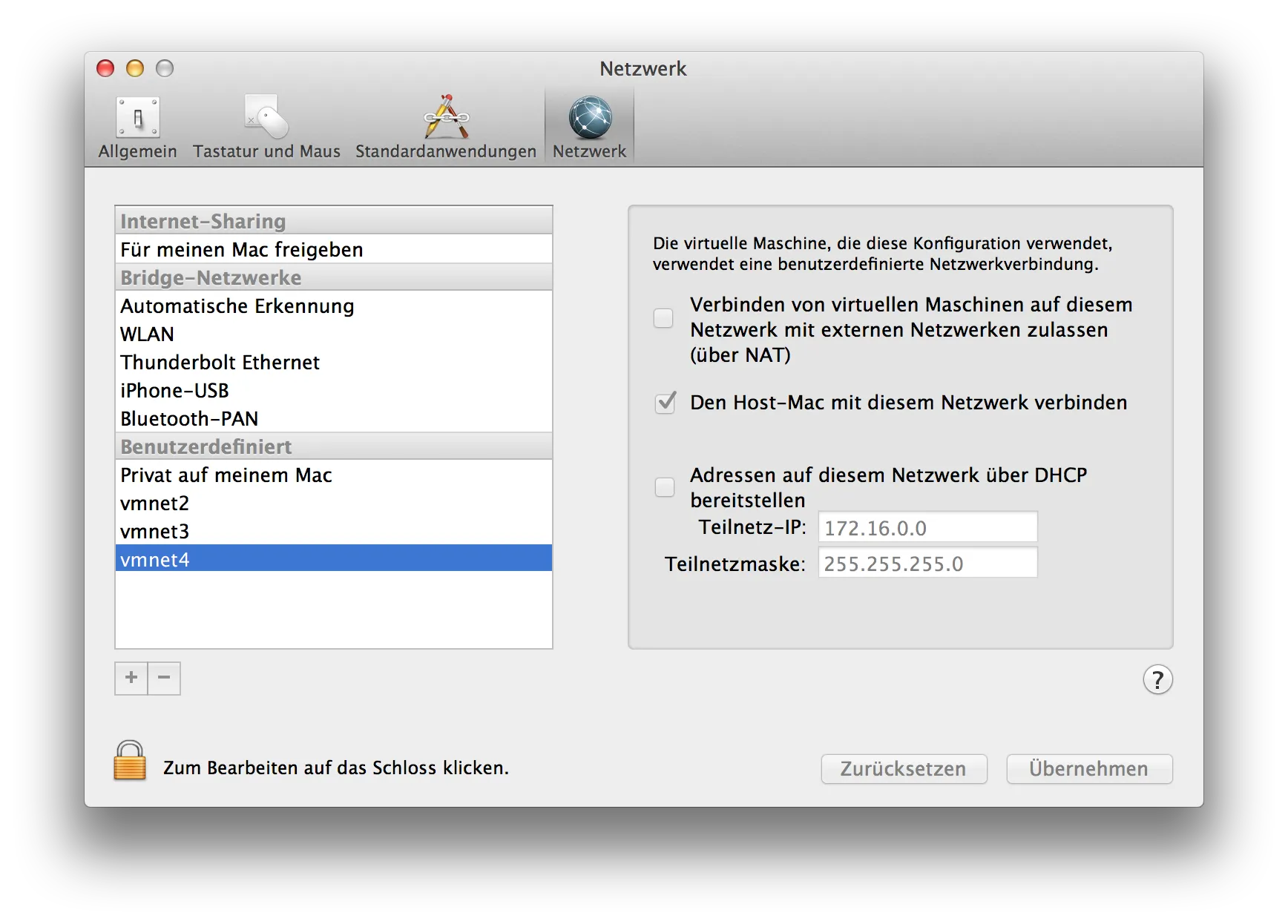

IMPORTANT: The default (unattended) installation of Fuel from the ISO uses a static IP (10.20.0.2), so we need to create a custom network in the general (not VM) settings of VMware Fusion:

In our case it is vmnet3 with DHCP disabled. Although no DHCP is needed, the IP range and mask must be set correctly (in our case 10.20.0.0/24) so that the Fuel admin console is reachable from our local browser.
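The reason the range matters is that the host can only reach Fuel if the static address 10.20.0.2 falls inside the network configured for vmnet3. A small sketch of that membership check follows; `in_cidr` is a helper name invented for this example and it handles IPv4 only.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if IPv4 address $1 lies inside CIDR block $2.
# Both addresses are converted to integers and compared after dropping
# the host bits (integer division by the block size).
in_cidr() {
    awk -v ip="$1" -v cidr="$2" 'BEGIN {
        split(cidr, c, "/"); split(ip, a, "."); split(c[1], n, ".")
        ipn   = ((a[1] * 256 + a[2]) * 256 + a[3]) * 256 + a[4]
        netn  = ((n[1] * 256 + n[2]) * 256 + n[3]) * 256 + n[4]
        block = 2 ^ (32 - c[2])
        exit !(int(ipn / block) == int(netn / block))
    }'
}

# The Fuel admin node address against the vmnet3 range used here.
if in_cidr 10.20.0.2 10.20.0.0/24; then
    echo "10.20.0.2 is reachable on the 10.20.0.0/24 admin network"
else
    echo "10.20.0.2 is outside the configured range"
fi
```

If the virtual network were left on a different range, the check would fail and the browser on the host would not be able to reach the admin console.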

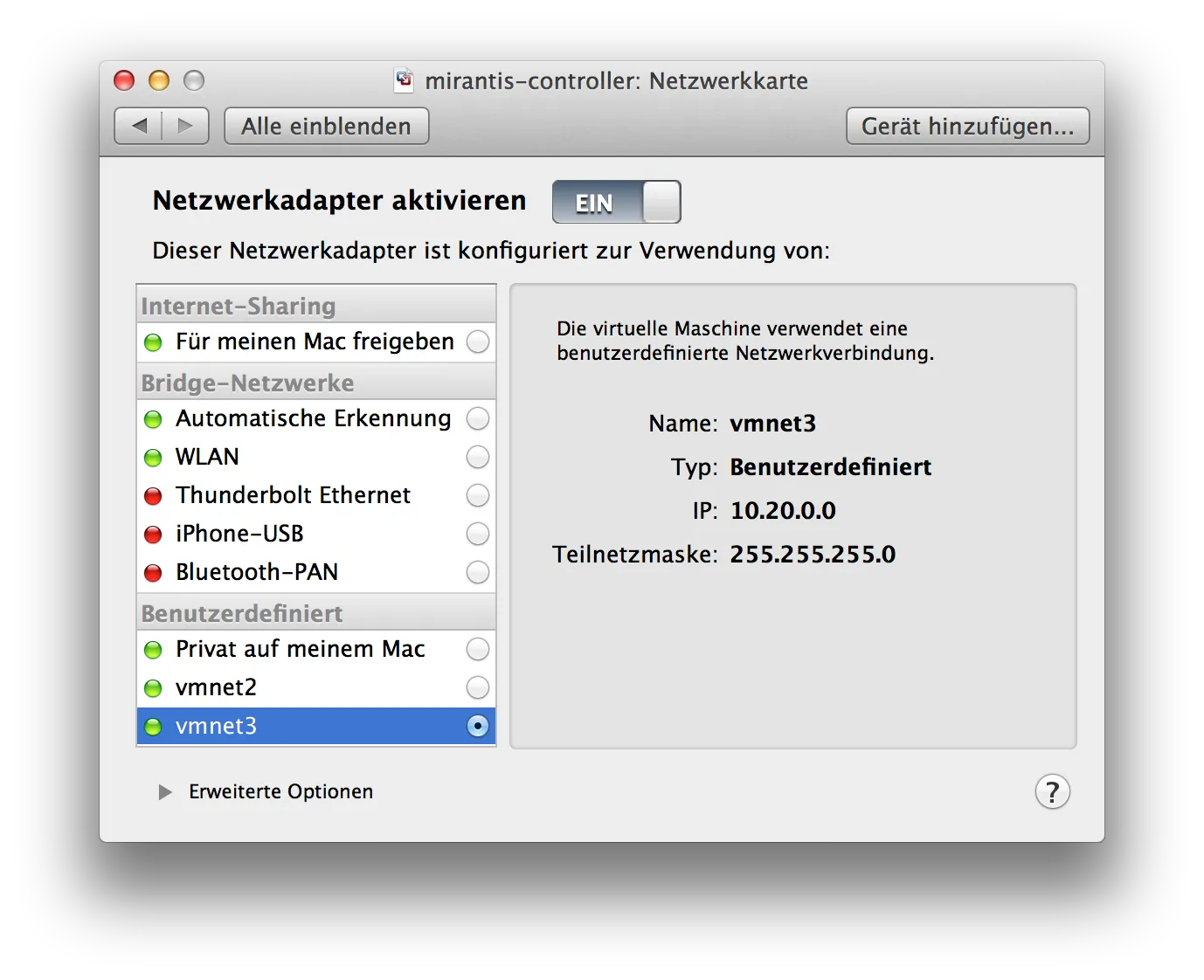

Make sure the VM is connected to the correct virtual network:

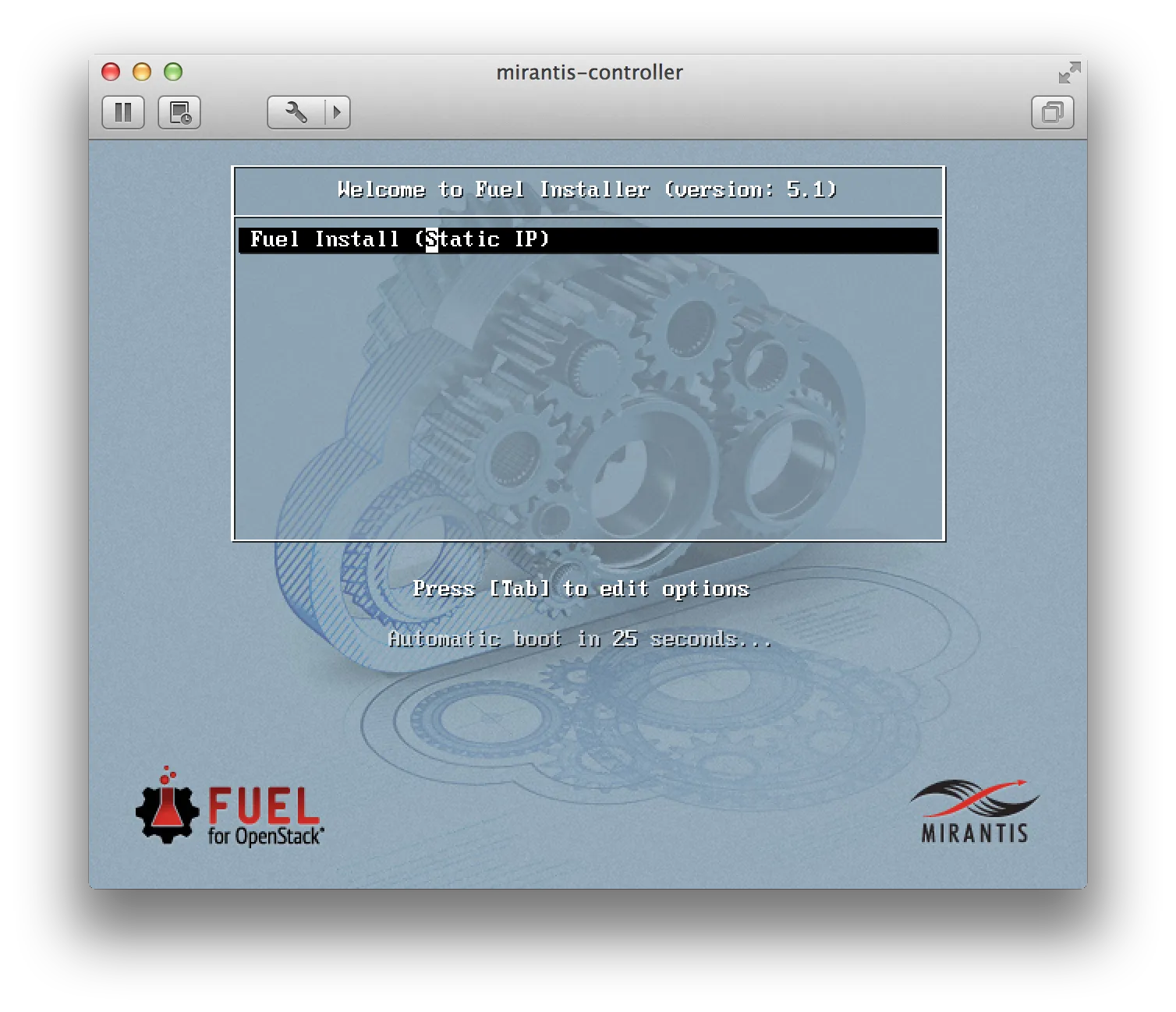

We can now start the VM and the automatic installation of Fuel will do the rest:

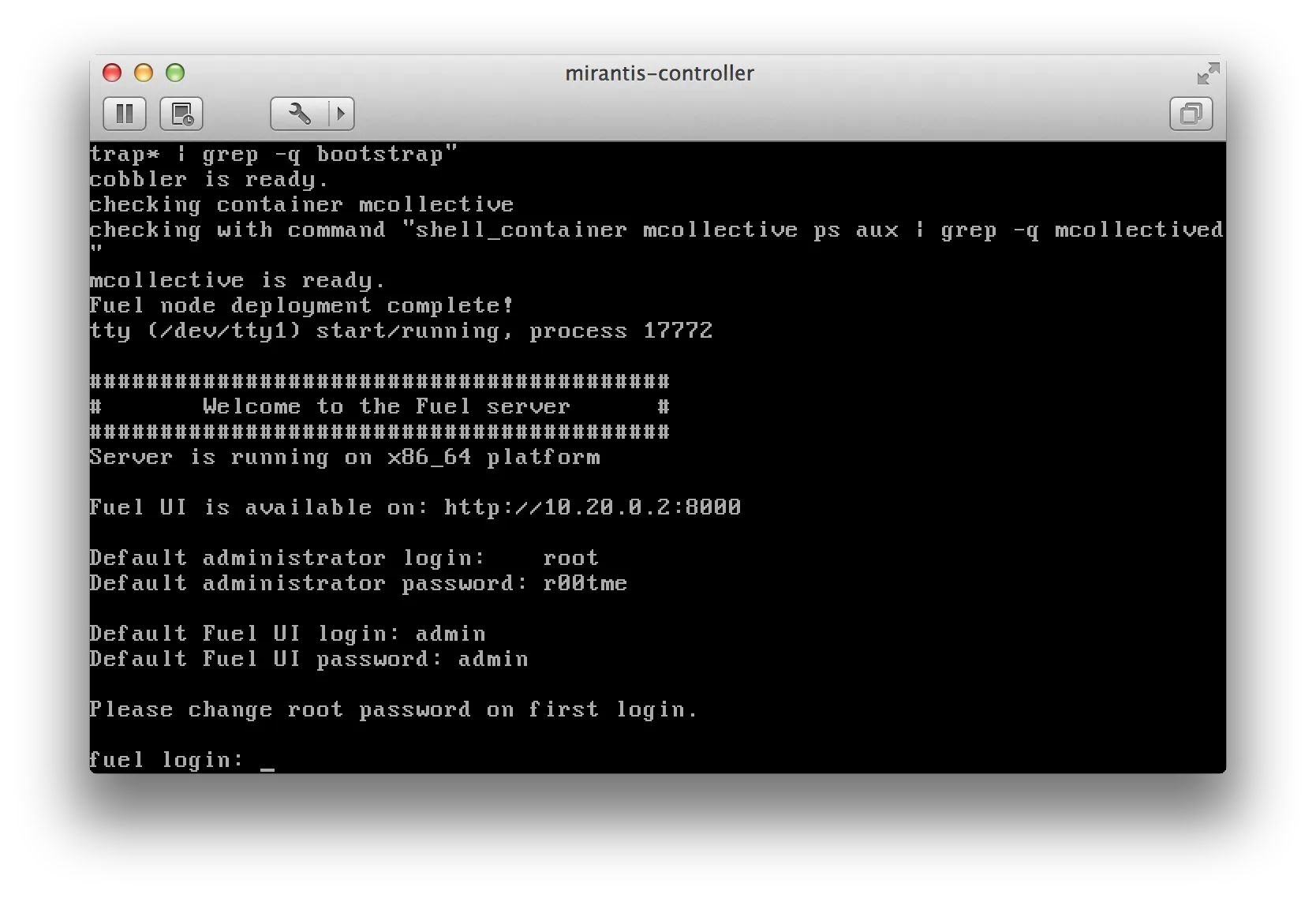

After a successful install you should see the following screen:

The bootstrapping can be done using a web browser; no login on the VM console is needed.

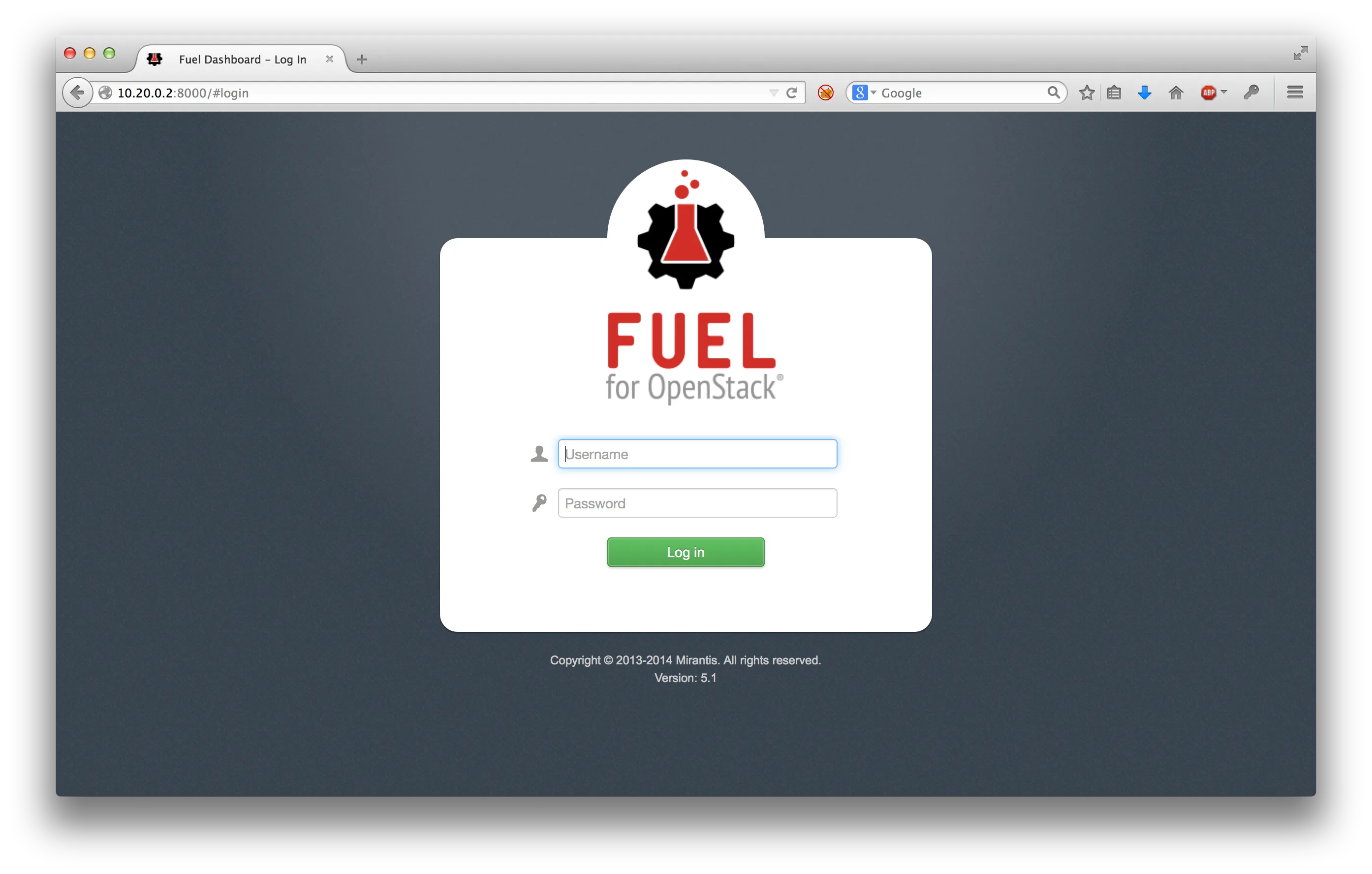

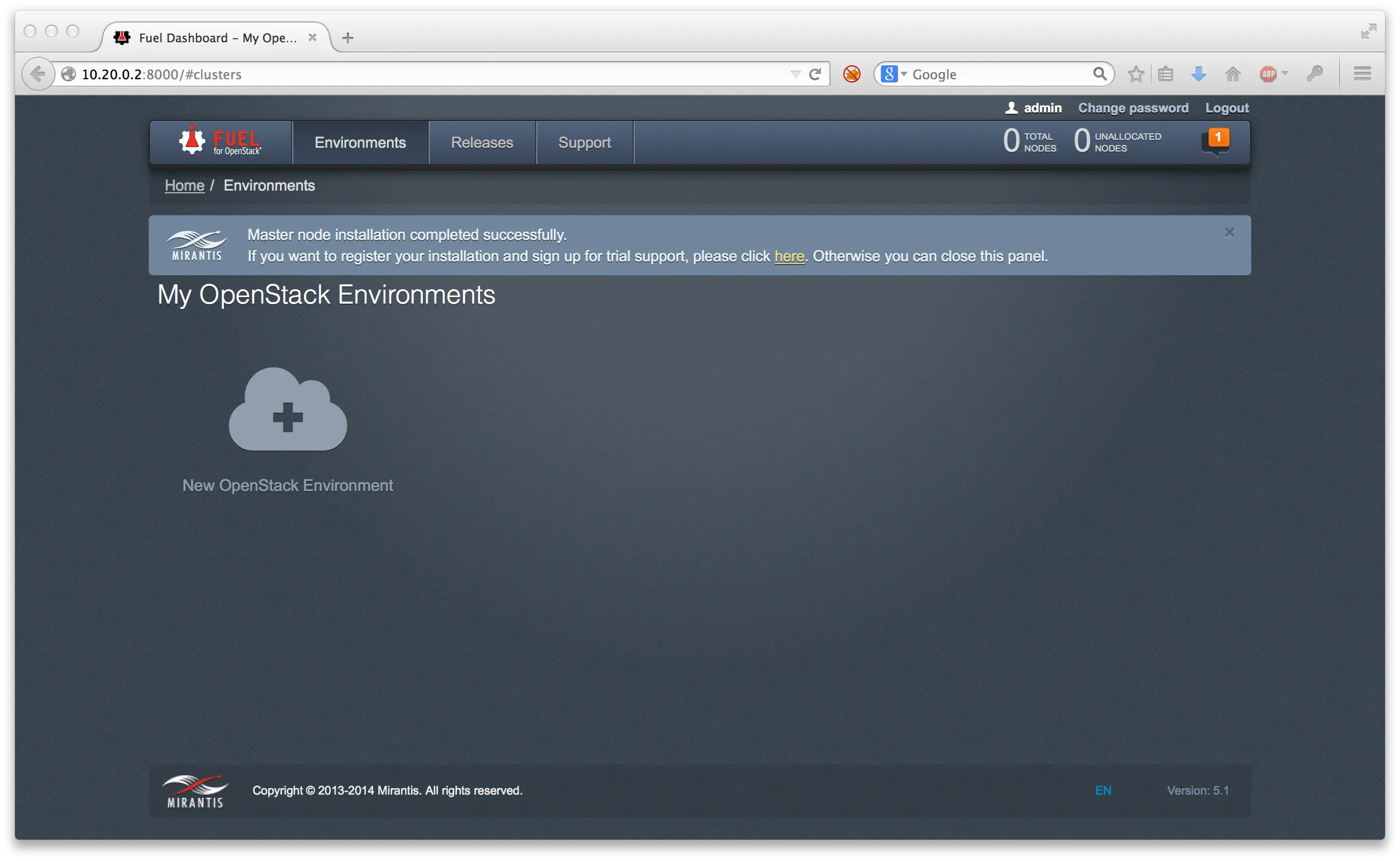

Once the installation of the admin node has finished, the Fuel web interface is available (by default) at http://10.20.0.2:8000/#login with the credentials admin:admin:
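Since the unattended installation takes a while, it can be convenient to poll the web interface from the host instead of reloading the browser. The sketch below uses `curl` for that. The function name `wait_for_fuel`, the number of attempts and the 10 second pause are assumptions for this example; only the URL comes from the setup above.

```shell
#!/bin/sh
# Hypothetical helper: poll a URL until it answers successfully.
#   $1 = URL (defaults to the Fuel UI address used in this setup)
#   $2 = maximum number of attempts (default 30)
wait_for_fuel() {
    url=${1:-http://10.20.0.2:8000/}
    tries=${2:-30}
    while [ "$tries" -gt 0 ]; do
        # --fail makes curl return non-zero on HTTP errors (4xx/5xx).
        if curl --silent --fail --max-time 5 --output /dev/null "$url"; then
            echo "Fuel UI is up at $url"
            return 0
        fi
        tries=$((tries - 1))
        sleep 10
    done
    echo "Fuel UI did not respond at $url" >&2
    return 1
}

# Example usage:
#   wait_for_fuel                              # default URL, 30 attempts
#   wait_for_fuel http://10.20.0.2:8000/ 60    # wait up to about 10 minutes
```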

Before the admin node can go to work it needs empty VMs to bootstrap via PXE boot. The setup of the VMs differs slightly from that of the admin node.

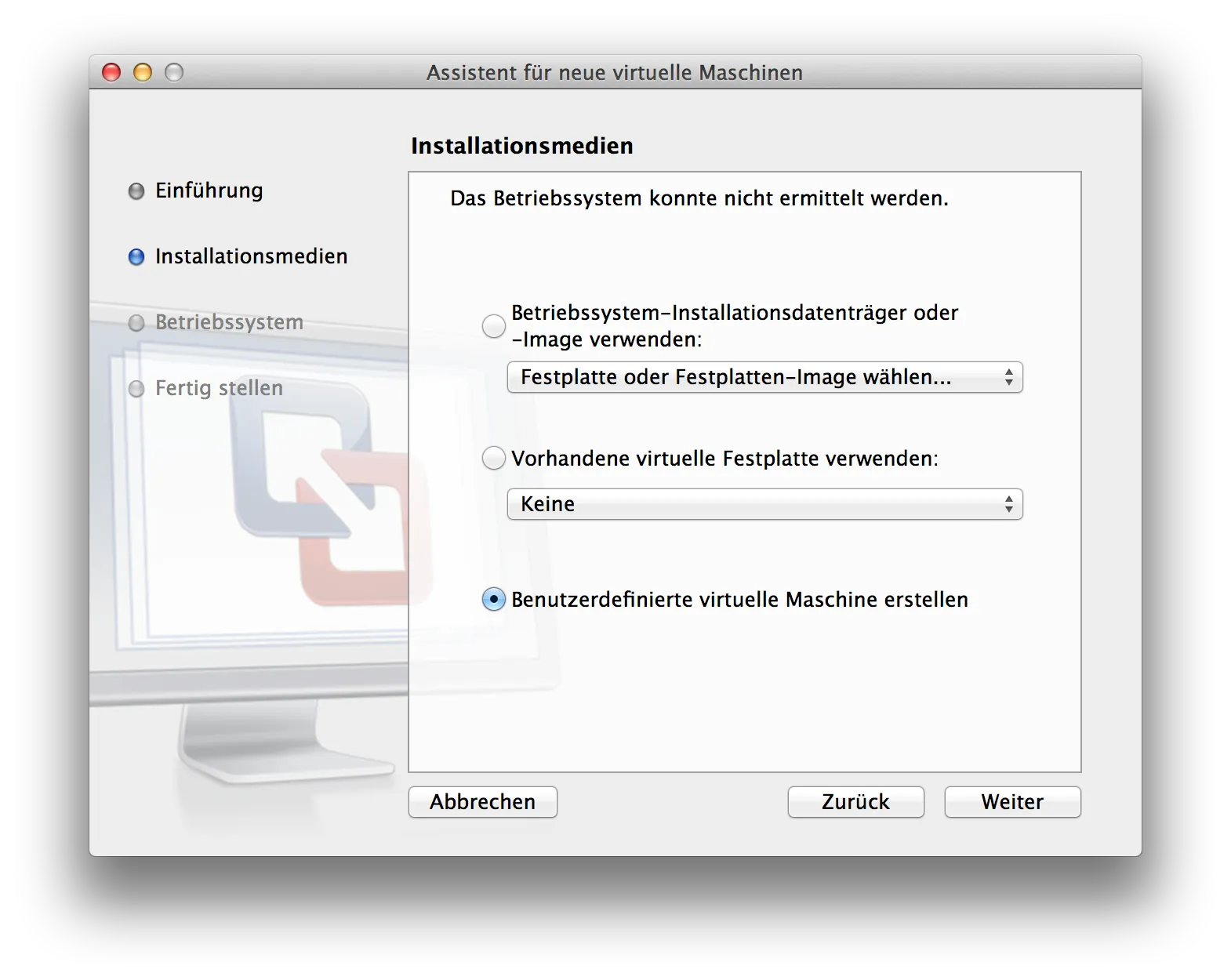

First, three custom VMs need to be defined without an existing disk or ISO, using the specifications already mentioned for the controller and compute nodes:

Just like for the admin node, CentOS Linux 64-bit is chosen as the profile. Also verify that the number of cores, RAM and HDD settings are correct and that nested virtualization is active:

For the public network of OpenStack an additional virtual network needs to be created, as was done for the initial admin network, in this case with the default range provided by Mirantis (172.16.0.0/24 on vmnet4):

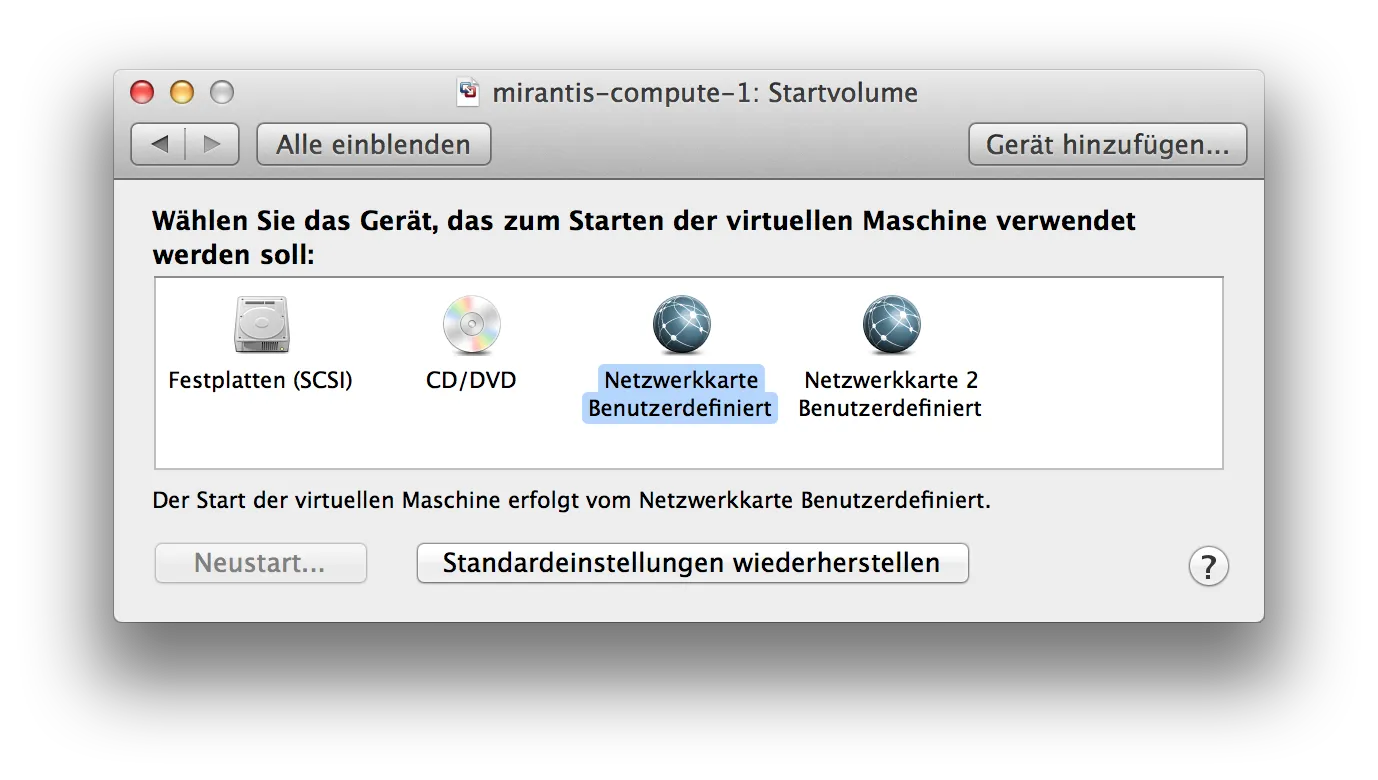

In order to PXE boot, all VMs also need to be connected to the admin network vmnet3. In addition, each VM's boot device needs to be set to the NIC that is connected to that virtual network:

All VMs can now be started to be discovered by Fuel.

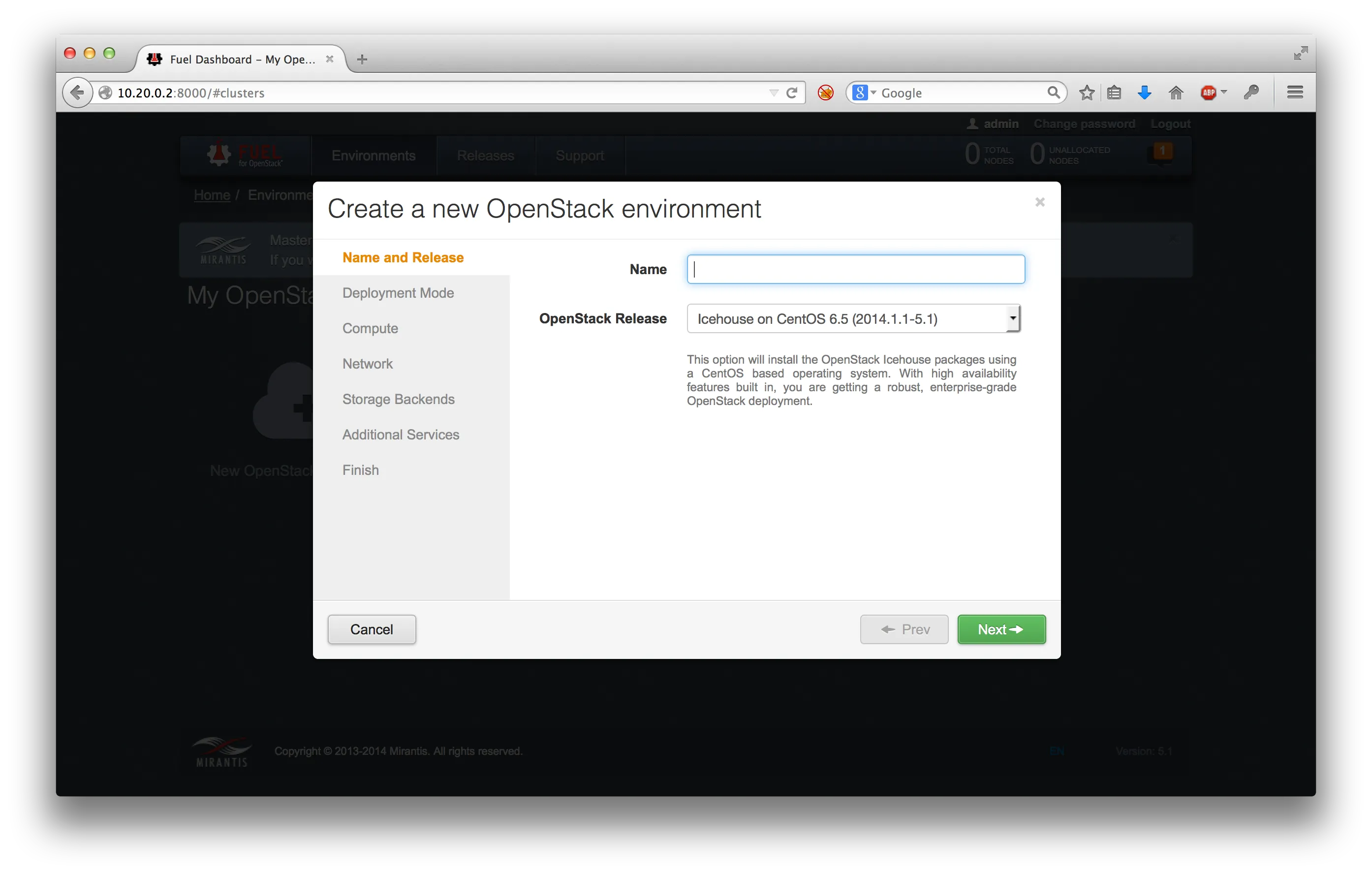

After logging into the Fuel admin dashboard we are presented with the option to create a new environment:

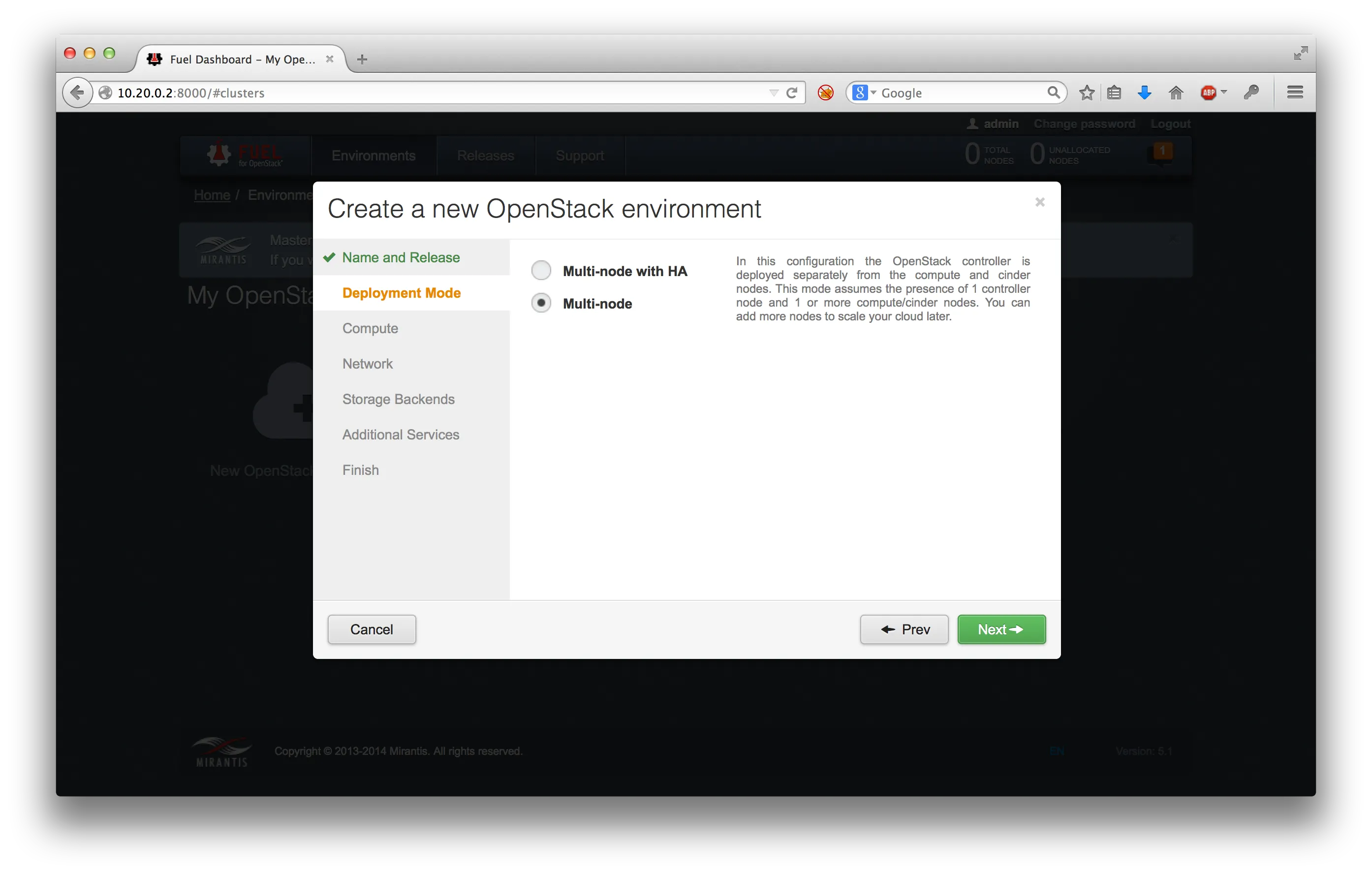

We choose an Icehouse installation based on CentOS 6.5 and a multi-node setup (non-HA):

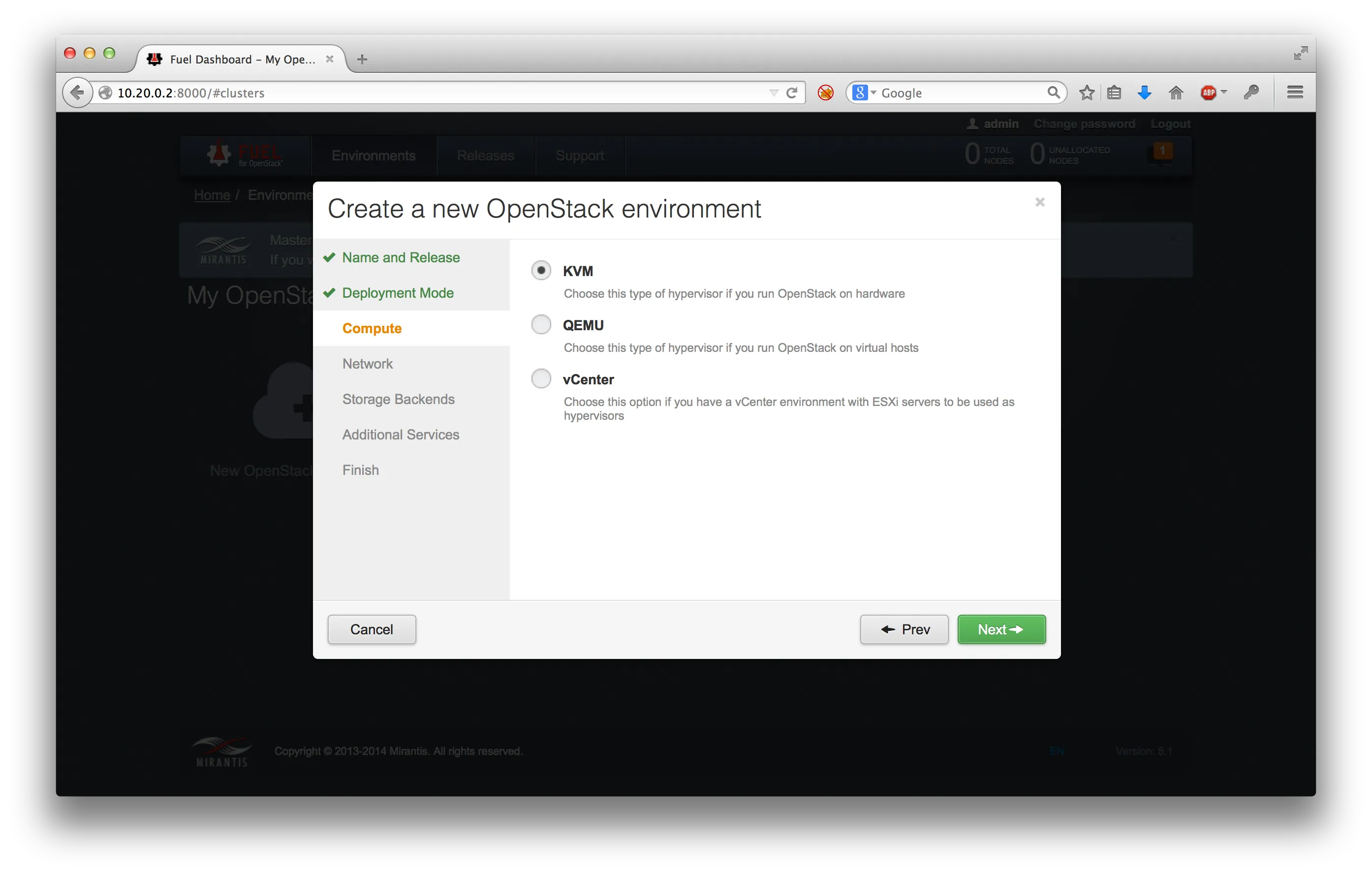

We can base our compute nodes on KVM, since we have nested virtualization available. QEMU setups can also be deployed but offer less performance.

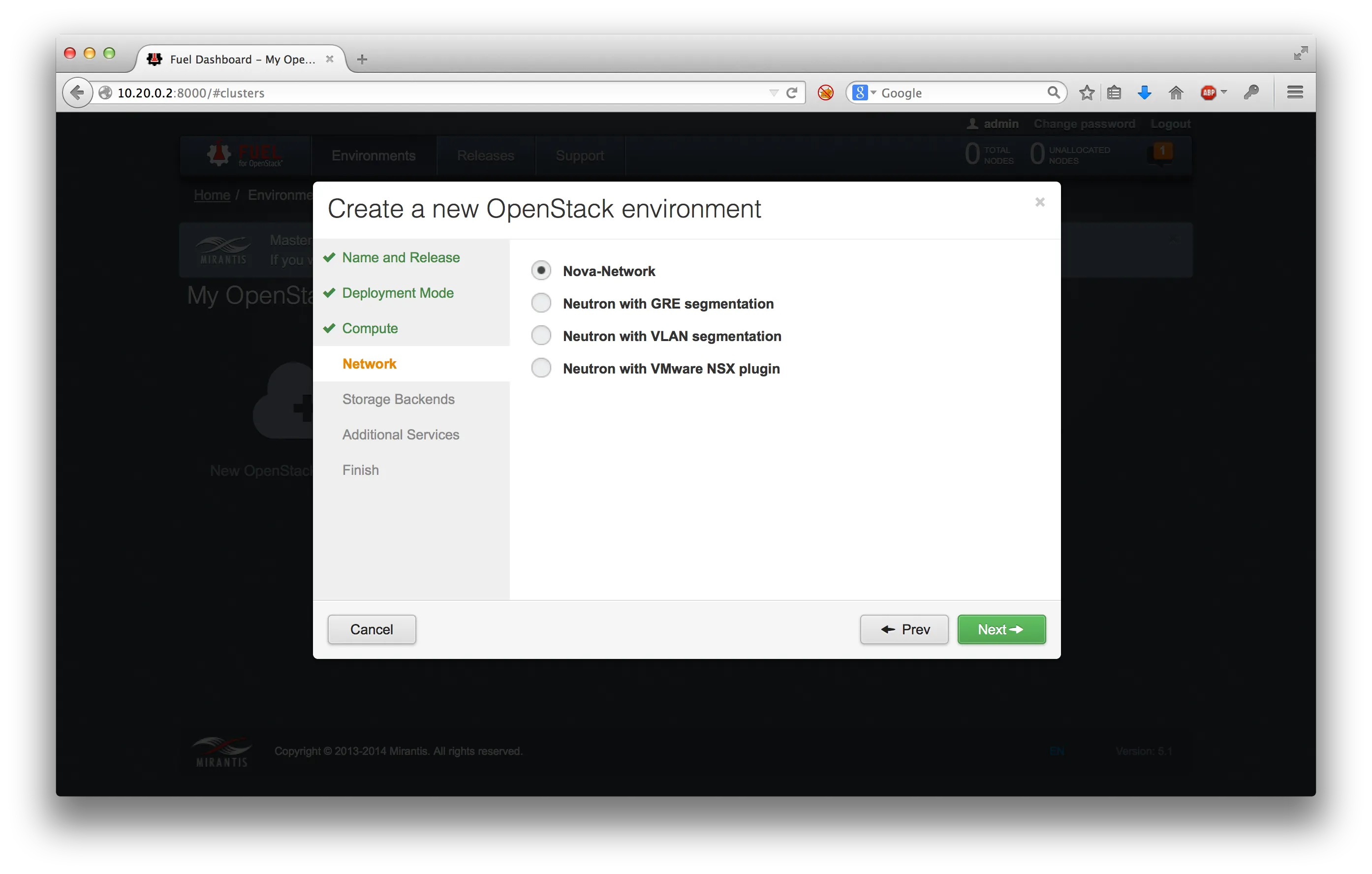

The network will be a simple nova-network (not Neutron) setup:

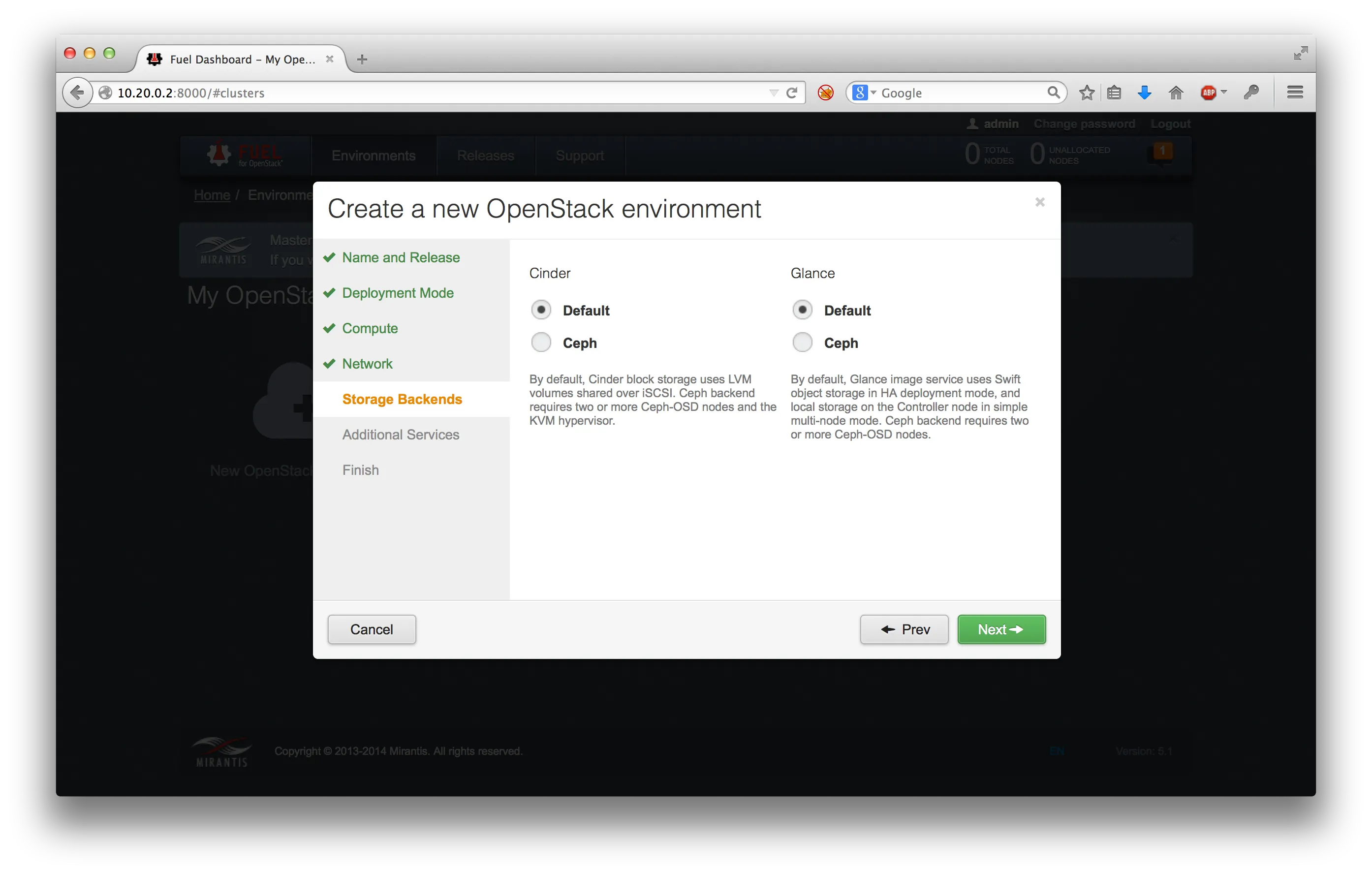

As storage backends, the default LVM volumes for Cinder (block storage) and local storage for Glance (image storage) are selected:

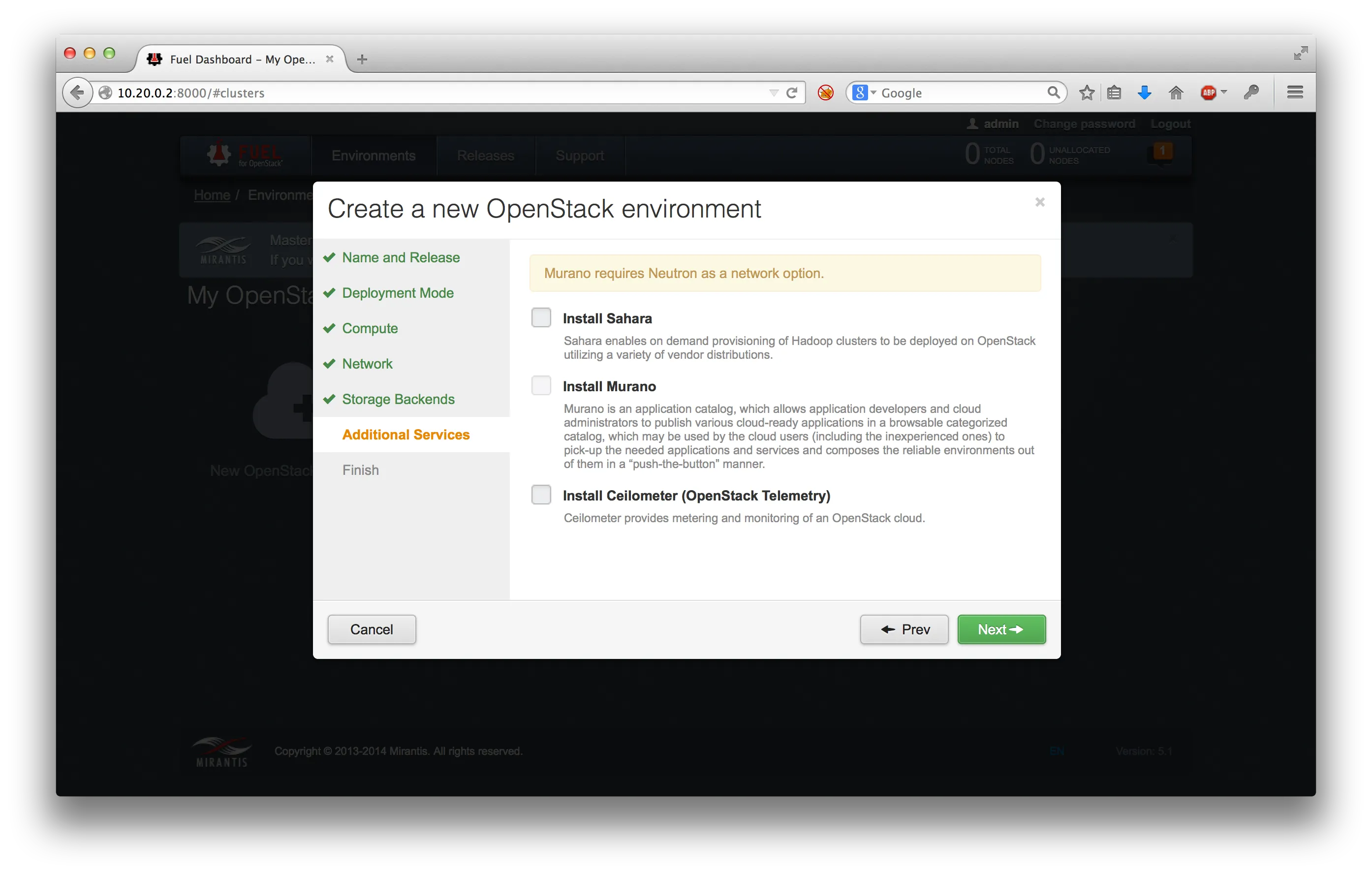

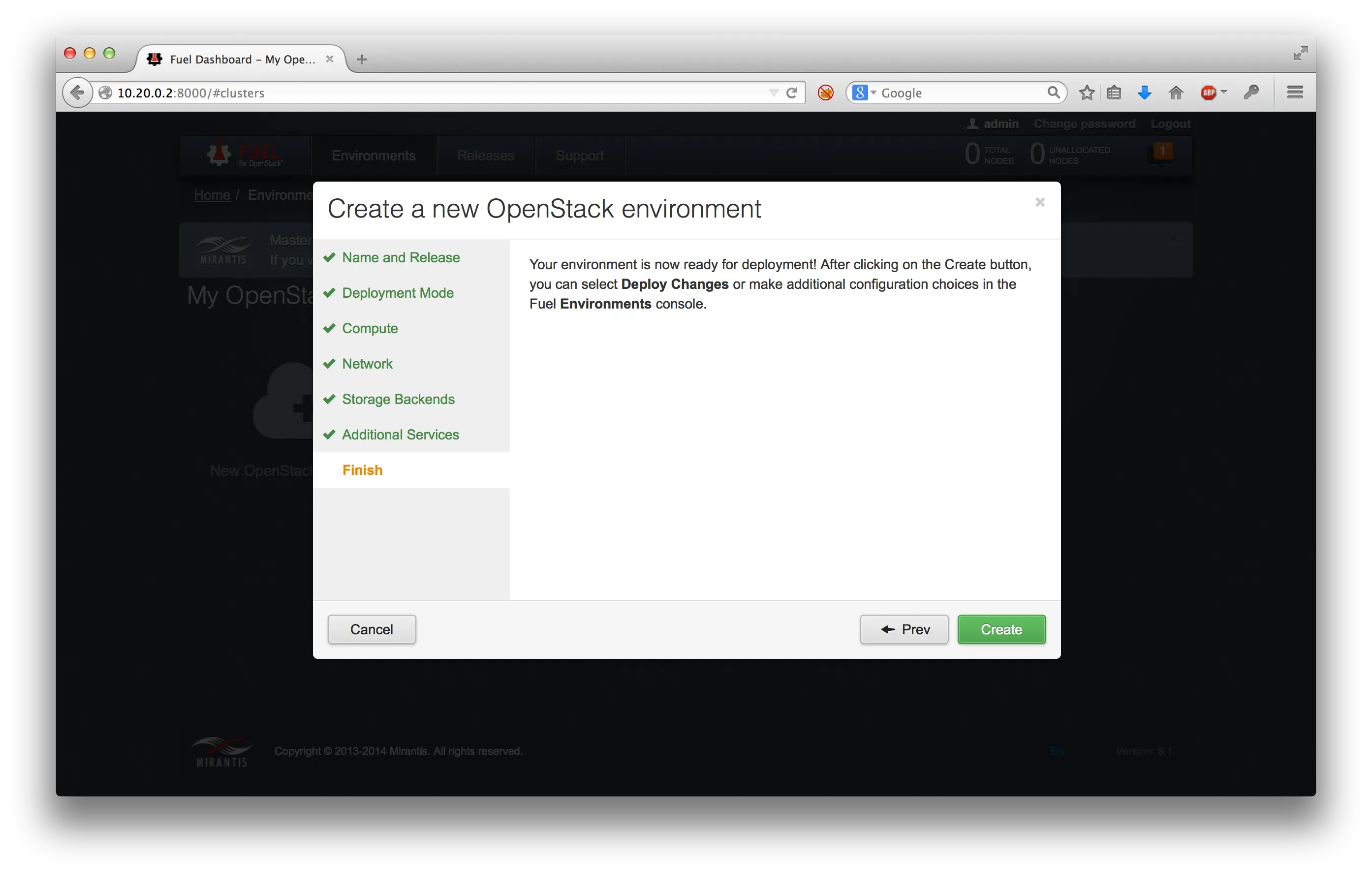

We refrain from installing additional services and finish the creation of a new environment:

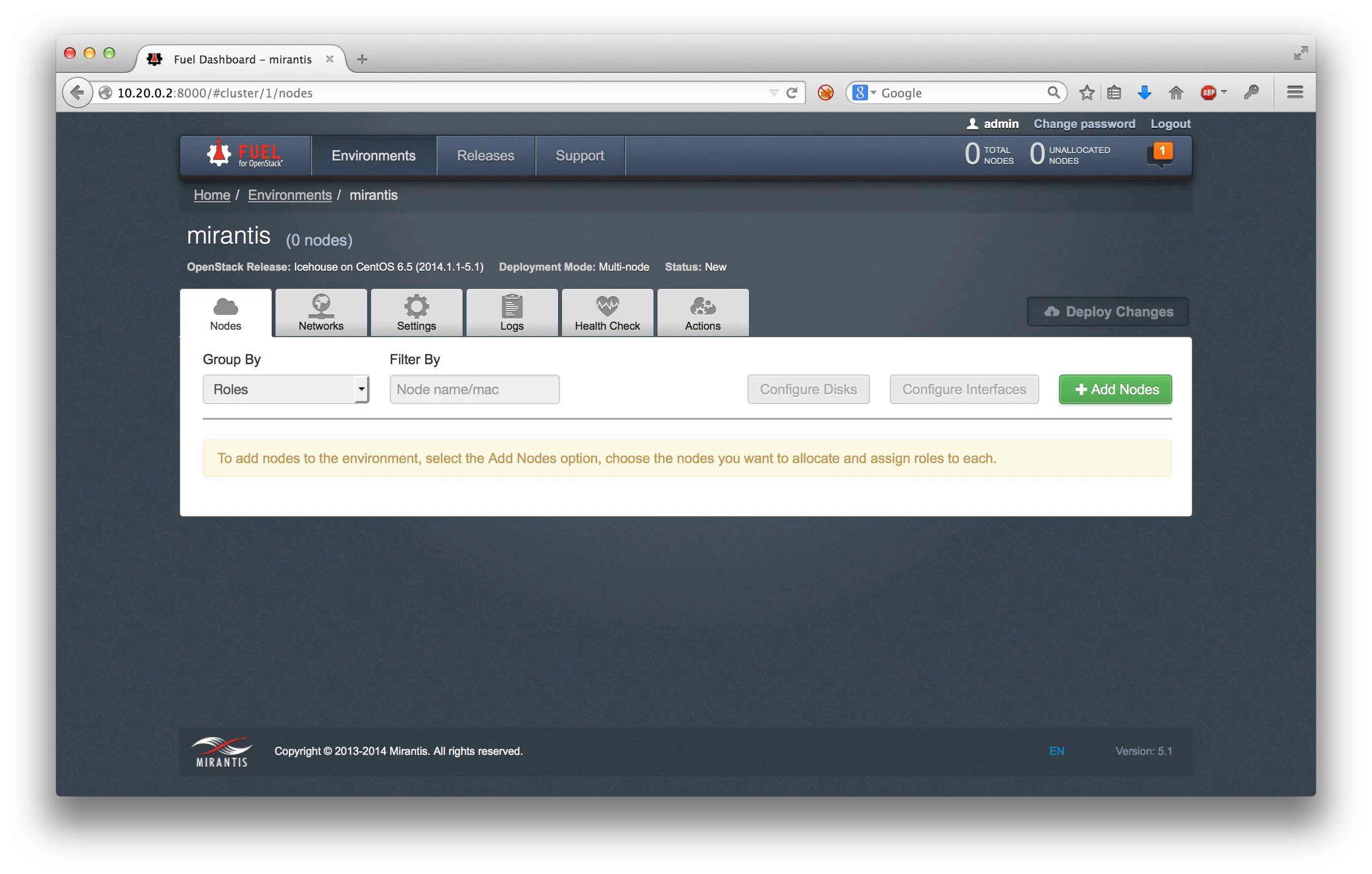

Inside the new environment new nodes can be added once they are discovered in the admin network using PXE boot:

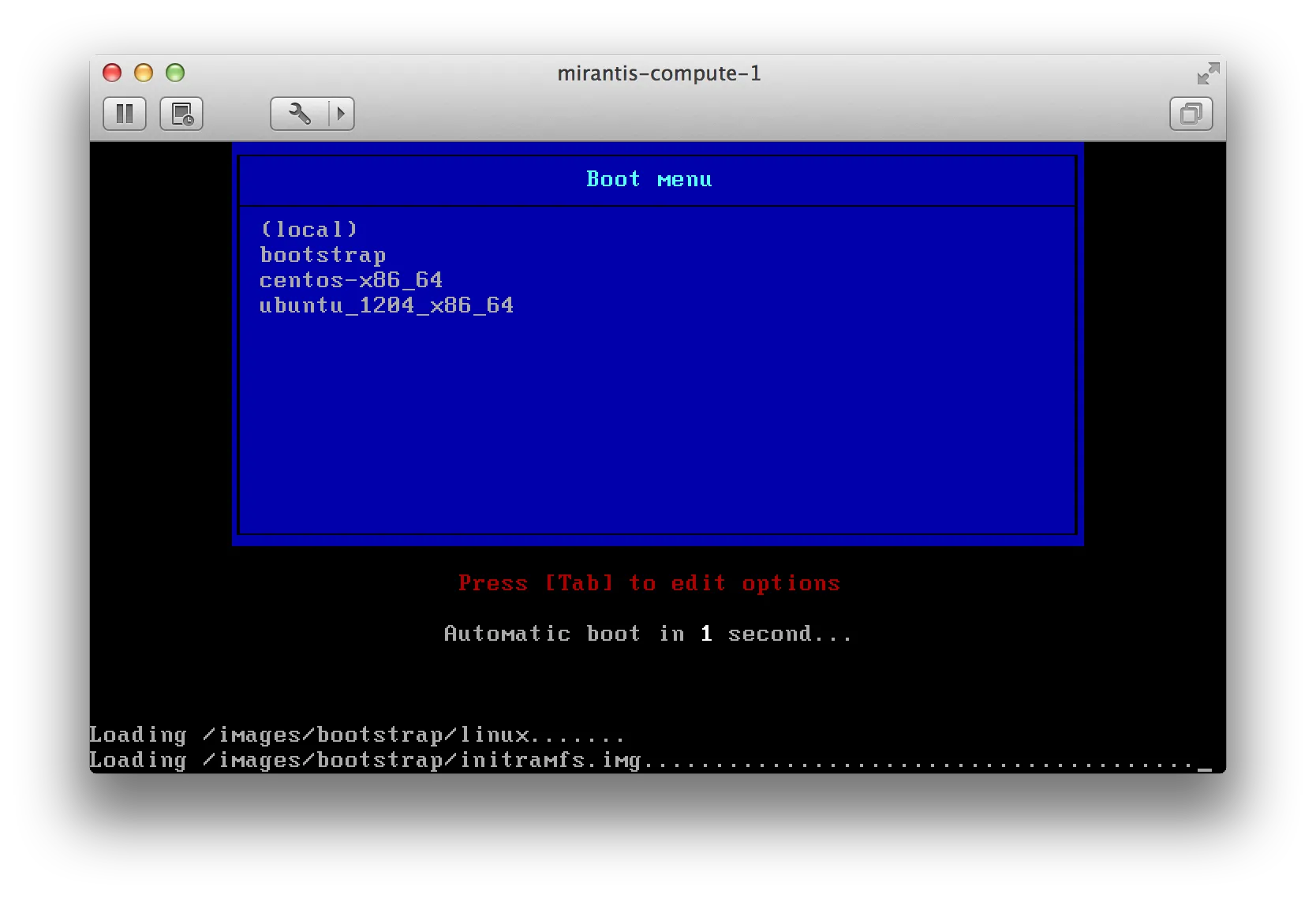

Nodes should automatically boot into bootstrap mode:

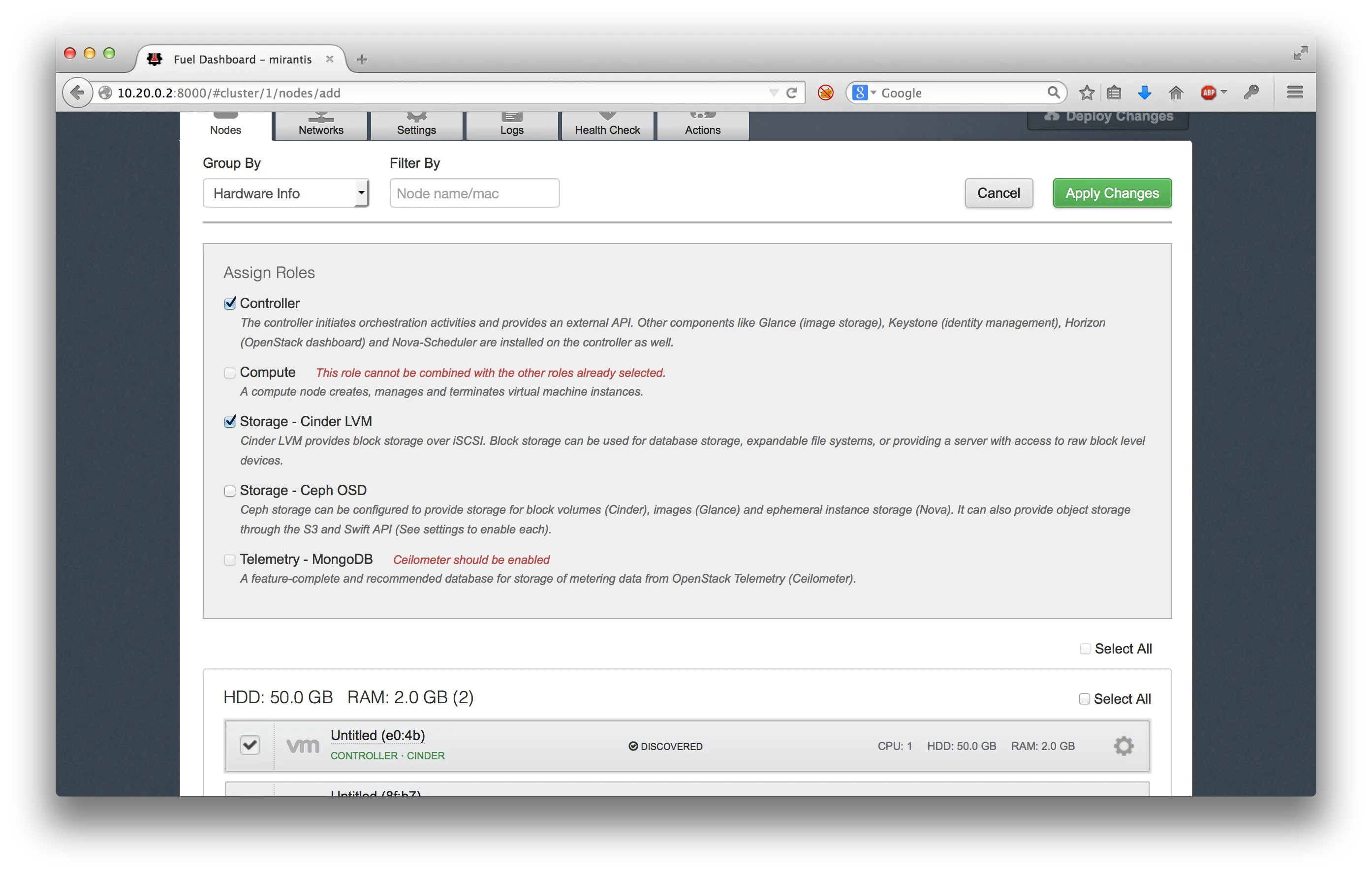

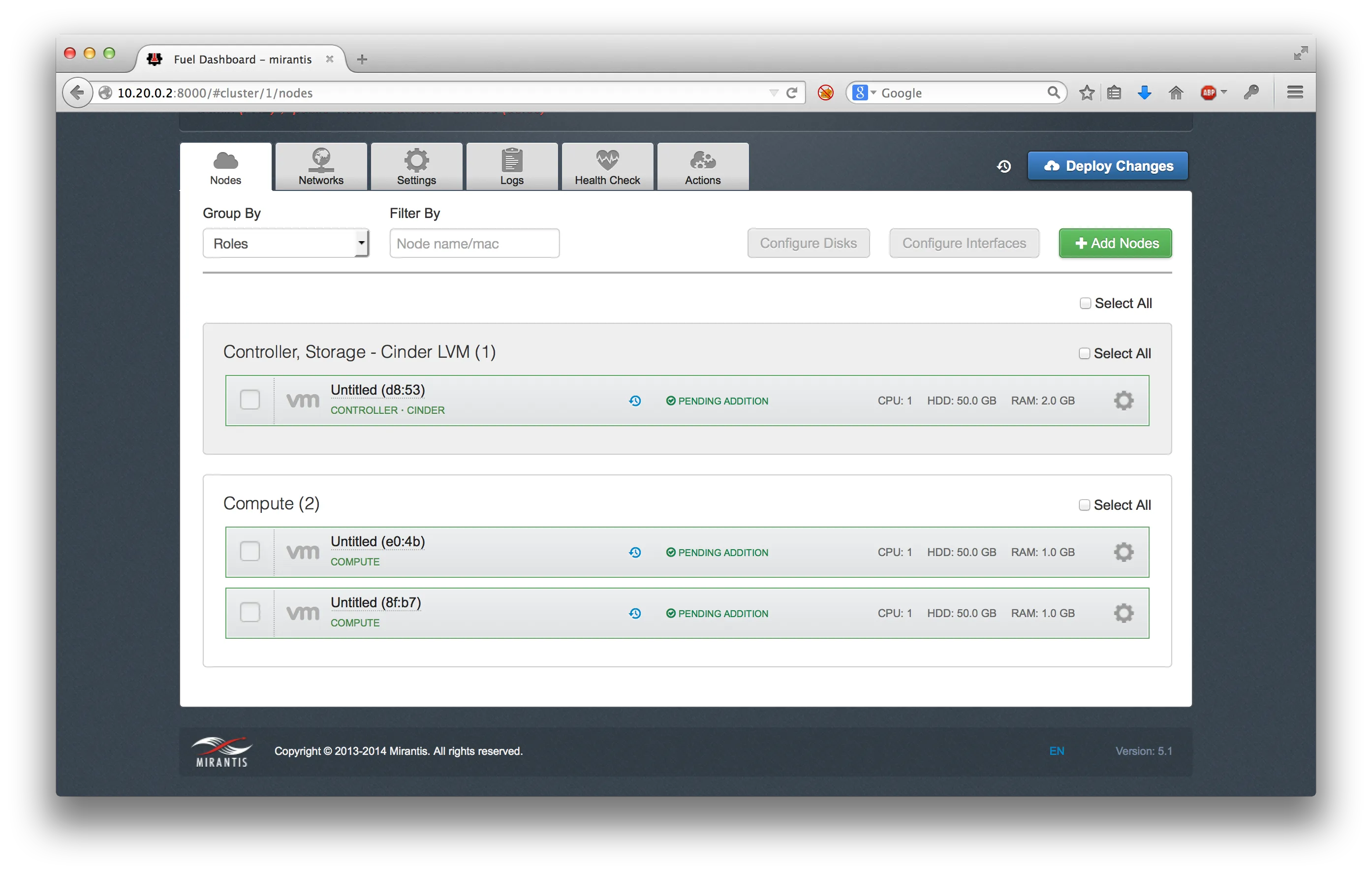

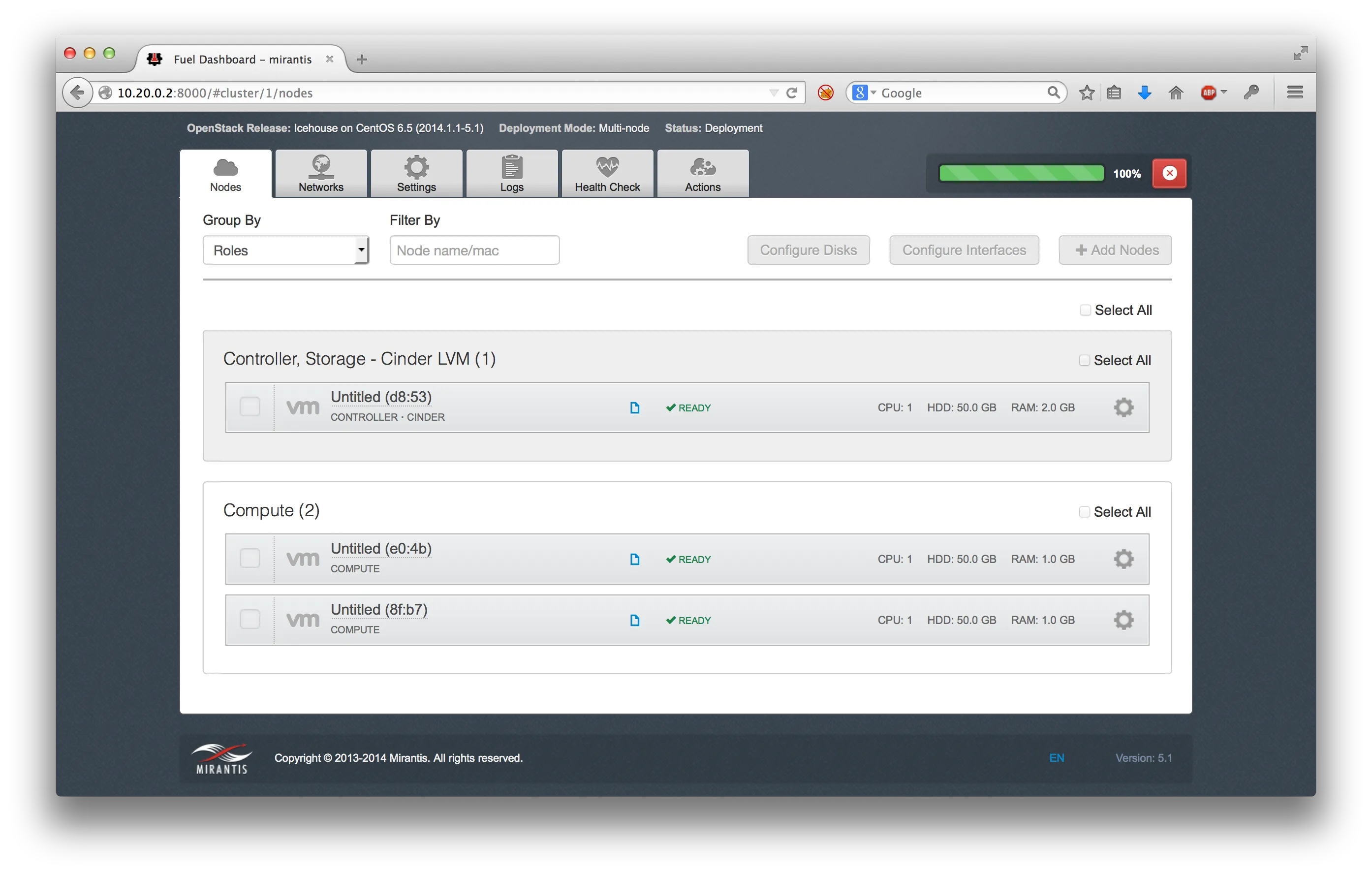

As soon as the nodes are identified by Fuel they can be assigned to their respective roles. The controller will have the controller services and Cinder deployed, the compute nodes only the compute service:

You may also check if the default networks are allocated correctly in the nodes:

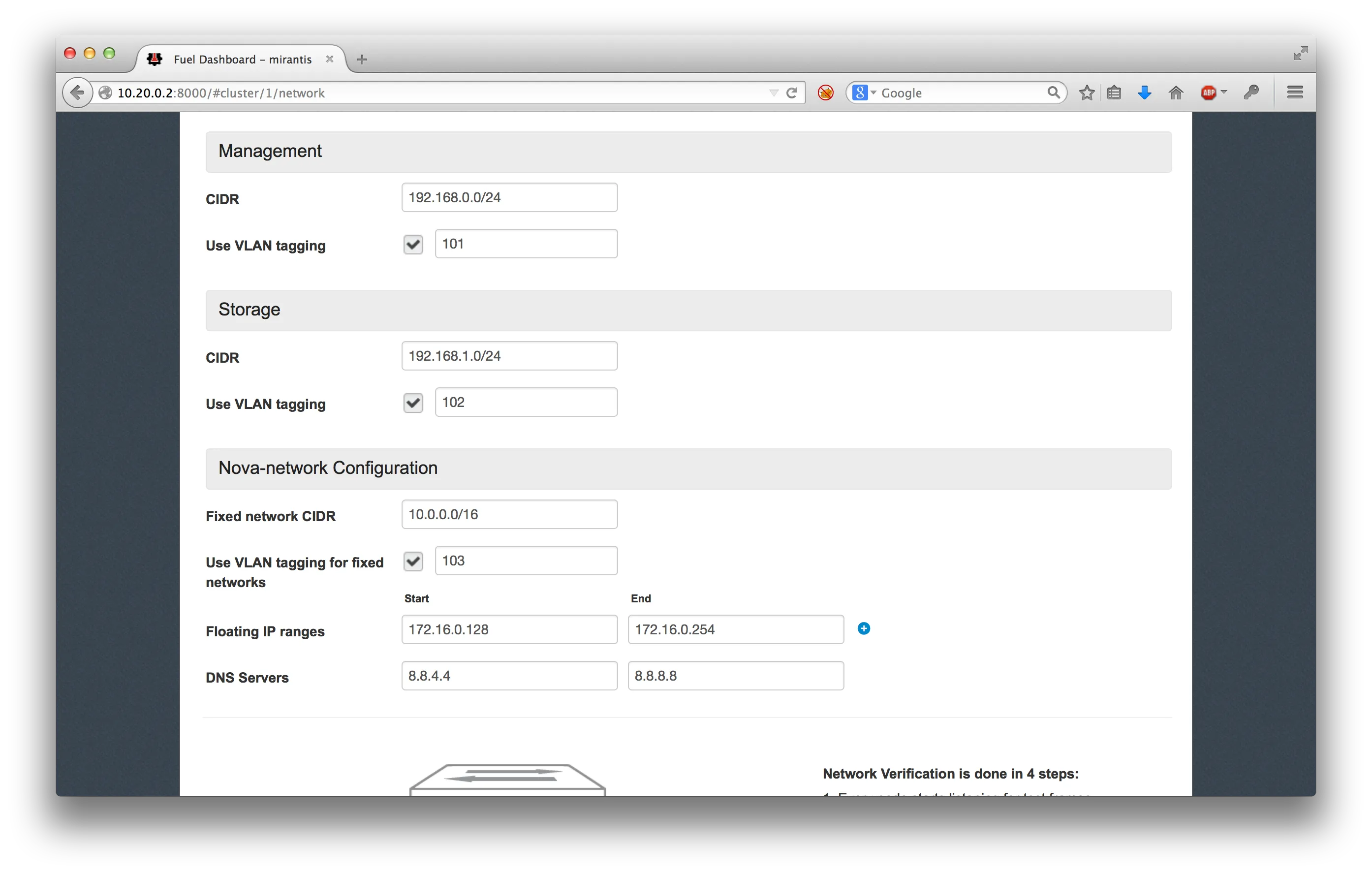

In addition, networking for admin, storage and nova network (fixed and floating) can be configured:

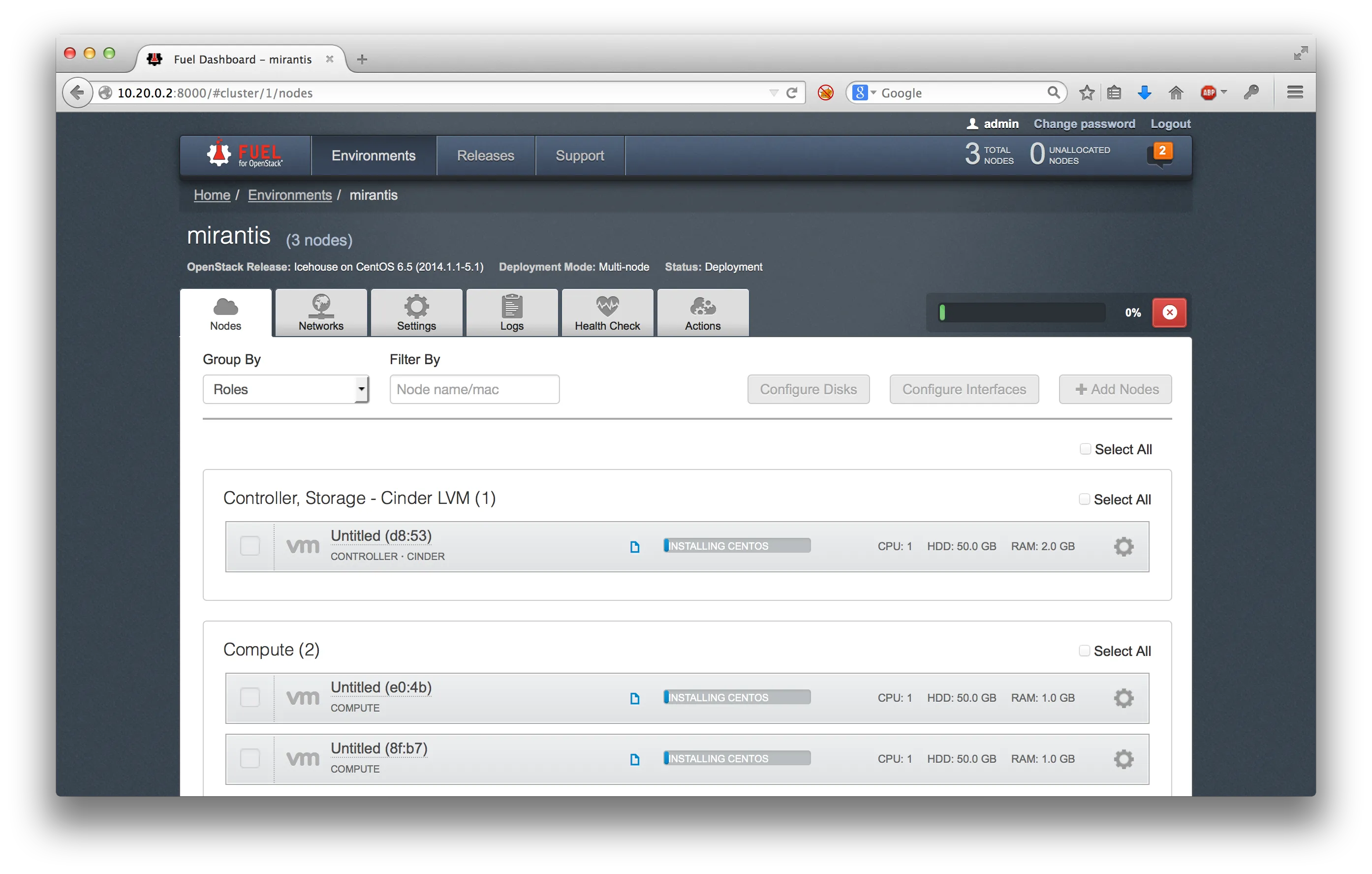

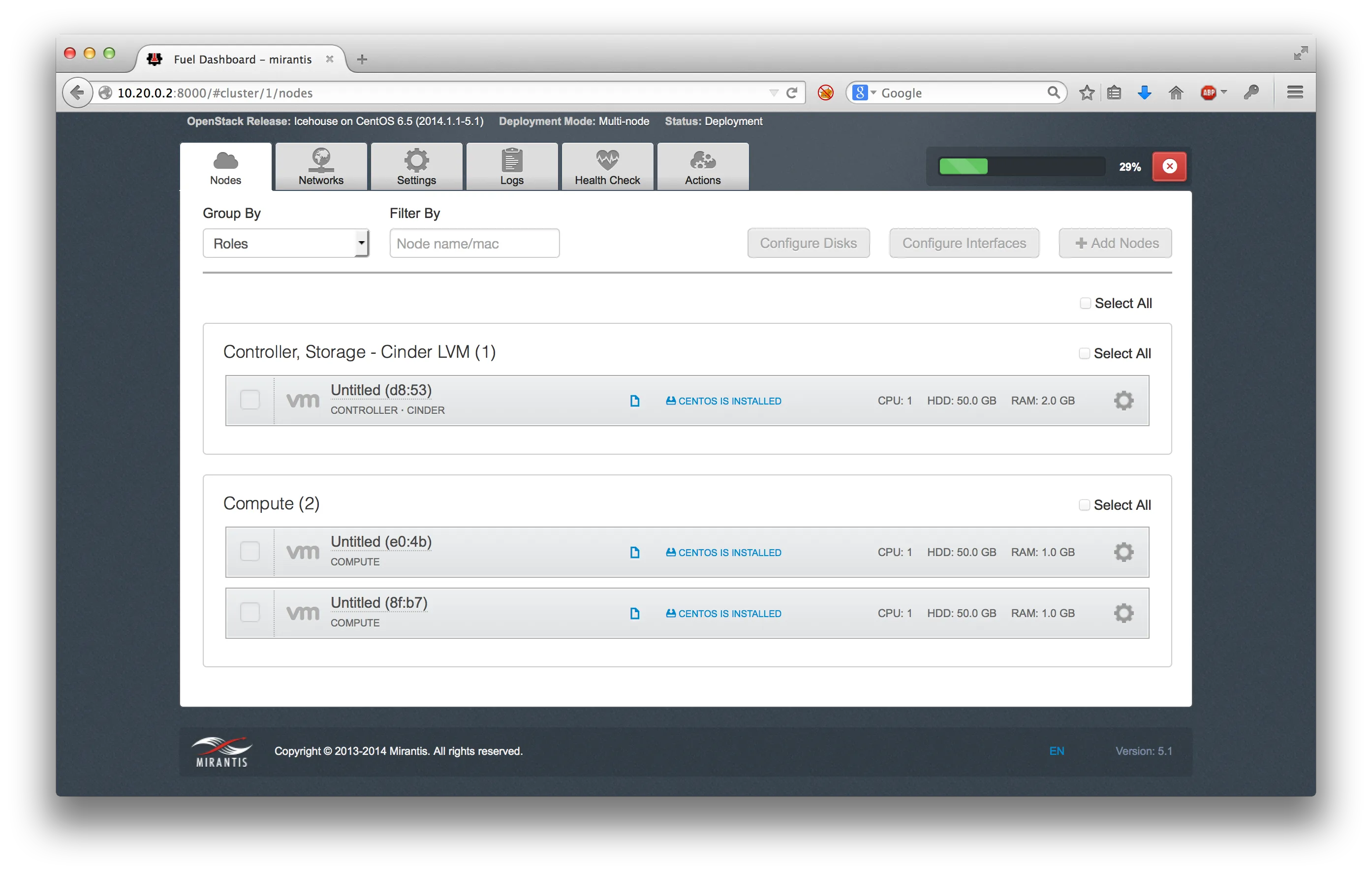

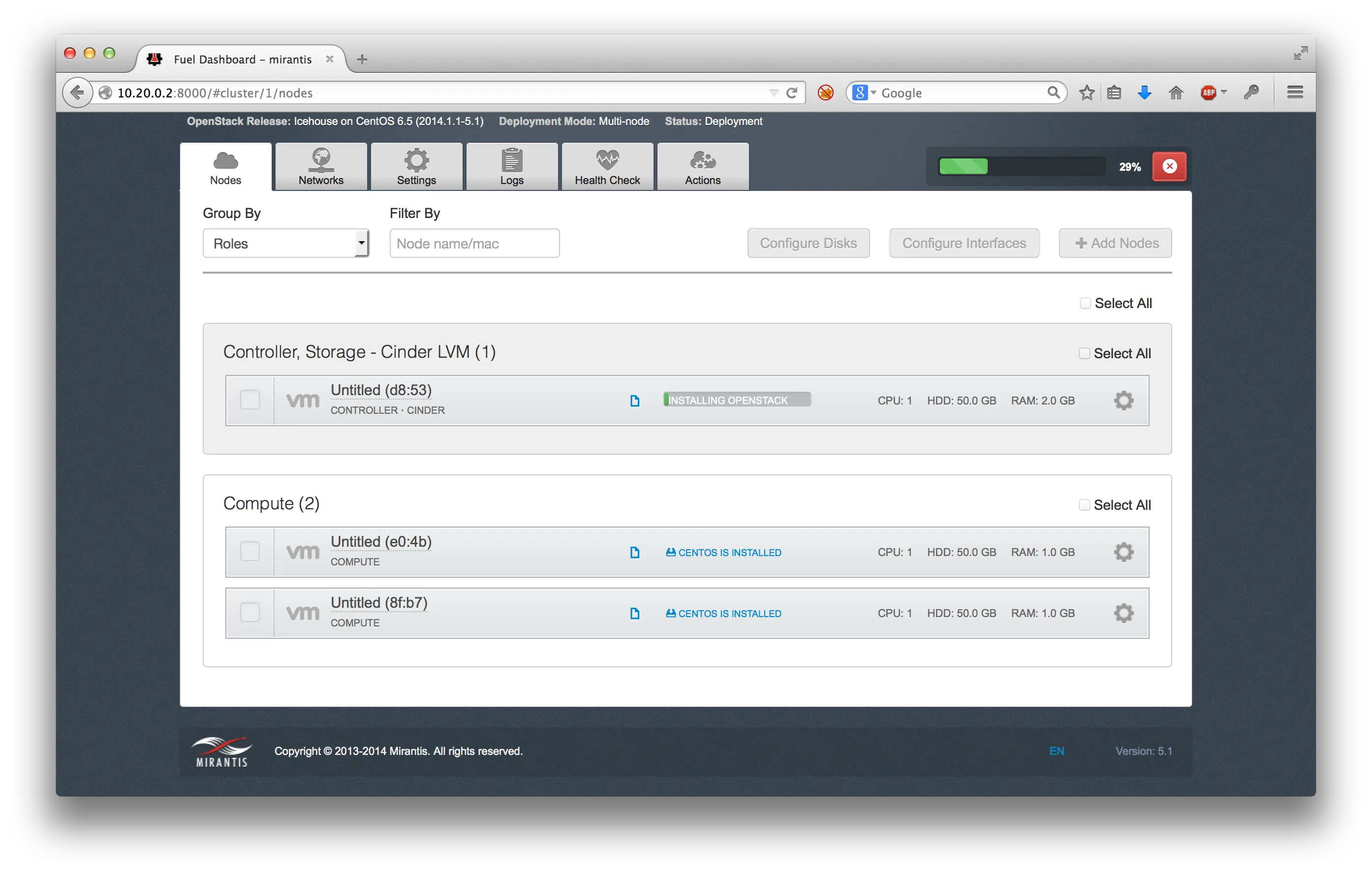

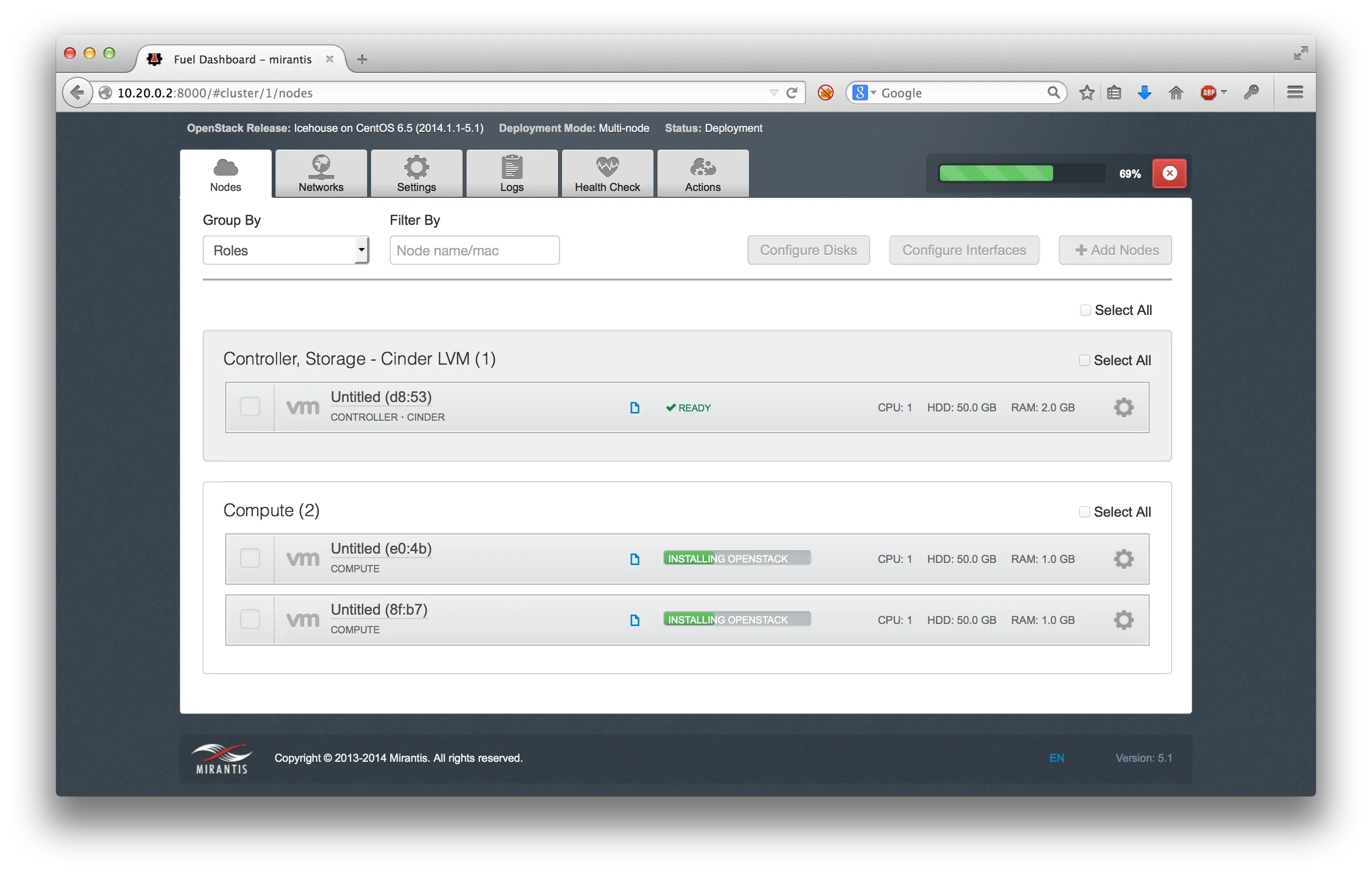

Once all nodes have assigned roles, the deployment process can be started. It will initially install CentOS 6.5 on all nodes, then set up the controller node and finally the two compute nodes:

You may run into a "Hardware not supported" error while installing CentOS, which you will only notice when looking at the virtual console. In this setup the warning can safely be ignored:

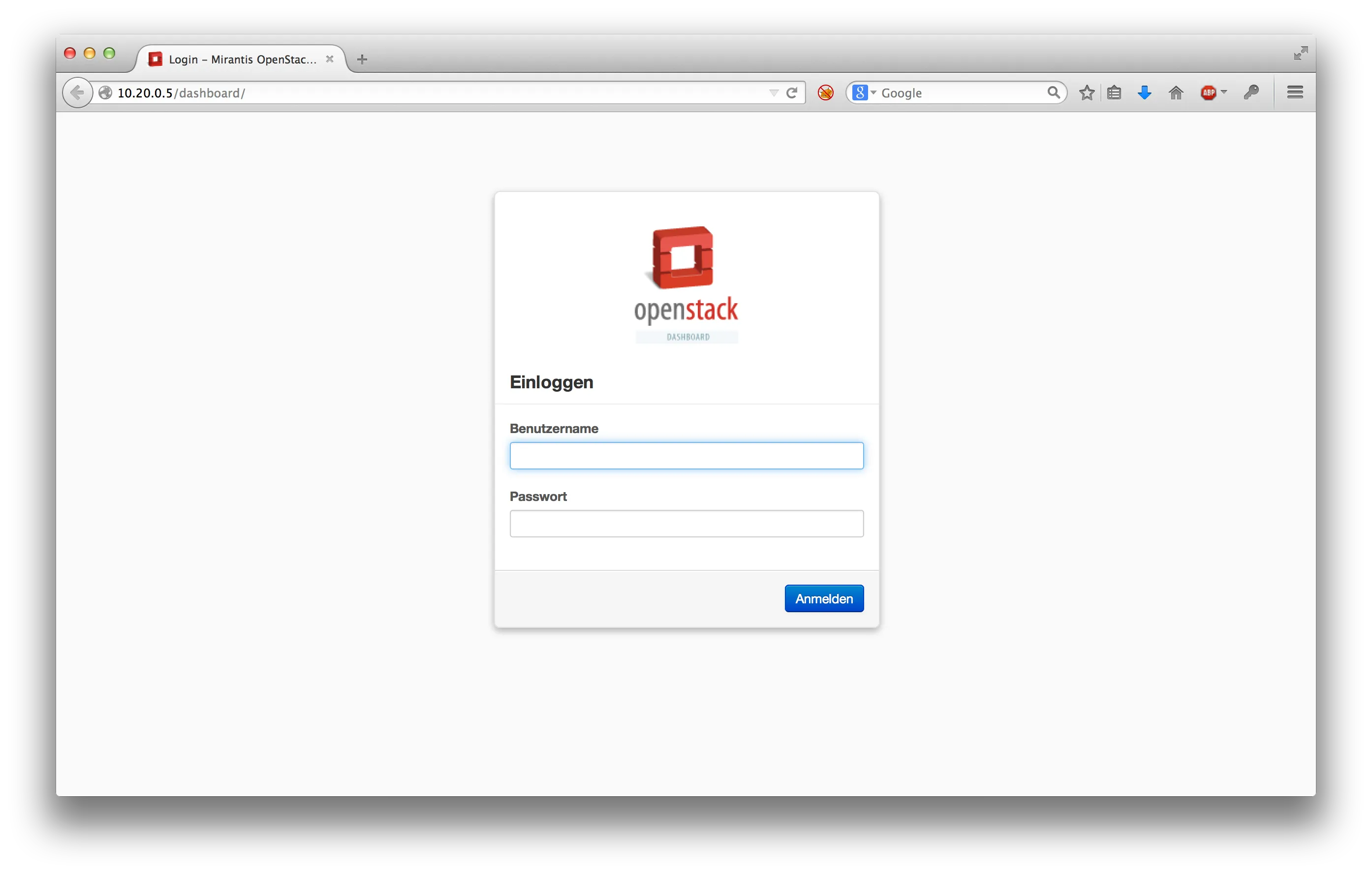

Once the deployment is finished, the Mirantis OpenStack dashboard is available at http://10.20.0.5/dashboard/. Fuel will also show the configured address once the deployment has completed. The default login is admin:admin:

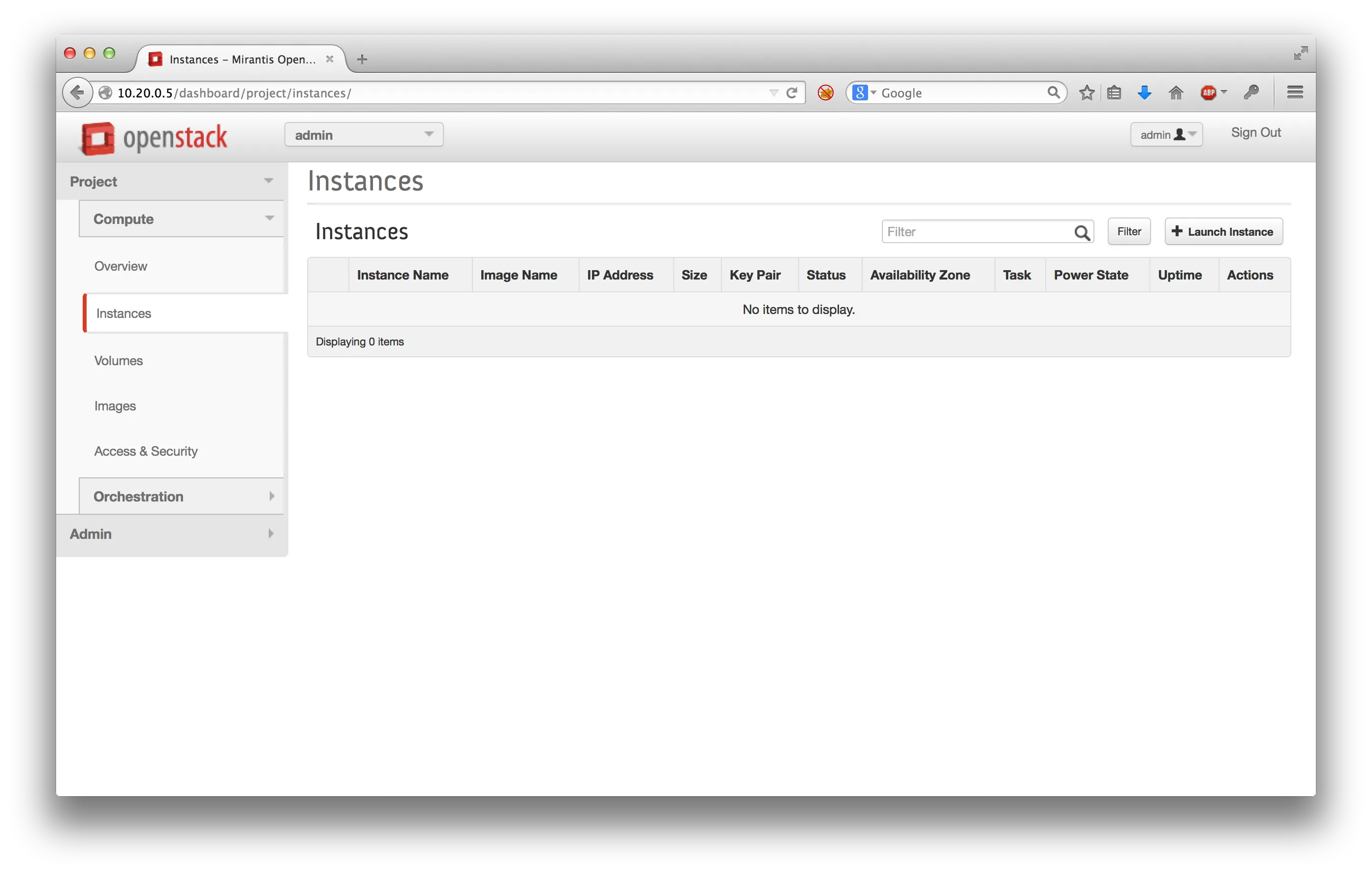

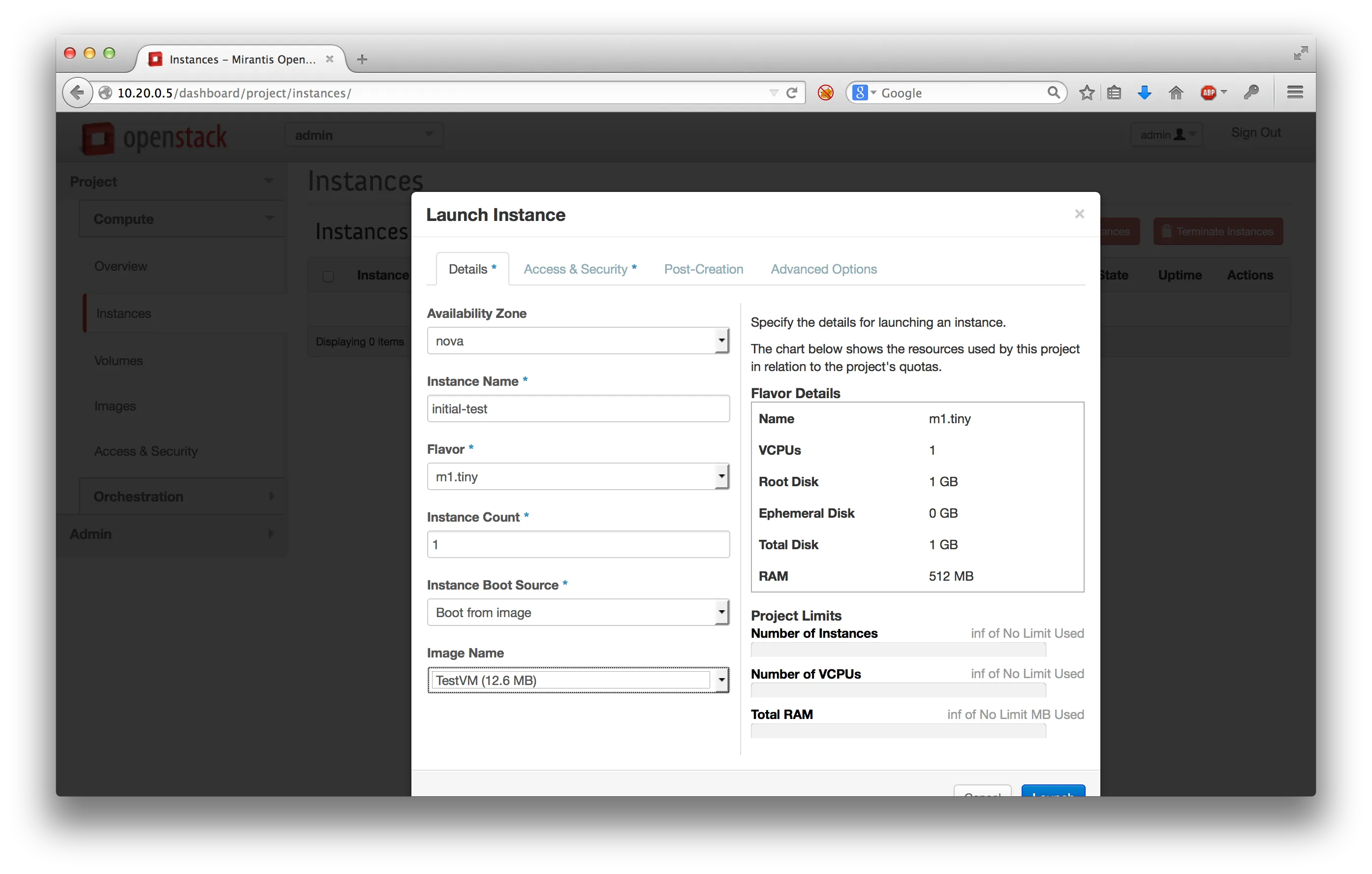

After a successful login, we can create test instances. A test image based on CirrOS is provided in the distribution:

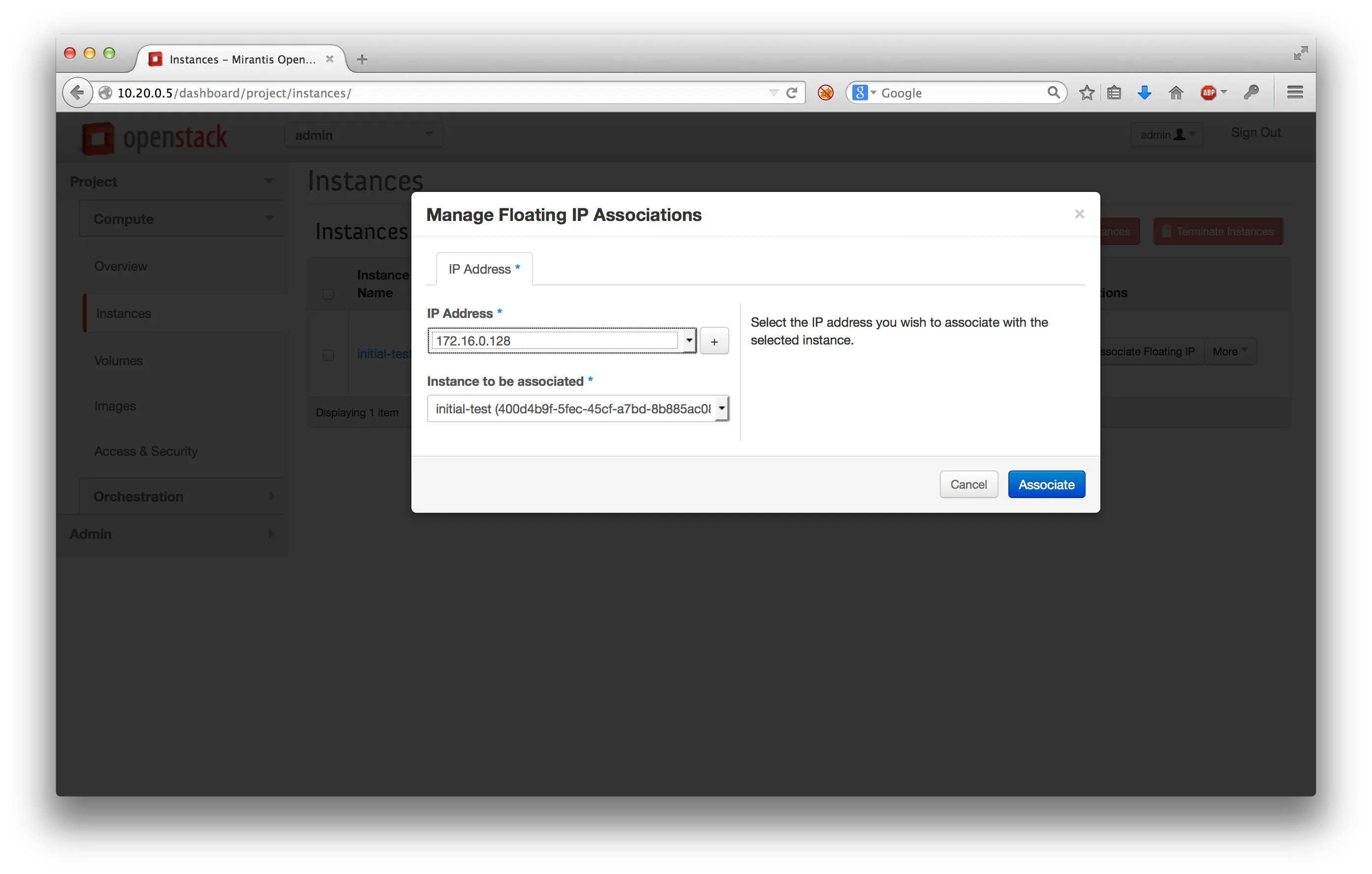

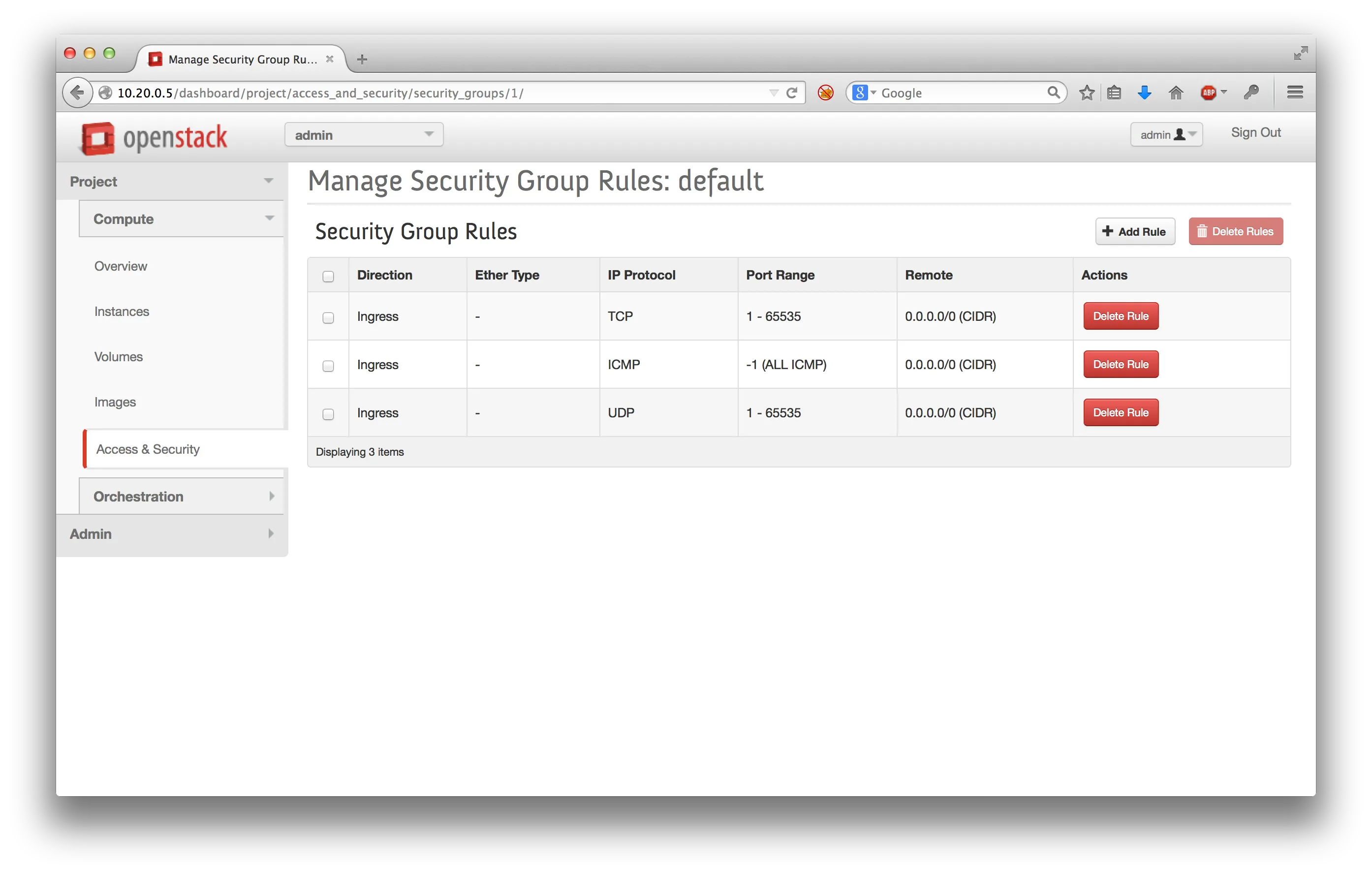

To reach the test VM, make sure to assign a floating IP and allow (at least) ICMP and SSH in the security group settings:
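The same settings can also be applied from the command line with the `nova` client of that release instead of the dashboard. The following is a sketch under a few assumptions: the security group is called `default`, the instance is named `test-vm` (a made-up name), and 172.16.0.128 is a floating IP that has already been allocated. By default the script only prints the commands; set `DRY_RUN=0` to actually run them against your environment.

```shell
#!/bin/sh
# Print the command by default; execute it only when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Allow ICMP (all types) and SSH (TCP port 22) from anywhere.
open_icmp_and_ssh() {
    group=${1:-default}
    run nova secgroup-add-rule "$group" icmp -1 -1 0.0.0.0/0
    run nova secgroup-add-rule "$group" tcp 22 22 0.0.0.0/0
}

# Attach an already allocated floating IP ($2) to an instance ($1).
assign_floating_ip() {
    run nova add-floating-ip "$1" "$2"
}

open_icmp_and_ssh default
assign_floating_ip test-vm 172.16.0.128   # instance name is hypothetical
```

Opening the rules to 0.0.0.0/0 is fine for a local test setup like this one; on a shared network you would restrict the CIDR.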

Now you should be able to ping the floating IP and log into the test VM from your host machine with the credentials cirros:cubswin:) as shown here:

```
$ ping 172.16.0.128
PING 172.16.0.128 (172.16.0.128): 56 data bytes
64 bytes from 172.16.0.128: icmp_seq=0 ttl=63 time=1.118 ms
64 bytes from 172.16.0.128: icmp_seq=1 ttl=63 time=0.683 ms
64 bytes from 172.16.0.128: icmp_seq=2 ttl=63 time=0.763 ms
```

You can also test the installation with the OpenStack command-line clients, using the credentials and endpoints found in the dashboard under "Access and Security". A good test is to upload a new image (e.g. Ubuntu 14.04 LTS) to the image service and then launch it:

```
$ glance image-create --name Ubuntu1404 --file Documents/Development/iso/trusty64.qcow2 --container-format bare --disk-format qcow2 --is-public true --progress
[=============================>] 100%
+------------------+--------------------------------------+
| Property         | Value                                |
+------------------+--------------------------------------+
| checksum         | 040a20a402b8eff2afe4ce409c8688ac     |
| container_format | bare                                 |
| created_at       | 2014-10-12T10:05:55                  |
| deleted          | False                                |
| deleted_at       | None                                 |
| disk_format      | qcow2                                |
| id               | 0721d7d1-a21b-4851-a6ea-b0bb4505fa04 |
| is_public        | True                                 |
| min_disk         | 0                                    |
| min_ram          | 0                                    |
| name             | Ubuntu1404                           |
| owner            | 4323809bb3154929827b11df0c891da9     |
| protected        | False                                |
| size             | 255066624                            |
| status           | active                               |
| updated_at       | 2014-10-12T10:06:05                  |
| virtual_size     | None                                 |
+------------------+--------------------------------------+
```
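To launch the uploaded image, the `id` from the table can be passed to `nova boot`. The sketch below pulls a property out of the table output and prints the resulting boot command. The helper `table_value`, the instance name `ubuntu-test` and the flavor `m1.small` are assumptions for this example (the flavor has to fit your compute nodes), and the sample rows are copied from the output above. Set `DRY_RUN=0` to run the command for real.

```shell
#!/bin/sh
# Print the command by default; execute it only when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: read a "| Property | Value |" table on stdin
# and print the value belonging to the property named in $1.
table_value() {
    awk -F'|' -v key="$1" '
        { gsub(/^[ \t]+|[ \t]+$/, "", $2); gsub(/^[ \t]+|[ \t]+$/, "", $3) }
        $2 == key { print $3; exit }'
}

# A few rows of the glance output shown above.
sample='| Property | Value |
| id | 0721d7d1-a21b-4851-a6ea-b0bb4505fa04 |
| name | Ubuntu1404 |
| status | active |'

image_id=$(printf '%s\n' "$sample" | table_value id)
run nova boot --flavor m1.small --image "$image_id" ubuntu-test
```

In a live session you would pipe the real `glance image-create` or `glance image-show` output into `table_value` instead of the sample text.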
