
You need a working VirtualBox environment. For SLES, have a look at this blog post: Install Virtualbox on SLES 11 SP3.

As SLES and SUSECloud are commercial offerings, you have to register to be able to download the ISO images.

Again, you must register to be able to download the base image.

Now for the hardware requirements. We want to build a minimal, viable OpenStack with multiple Nova zones and Ceph/Cinder-backed storage.

This requires about 13 GB of RAM.

To build the environment, I have prepared some scripts. Download or clone them from GitHub: crowbar-virtualbox

```
git clone https://github.com/iteh/crowbar-virtualbox.git
cd crowbar-virtualbox
```

Copy the config file to customize it. We will use the default settings, so this is just for reference.

```
cp config.sh.example config.sh
cat config.sh

CONFIG_SH_SOURCED=1

NODE_PREFIX="testcluster-"

ADMIN_MEMORY=4096
COMPUTE_MEMORY=2048
STORAGE_MEMORY=1024
GATEWAY_MEMORY=1024

NUMBER_OPENSTACK_NODES=3
NUMBER_STORAGE_NODES=3

NUMBER_COMPUTE_NICS=6
NUMBER_STORAGE_NICS=4
NUMBER_ADMIN_NICS=6
NUMBER_GATEWAY_NICS=6

# one of Am79C970A|Am79C973|82540EM|82543GC|82545EM|virtio

IF_TYPE=82545EM

VBOXNET_4_IP="192.168.124.1"
VBOXNET_5_IP="0.0.0.0"
VBOXNET_6_IP="0.0.0.0"
VBOXNET_7_IP="0.0.0.0"
VBOXNET_8_IP="0.0.0.0"
VBOXNET_9_IP="0.0.0.0"
VBOXNET_10_IP="10.11.12.1"

VBOXNET_MASK="255.255.255.0"
```
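The 13 GB figure from the hardware requirements can be reproduced from these defaults. This is a minimal sketch with a hypothetical function name. It assumes, without checking create_testcluster.sh, the following memory allocation:

- The admin VM gets ADMIN_MEMORY.
- Each of the NUMBER_OPENSTACK_NODES nodes gets COMPUTE_MEMORY.
- Each of the NUMBER_STORAGE_NODES nodes gets STORAGE_MEMORY.
- No gateway VM is created.

```sh
#!/bin/sh
# Hypothetical helper: estimate the host RAM (in MB) the test cluster needs.
# Assumption: admin VM = ADMIN_MEMORY, each OpenStack node = COMPUTE_MEMORY,
# each storage node = STORAGE_MEMORY; GATEWAY_MEMORY is not counted because
# no gateway VM is created in this setup.
# Unset variables fall back to the values from config.sh.example.
required_memory_mb() {
    admin_mb=${ADMIN_MEMORY:-4096}
    openstack_mb=$(( ${NUMBER_OPENSTACK_NODES:-3} * ${COMPUTE_MEMORY:-2048} ))
    storage_mb=$(( ${NUMBER_STORAGE_NODES:-3} * ${STORAGE_MEMORY:-1024} ))
    echo $(( admin_mb + openstack_mb + storage_mb ))
}
```

With the defaults, `. ./config.sh; required_memory_mb` prints 13312. That is 4096 + 3 * 2048 + 3 * 1024 MB, or 13 GB.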

Create the test cluster, passing in the ISO image. The script prints a lot of output.

```
./create_testcluster.sh /home/ehaselwanter/SLES_11_SP3_JeOS.x86_64-0.0.1.preload.iso
```

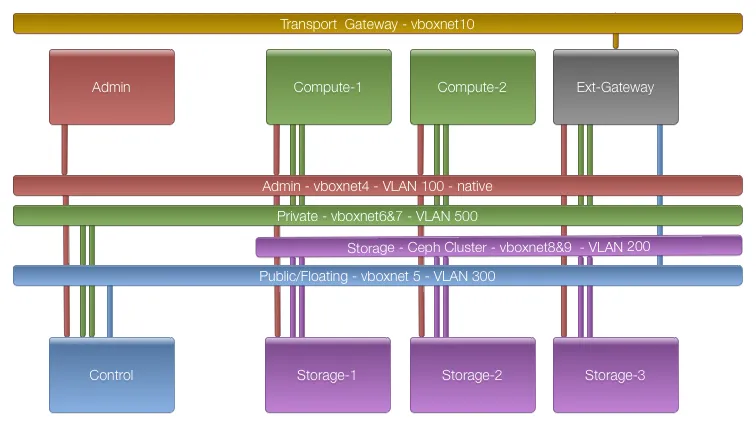

We now have vboxnet4 to vboxnet9, as well as 7 machines wired as shown in the image above. The host-only interfaces start at vboxnet4 because other VirtualBox VMs on the same host will most likely already use host-only interfaces starting at vboxnet0.

```
ip a | grep vboxnet
4: vboxnet0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 1000
5: vboxnet1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 1000
6: vboxnet2: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 1000
7: vboxnet3: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 1000
8: vboxnet4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 1000
    inet 192.168.124.1/24 brd 192.168.124.255 scope global vboxnet4
9: vboxnet5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 1000
    inet 192.168.125.1/24 brd 192.168.125.255 scope global vboxnet5
10: vboxnet6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 1000
    inet 192.168.122.1/24 brd 192.168.122.255 scope global vboxnet6
11: vboxnet7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 1000
    inet 192.168.123.1/24 brd 192.168.123.255 scope global vboxnet7
12: vboxnet8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 1000
    inet 192.168.128.1/24 brd 192.168.128.255 scope global vboxnet8
13: vboxnet9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 1000
    inet 192.168.129.1/24 brd 192.168.129.255 scope global vboxnet9
```

```
VBoxManage list vms | grep testcluster
"testcluster-admin" {d12731a2-f25c-4b70-929e-d11a80c19c30}
"testcluster-node-1" {64b29a6d-f645-48a7-9a8a-1800db4a3267}
"testcluster-node-2" {80aacb6e-9f40-4307-989d-be0b4fb3aa9e}
"testcluster-node-3" {6cae8548-d94b-4a67-8f20-76c878537c5b}
"testcluster-node-4" {3ca15c4b-1818-45b2-a61a-e09893f3852f}
"testcluster-node-5" {325daa9a-6d38-4985-b86c-438813c79f44}
"testcluster-node-6" {33509bf5-a369-4146-8e3b-e1c4054a8883}
```

Boot up the admin node:

```
VBoxManage startvm testcluster-admin --type headless
VBoxManage controlvm testcluster-admin vrde on
```

Now we can watch the progress with a Remote Desktop (RDP) client. I use the Microsoft Remote Desktop Connection Client and connect to `<host-ip>:5010`.

The preload ISO asks whether it should install to disk. Here we need a little trick. Use the arrow keys (down, up, down; NOT right, left, right!) to switch back and forth between Yes and No, then hit Return on Yes. Just hitting Return aborted the installation for me. After the installation you are asked to accept the EULA; say yes if you want to install SUSECloud 3.

Our networking setup is complex, so we need to come up with a network.json tailored to it.

SUSE did a great job documenting this: Appendix C. The Network Barclamp Template File.

We have to make sure our network devices are configured the right way. Some background information can be found here: Linux Enumeration of NICs.

TL;DR: Network interface cards are numbered in an order determined by algorithms that differ between Linux distributions and kernel versions. There are several ways to deal with this. The Crowbar/SUSECloud approach is to abstract the naming away internally and to determine the physical layout from mapping information provided to the system. The hard facts are encoded in the bus order and the udev rules.

The scripts connect vboxnet($i+3) to hostonlyadapter$i. According to the image above, the admin network is therefore on vboxnet4, which is connected to hostonlyadapter1. That is the outside view.

The admin node is reachable from the host at 192.168.124.10 only if its IP address is configured on the matching ethX device inside the VM, and most of the time it is not. Check whether you can ssh into the admin node now; it will not work:

```
crowbar-virtualbox$ ssh 192.168.124.10
ssh: connect to host 192.168.124.10 port 22: No route to host
```

This is because SLES named the interfaces without knowing anything about the outside world. How do we fix this? We have to move the IP configuration to the correct interface, the one connected to vboxnet4.

We can use additional information we have about the system. The scripts assign MAC addresses in the order of the host-only adapters: hostonlyadapter$i gets the MAC address c0ffee000${N}0${i}, where N is the number of the machine (N=0 for the admin). So the ethX interface we are looking for is the one with the MAC address c0:ff:ee:00:00:01.
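The addressing scheme is easy to reproduce when you need the MAC address for another machine or adapter. Below is a small sketch of hypothetical helpers; they are not part of crowbar-virtualbox. They encode the two rules stated above, vboxnet($i+3) and c0ffee000${N}0${i}, and they only hold for single-digit N and i:

```sh
#!/bin/sh
# Hypothetical helpers encoding the crowbar-virtualbox addressing scheme
# described in the text. Valid only for single-digit machine/adapter numbers.

# MAC address of hostonlyadapter$2 on machine $1 (c0ffee000${N}0${i}).
vbox_mac() {
    printf 'c0:ff:ee:00:0%d:0%d\n' "$1" "$2"
}

# Host-only network that hostonlyadapter$1 is attached to: vboxnet($i+3).
vbox_net() {
    printf 'vboxnet%d\n' $(( $1 + 3 ))
}

# Print "adapter network mac" for adapters 1..$2 of machine $1.
vbox_layout() {
    i=1
    while [ "$i" -le "$2" ]; do
        printf 'hostonlyadapter%d %s %s\n' "$i" "$(vbox_net "$i")" "$(vbox_mac "$1" "$i")"
        i=$(( i + 1 ))
    done
}
```

For example, `vbox_layout 0 6` lists the six adapters of the admin node. The first line is `hostonlyadapter1 vboxnet4 c0:ff:ee:00:00:01`.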

Log in to the node on the RDP console (username: root, password: linux).

```
admin:~ # ip a|grep -B1 00:01
7: eth5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether c0:ff:ee:00:00:01 brd ff:ff:ff:ff:ff:ff
```

So eth5 is connected to vboxnet4. Let's fix this:

```
admin:~ # mv /etc/sysconfig/network/ifcfg-eth0 /etc/sysconfig/network/ifcfg-eth5
admin:~ # /etc/init.d/network restart
```
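The lookup and rename can also be scripted inside the VM. This is a minimal sketch with hypothetical function names. It reads the MAC addresses from /sys/class/net/*/address and only prints the mv command instead of running it. It assumes, as in this walkthrough, that the installer wrote the admin IP configuration to ifcfg-eth0:

```sh
#!/bin/sh
# Hypothetical helpers: find the interface that owns a MAC address and
# suggest the ifcfg rename. Nothing is changed on the system.

# Print the interface whose MAC equals $1. $2 optionally overrides the
# sysfs net directory (default /sys/class/net), e.g. for a test tree.
iface_for_mac() {
    want=$(printf '%s' "$1" | tr 'A-F' 'a-f')
    netdir=${2:-/sys/class/net}
    for dev in "$netdir"/*; do
        [ -r "$dev/address" ] || continue
        if [ "$(cat "$dev/address")" = "$want" ]; then
            basename "$dev"
            return 0
        fi
    done
    return 1
}

# Print the mv command that moves the (assumed) ifcfg-eth0 config to the
# interface owning MAC $1; print nothing if that interface already is eth0.
suggest_ifcfg_move() {
    target=$(iface_for_mac "$1" "$2") || return 1
    if [ "$target" != eth0 ]; then
        echo "mv /etc/sysconfig/network/ifcfg-eth0 /etc/sysconfig/network/ifcfg-$target"
    fi
}
```

On the admin node, `suggest_ifcfg_move c0:ff:ee:00:00:01` should print the same mv command as above. Review it before running it and restarting the network.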

Now we can connect from the host to the admin node via vboxnet4:

```
~/crowbar-virtualbox$ ssh 192.168.124.10
The authenticity of host '192.168.124.10 (192.168.124.10)' can't be established.
ECDSA key fingerprint is e9:63:b6:1f:03:38:e4:ad:06:56:6e:a7:4e:f2:2f:01.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '192.168.124.10' (ECDSA) to the list of known hosts.
Password:
```

This step was manual. Crowbar/SUSECloud automates it based on configuration; we just have to provide the information it needs. First we need the system identifier to build a bus_order list, which maps the physical NICs into a deterministic order.

```
admin:~ # dmidecode -s system-product-name
VirtualBox
```

This yields VirtualBox as our pattern parameter value. Next we have to order our networking devices according to the information we know about the system:

```
admin:~ # ls -lgG /sys/class/net/ | grep eth | awk '{print $7,$8,$9}'
eth0 -> ../../devices/pci0000:00/0000:00:03.0/net/eth0
eth1 -> ../../devices/pci0000:00/0000:00:08.0/net/eth1
eth2 -> ../../devices/pci0000:00/0000:00:09.0/net/eth2
eth3 -> ../../devices/pci0000:00/0000:00:0a.0/net/eth3
eth4 -> ../../devices/pci0000:00/0000:00:10.0/net/eth4
eth5 -> ../../devices/pci0000:00/0000:00:11.0/net/eth5
```

This is the ordering the system provides. We do not care about the names, just about the order we know from the outside world. We can reverse engineer this from the udev rules.

```
admin:~ # cat /etc/udev/rules.d/70-persistent-net.rules|grep SUBSYSTEM | sort -k4 | awk '{print $4,$8;}'
ATTR{address}=="c0:ff:ee:00:00:01", NAME="eth5"
ATTR{address}=="c0:ff:ee:00:00:02", NAME="eth1"
ATTR{address}=="c0:ff:ee:00:00:03", NAME="eth2"
ATTR{address}=="c0:ff:ee:00:00:04", NAME="eth3"
ATTR{address}=="c0:ff:ee:00:00:05", NAME="eth4"
ATTR{address}=="c0:ff:ee:00:00:06", NAME="eth0"
```

So the order we want the system to use is eth5, eth1, eth2, eth3, eth4, eth0. Translated to PCI bus IDs and in JSON form:

```
"interface_map": [
  {
    "bus_order": [
      "0000:00/0000:00:11.0",
      "0000:00/0000:00:08.0",
      "0000:00/0000:00:09.0",
      "0000:00/0000:00:0a.0",
      "0000:00/0000:00:10.0",
      "0000:00/0000:00:03.0"
    ],
    "pattern": "VirtualBox"
  },
```

We come back to this partial json after installing the admin node packages.
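The translation from udev rules and sysfs links to bus_order can be automated. This sketch uses hypothetical function names and rests on three assumptions:

- The MACs were assigned in adapter order, so sorting the rules by MAC yields the order seen from the outside.
- The rules file uses the usual `ATTR{address}==... NAME=...` layout shown above.
- Every NIC sits directly on the root PCI bus, as in VirtualBox. Devices behind a PCI bridge are not handled.

```sh
#!/bin/sh
# Sketch: derive the Crowbar bus_order from udev rules and sysfs links.
# Assumes MACs were assigned in adapter order and NICs sit directly on the
# root PCI bus. Function names are hypothetical.

# Print "MAC NAME" pairs from the udev rules ($1), sorted by MAC.
udev_mac_names() {
    sed -n 's/.*ATTR{address}=="\([^"]*\)".*NAME="\([^"]*\)".*/\1 \2/p' \
        "${1:-/etc/udev/rules.d/70-persistent-net.rules}" | sort
}

# Print one PCI id per line (e.g. 0000:00/0000:00:11.0) in MAC order.
# $2 optionally overrides the sysfs net directory (default /sys/class/net).
bus_order_list() {
    netdir=${2:-/sys/class/net}
    udev_mac_names "$1" | while read -r mac name; do
        # e.g. ../../devices/pci0000:00/0000:00:11.0/net/eth5 -> 0000:00/0000:00:11.0
        readlink "$netdir/$name" | sed 's#.*/pci\([^/]*\)/\([^/]*\)/net/.*#\1/\2#'
    done
}

# Wrap the list in the "bus_order" JSON array used in interface_map.
bus_order_json() {
    echo '"bus_order": ['
    # Quote every entry, append a comma, then drop the comma on the last line.
    bus_order_list "$1" "$2" | sed 's/.*/  "&",/;$s/,$//'
    echo ']'
}
```

On the admin node, `bus_order_json` with no arguments should print the same six entries as the hand-written list above.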

SUSECloud 3 needs access to the SLES 11 SP3 repositories and the SUSECloud 3 repositories. We have already downloaded the ISO images, so the next step is to make them available to the admin node. Copy the ISO images from the host to the admin node:

```
scp SUSE-CLOUD-3-x86_64-GM-DVD1.iso root@192.168.124.10:
scp SLES-11-SP3-DVD-x86_64-GM-DVD1.iso root@192.168.124.10:
```

We could attach the ISOs to the VM in VirtualBox or copy their contents to disk, but loop-mounting the ISOs is sufficient, so we go with that:

```
echo "/root/SLES-11-SP3-DVD-x86_64-GM-DVD1.iso /srv/tftpboot/suse-11.3/install/ auto loop" >> /etc/fstab
echo "/root/SUSE-CLOUD-3-x86_64-GM-DVD1.iso /srv/tftpboot/repos/Cloud auto loop" >> /etc/fstab
# The mount points must exist before the fstab entries can be mounted
mkdir -p /srv/tftpboot/suse-11.3/install /srv/tftpboot/repos/Cloud
mount -a
zypper ar -c -t yast2 "/srv/tftpboot/suse-11.3/install/" "SUSE-Linux-Enterprise-Server-11-SP3"
zypper ar -c -t yast2 "/srv/tftpboot/repos/Cloud" "Cloud"
```
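Appending with `>>` adds a duplicate fstab line every time these commands are run again on the same VM. Below is a small idempotent alternative with a hypothetical helper name. It appends a line only when exactly that line is not already in the file:

```sh
#!/bin/sh
# Hypothetical helper: append line $1 to an fstab-style file ($2, default
# /etc/fstab) unless an identical line is already present.
add_fstab_line() {
    file=${2:-/etc/fstab}
    # -x: whole-line match, -F: fixed string (no regex), -q: quiet
    if ! grep -qxF -- "$1" "$file" 2>/dev/null; then
        printf '%s\n' "$1" >> "$file"
    fi
}
```

Call it as `add_fstab_line "/root/SUSE-CLOUD-3-x86_64-GM-DVD1.iso /srv/tftpboot/repos/Cloud auto loop"`, and likewise for the SLES ISO.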

To test whether this works, and because we might want to use YaST to configure networking, we install yast2-network:

```
zypper ref
zypper up
zypper in yast2-network
```

Now we are ready to install the cloud_admin pattern on the admin node:

```
zypper in -t pattern cloud_admin
```

The install-chef-suse.sh script we will use later does some sanity checking. One thing it needs is a proper FQDN for the admin node:

```
admin:~ # echo "192.168.124.10 admin.suse-testbed.de admin" >> /etc/hosts
admin:~ # echo "admin" > /etc/HOSTNAME
admin:~ # hostname -F /etc/HOSTNAME
admin:~ # hostname
admin
admin:~ # hostname -f
admin.suse-testbed.de
```
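A quick pre-flight check for the FQDN can save an aborted installer run. The helper below is hypothetical. It only tests two things: `hostname -f` must have a domain part, and the first label must equal `hostname`. It does not reproduce the checks install-chef-suse.sh actually performs:

```sh
#!/bin/sh
# Hypothetical pre-flight check, not the installer's own logic.
# $1: output of `hostname -f`, $2: output of `hostname`
fqdn_ok() {
    case $1 in
        ?*.?*) ;;          # at least one character on both sides of a dot
        *) return 1 ;;     # no domain part: not fully qualified
    esac
    # The first label of the FQDN must be the short hostname.
    [ "${1%%.*}" = "$2" ]
}
```

Usage: `fqdn_ok "$(hostname -f)" "$(hostname)" && echo "FQDN looks fine"`.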

Another thing we want to customize is the network.json. We have to tell SUSECloud about our physical (well, virtual) environment: we want to configure logical networks that map to physical network cards. The default configuration is in /etc/crowbar/network.json, and we have to alter it.

The documentation says we can add our own conduit_map (C.4. Network Conduits), but I failed to do so. If you wonder, you can test any change on the command line with the validator. This is what I got after adding a "special" conduit_map:

```
admin:~ # /opt/dell/bin/network-json-validator --admin-ip 192.168.124.10 /etc/crowbar/network.json
Invalid mode 'special': must be one of 'single', 'dual', 'team'
```

So we alter one of the predefined modes instead. I have chosen "team", as we want to use bonding on several conduits. The first pattern that matches is applied, so the specific patterns (for 6 and 4 NICs) must be listed before the general one:

```json
"conduit_map": [
  {
    "conduit_list": {
      "intf0": {
        "if_list": [
          "1g1"
        ]
      },
      "intf1": {
        "if_list": [
          "1g2"
        ]
      },
      "intf2": {
        "if_list": [
          "+1g3",
          "+1g4"
        ]
      },
      "intf3": {
        "if_list": [
          "+1g5",
          "+1g6"
        ]
      }
    },
    "pattern": "team/6/nova-multi.*"
  },
  {
    "conduit_list": {
      "intf0": {
        "if_list": [
          "1g1"
        ]
      },
      "intf1": {
        "if_list": [
          "1g2"
        ]
      },
      "intf2": {
        "if_list": [
          "+1g3",
          "+1g4"
        ]
      },
      "intf3": {
        "if_list": [
          "+1g3",
          "+1g4"
        ]
      }
    },
    "pattern": "team/4/ceph.*"
  },
  {
    "conduit_list": {
      "intf0": {
        "if_list": [
          "1g1"
        ]
      },
      "intf1": {
        "if_list": [
          "1g2"
        ]
      },
      "intf2": {
        "if_list": [
          "+1g3",
          "+1g4"
        ]
      },
      "intf3": {
        "if_list": [
          "+1g5",
          "+1g6"
        ]
      }
    },
    "pattern": "team/6/admin"
  },
  {
    "conduit_list": {
      "intf0": {
        "if_list": [
          "1g1"
        ]
      },
      "intf1": {
        "if_list": [
          "+1g2"
        ]
      },
      "intf2": {
        "if_list": [
          "+1g3",
          "+1g4"
        ]
      },
      "intf3": {
        "if_list": [
          "+1g5",
          "+1g6"
        ]
      }
    },
    "pattern": "team/.*/.*"
  },
```

Details about the format and parameters can be found in C.4. Network Conduits.
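A simplified model of first-match selection shows why the order matters. The model is an assumption, not Crowbar's code:

- A node is described by a single string, `<mode>/<NIC count>/<role>`.
- Each pattern is matched against that string as a whole-string extended regex.

Crowbar may instead match the three parts separately, and against several roles. The role names used in the test and usage example (ceph-osd, nova-multi-compute-kvm) are illustrative:

```sh
#!/bin/sh
# Simplified, hypothetical model of "first matching conduit_map pattern wins".
# $1: node description "<mode>/<nic count>/<role>"
# remaining arguments: patterns in the order they appear in conduit_map
first_conduit_pattern() {
    node=$1
    shift
    for pattern in "$@"; do
        # -E: extended regex, -x: the whole string must match, -q: quiet
        if printf '%s\n' "$node" | grep -Eqx -- "$pattern"; then
            printf '%s\n' "$pattern"
            return 0
        fi
    done
    return 1
}
```

`first_conduit_pattern team/4/ceph-osd 'team/6/nova-multi.*' 'team/4/ceph.*' 'team/6/admin' 'team/.*/.*'` prints `team/4/ceph.*`. Moving `'team/.*/.*'` to the front makes the catch-all win for every node.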

Some additional advice: I tried to define only the minimal conduit list needed for each pattern to match the desired configuration shown in the image above. Again, the validator stopped me. One of the errors I got was:

```
admin:~ # /opt/dell/bin/network-json-validator --admin-ip 192.168.124.10 /etc/crowbar/network.json
Conduit list with pattern 'team/.*/ceph.*' does not have the same list of conduits as catch-all conduit list for mode 'team' (with pattern 'team/.*/.*'): 'intf0, intf3' instead of 'intf0, intf1, intf2'.
```

So I tried adding intf0 to intf3 to all patterns, but with an empty list of interfaces (the Ceph nodes have only 4 NICs, so why define every intfX on them?), like so:

```json
"intf2": {
  "if_list": [
    "",
    ""
  ]
},
```

The validator no longer complained, but later the installer failed because some of its code cannot handle if_lists containing empty strings.

We now have the bus_order to get the network interface order right, and the conduit_map to address the networks by consistent names. The one thing missing is the L3 information: which IP ranges, VLAN tags, etc. to use on top of the broadcast domains.

```json
"networks": {
  "admin": {
    "add_bridge": false,
    "broadcast": "192.168.124.255",
    "conduit": "intf0",
    "netmask": "255.255.255.0",
    "ranges": {
      "admin": {
        "end": "192.168.124.11",
        "start": "192.168.124.10"
      },
      "dhcp": {
        "end": "192.168.124.80",
        "start": "192.168.124.21"
      },
      "host": {
        "end": "192.168.124.160",
        "start": "192.168.124.81"
      },
      "switch": {
        "end": "192.168.124.250",
        "start": "192.168.124.241"
      }
    },
    "router": "192.168.124.1",
    "router_pref": 10,
    "subnet": "192.168.124.0",
    "use_vlan": false,
    "vlan": 100
  },
  "bmc": {
    "add_bridge": false,
    "broadcast": "192.168.124.255",
    "conduit": "bmc",
    "netmask": "255.255.255.0",
    "ranges": {
      "host": {
        "end": "192.168.124.240",
        "start": "192.168.124.162"
      }
    },
    "subnet": "192.168.124.0",
    "use_vlan": false,
    "vlan": 100
  },
  "bmc_vlan": {
    "add_bridge": false,
    "broadcast": "192.168.124.255",
    "conduit": "intf2",
    "netmask": "255.255.255.0",
    "ranges": {
      "host": {
        "end": "192.168.124.161",
        "start": "192.168.124.161"
      }
    },
    "subnet": "192.168.124.0",
    "use_vlan": true,
    "vlan": 100
  },
  "nova_fixed": {
    "add_bridge": false,
    "broadcast": "192.168.123.255",
    "conduit": "intf2",
    "netmask": "255.255.255.0",
    "ranges": {
      "dhcp": {
        "end": "192.168.123.254",
        "start": "192.168.123.50"
      },
      "router": {
        "end": "192.168.123.49",
        "start": "192.168.123.1"
      }
    },
    "router": "192.168.123.1",
    "router_pref": 20,
    "subnet": "192.168.123.0",
    "use_vlan": true,
    "vlan": 500
  },
  "nova_floating": {
    "add_bridge": false,
    "broadcast": "192.168.126.191",
    "conduit": "intf1",
    "netmask": "255.255.255.192",
    "ranges": {
      "host": {
        "end": "192.168.126.191",
        "start": "192.168.126.129"
      }
    },
    "subnet": "192.168.126.128",
    "use_vlan": true,
    "vlan": 300
  },
  "os_sdn": {
    "add_bridge": false,
    "broadcast": "192.168.130.255",
    "conduit": "intf2",
    "netmask": "255.255.255.0",
    "ranges": {
      "host": {
        "end": "192.168.130.254",
        "start": "192.168.130.10"
      }
    },
    "subnet": "192.168.130.0",
    "use_vlan": true,
    "vlan": 400
  },
  "public": {
    "add_bridge": false,
    "broadcast": "192.168.126.255",
    "conduit": "intf1",
    "netmask": "255.255.255.0",
    "ranges": {
      "dhcp": {
        "end": "192.168.126.127",
        "start": "192.168.126.50"
      },
      "host": {
        "end": "192.168.126.49",
        "start": "192.168.126.2"
      }
    },
    "router": "192.168.126.1",
    "router_pref": 5,
    "subnet": "192.168.126.0",
    "use_vlan": true,
    "vlan": 300
  },
  "storage": {
    "add_bridge": false,
    "broadcast": "192.168.125.255",
    "conduit": "intf3",
    "netmask": "255.255.255.0",
    "ranges": {
      "host": {
        "end": "192.168.125.239",
        "start": "192.168.125.10"
      }
    },
    "subnet": "192.168.125.0",
    "use_vlan": true,
    "vlan": 200
  }
```

These are basically the default values from /etc/crowbar/network.json found in bc-template-network.json. What we want to change is as follows:

The final network.json (using mode:team in the top level attributes!) can be downloaded here: example network json
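Before uploading a modified network.json, the subnet, netmask, broadcast and range values can be cross-checked with a little arithmetic. The sketch below uses only shell arithmetic. The function names are hypothetical, and none of this is part of Crowbar:

```sh
#!/bin/sh
# Hypothetical helpers for checking the L3 values of a network entry.
# Shell arithmetic is at least 64-bit in bash and dash, so 32-bit
# addresses fit without overflow.

# Convert a dotted quad to an integer.
ip2int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address on dots into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Convert an integer back to a dotted quad.
int2ip() {
    echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Broadcast address = subnet OR inverted netmask, limited to 32 bits.
broadcast_of() {
    s=$(ip2int "$1"); m=$(ip2int "$2")
    int2ip $(( (s | ~m) & 0xFFFFFFFF ))
}

# Succeed if address $1 lies in subnet $2 with netmask $3.
in_subnet() {
    a=$(ip2int "$1"); s=$(ip2int "$2"); m=$(ip2int "$3")
    [ $(( a & m )) -eq $(( s & m )) ]
}
```

For example, `broadcast_of 192.168.126.128 255.255.255.192` prints 192.168.126.191, which matches the nova_floating entry. Likewise, `in_subnet 192.168.126.129 192.168.126.128 255.255.255.192` succeeds for the start of its host range.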

Another thing we must customize for this example is the location of a few mandatory SUSE repositories. These repositories can be downloaded/mirrored after subscribing to SLES11SP3 and SUSECloud3.

A detailed discussion of the repositories can be found in 2.2. Product and Update Repositories.

You have to provide access to the repos.

If you already have an SMT server up and running, add the repos there:

```
for REPO in SLES11-SP3-{Pool,Updates} SUSE-Cloud-3.0-{Pool,Updates}; do
  smt-repos "$REPO" sle-11-x86_64 -e
done
```

and configure the admin node to use them. The quoted 'EOF' delimiter keeps $RCE literal, as it is part of the SMT repository path; replace `<smt-server>` with your server:

```
admin:~ # cat > /etc/crowbar/provisioner.json <<'EOF'
{
  "attributes": {
    "provisioner": {
      "suse": {
        "autoyast": {
          "repos": {
            "SLES11-SP3-Pool": {
              "url": "http://<smt-server>/repo/$RCE/SLES11-SP3-Pool/sle-11-x86_64/"
            },
            "SLES11-SP3-Updates": {
              "url": "http://<smt-server>/repo/$RCE/SLES11-SP3-Updates/sle-11-x86_64/"
            },
            "SUSE-Cloud-3.0-Pool": {
              "url": "http://<smt-server>/repo/$RCE/SUSE-Cloud-3.0-Pool/sle-11-x86_64/"
            },
            "SUSE-Cloud-3.0-Updates": {
              "url": "http://<smt-server>/repo/$RCE/SUSE-Cloud-3.0-Updates/sle-11-x86_64/"
            }
          }
        }
      }
    }
  }
}
EOF
```
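If you set up several test clusters, you can generate provisioner.json instead of editing it by hand. This sketch uses a hypothetical function name. It reproduces the structure above for the four repositories and writes $RCE literally, as it appears in the SMT URLs:

```sh
#!/bin/sh
# Hypothetical generator for the provisioner.json shown above.
# $1: SMT server host name. $RCE is emitted literally (single-quoted format).
provisioner_json() {
    smt=$1
    printf '{\n  "attributes": {\n    "provisioner": {\n      "suse": {\n        "autoyast": {\n          "repos": {\n'
    first=1
    for repo in SLES11-SP3-Pool SLES11-SP3-Updates SUSE-Cloud-3.0-Pool SUSE-Cloud-3.0-Updates; do
        # Separate repo objects with a comma, but not before the first one.
        [ "$first" -eq 1 ] || printf ',\n'
        first=0
        printf '            "%s": {\n              "url": "http://%s/repo/$RCE/%s/sle-11-x86_64/"\n            }' "$repo" "$smt" "$repo"
    done
    printf '\n          }\n        }\n      }\n    }\n  }\n}\n'
}
```

For example: `provisioner_json smt.example.com > /etc/crowbar/provisioner.json`, where smt.example.com stands in for your own SMT server.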

With everything in place, you really want to take a snapshot of the admin machine so you can try out various customizations. Be aware: there might be dragons, especially with the network.json. On the host, run

```
VBoxManage snapshot testcluster-admin take "all-set-before-installer" --pause
0%...10%...20%...30%...40%...50%...60%...70%...80%...90%...100%
```

to have a snapshot to come back to. Restoring to this state is as simple as:

```
VBoxManage controlvm testcluster-admin poweroff
VBoxManage snapshot testcluster-admin restore all-set-before-installer
```
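You will probably restore the admin node more than once, so the two snapshot operations can be wrapped in small functions. This sketch uses hypothetical function names. VM and SNAPSHOT default to the names used here. Setting VBOXMANAGE=echo prints the commands instead of running them:

```sh
#!/bin/sh
# Hypothetical wrappers around the snapshot commands used in this post.
VM=${VM:-testcluster-admin}
SNAPSHOT=${SNAPSHOT:-all-set-before-installer}

# Take the snapshot while pausing the VM.
take_admin_snapshot() {
    ${VBOXMANAGE:-VBoxManage} snapshot "$VM" take "$SNAPSHOT" --pause
}

# Power the VM off, then roll it back to the snapshot. If the VM is already
# off, poweroff reports an error and the restore still runs.
restore_admin_snapshot() {
    ${VBOXMANAGE:-VBoxManage} controlvm "$VM" poweroff
    ${VBOXMANAGE:-VBoxManage} snapshot "$VM" restore "$SNAPSHOT"
}
```

Source the file on the host, then run `take_admin_snapshot` once and `restore_admin_snapshot` whenever an experiment goes wrong.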

Only one step is missing: installing the admin node.

```
screen /opt/dell/bin/install-chef-suse.sh
```

After the installer has finished, you can access the SUSECloud 3 admin web interface on port 3000 of the admin node (192.168.124.10) via vboxnet4.

Please add issues/wishes to the comments here or on https://github.com/iteh/crowbar-virtualbox
